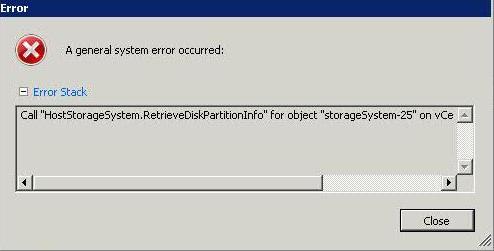

This alarm may appear in the vCenter vSphere Client, possibly accompanied by a warning message similar to one of these:

- Non-VI Workload detected on the datastore

- An external I/O workload is detected on datastore XYZABC

This informational event alerts the user to a potential misconfiguration or I/O performance issue caused by a non-ESX workload. It is triggered when Storage I/O Control (SIOC) detects that a workload it does not manage is contributing to I/O congestion on a datastore that it does manage. (Congestion is defined as a datastore’s response time being above the SIOC threshold.) Specific situations that can trigger this event include:

- The host is running in an unsupported configuration.

- The storage array is performing a system operation such as replication or RAID reconstruction.

- VMware Consolidated Backup or vStorage APIs for Data Protection are accessing a snapshot on the datastore for backup purposes.

- The storage media (spindles, SSD) on which this datastore is located are shared with volumes used by non-vSphere workloads.

SIOC continues to work during these situations. In many cases this event can be ignored, and you can disable the associated alarm once you have verified that none of the potential misconfigurations or serious performance issues are present in your environment. As explained in detail below, SIOC ensures that the ESX workloads it manages can compete for I/O resources on an equal footing with external workloads. This event tells the user that such interference is occurring and highlights a potential opportunity to correct or optimize the infrastructure configuration.

NOTE: At this time, SIOC is not supported with NFS storage or with Raw Device Mapping (RDM) virtual disks, including RDMs used for MSCS (Microsoft Cluster Server). It is also not supported with datastores that have multiple extents. This alarm can occur if any of these storage objects are configured.

Example Scenario 1:

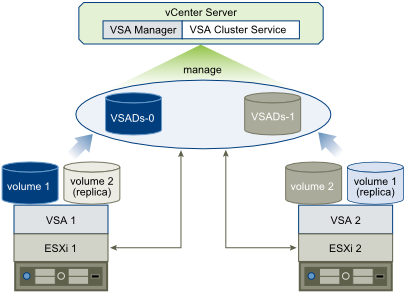

A /vmfs/volumes/shared-LUN datastore is accessible across multiple hosts. Some hosts are running ESX version 4.1 or later and others are either running an older version or are outside the control domain of vCenter Server.

Example Scenario 2:

The array being used for vSphere is also being used for non-vSphere workloads. The non-vSphere workloads are accessing a storage volume that is on the same disk spindles as the affected datastore.

Impact

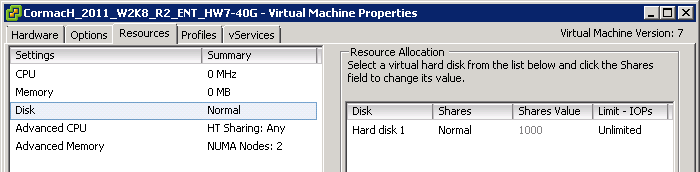

When SIOC detects that datastore response time has exceeded the threshold, it typically throttles the ESX workloads accessing the datastore to ensure that the workloads with the highest shares get preference for I/O access to the datastore and lower I/O response time. However, such throttling is not appropriate when workloads not managed by SIOC are accessing the same storage media. Throttling in this case would result in the external workload getting more and more bandwidth, while the vSphere workloads get less and less. Therefore, SIOC detects the presence of such external workloads, and as long as they are present while the threshold is being exceeded, SIOC competes with the interfering workload by curtailing its usual throttling activity.

SIOC automatically detects when the interference goes away and resumes its normal behavior. In this way, SIOC is able to operate correctly even in the presence of interference. The vCenter Server event is notifying the user that SIOC has noticed and handled the interference from external workloads.
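The behavior described above can be summarized as a small decision function. This is only an illustrative sketch of the logic as the article describes it, not VMware's implementation; the class, field names, and returned labels are hypothetical, and the "no-action" branch below the threshold is an assumption inferred from the definition of congestion.

```python
from dataclasses import dataclass


@dataclass
class DatastoreSample:
    """One hypothetical observation of a datastore (all names are illustrative)."""
    latency_ms: float        # observed datastore response time
    threshold_ms: float      # configured SIOC congestion threshold
    external_workload: bool  # True if an unmanaged workload was detected


def sioc_action(sample: DatastoreSample) -> str:
    """Return a label for the behavior the article describes for this sample."""
    congested = sample.latency_ms > sample.threshold_ms
    if not congested:
        # Assumption: below the threshold there is no congestion to manage.
        return "no-action"
    if sample.external_workload:
        # Throttling here would hand more bandwidth to the external workload,
        # so the usual throttling is curtailed while the interference lasts.
        return "curtail-throttling"
    # Normal case: throttle so workloads with the highest shares get preference.
    return "throttle-by-shares"
```

For example, a sample with a response time of 40 ms against a 30 ms threshold and an external workload present yields "curtail-throttling". Once the external workload is no longer detected, the same latency yields "throttle-by-shares", mirroring how SIOC resumes its normal behavior.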

Note: When an external workload is acting to drive the datastore response time above the SIOC threshold, the external workload might cause I/O performance issues for vSphere workloads. In most cases, SIOC can automatically and safely manage this situation. However, there may be an opportunity to improve performance by changing some aspects of your configuration. The next section provides guidance on this.

These unsupported configurations can result in the event:

- One or more hosts accessing the datastore are running an ESX version older than 4.1.

- One or more hosts accessing the datastore are not managed by vCenter Server.

- Not all of the hosts accessing the datastore are managed by the same vCenter Server.

- The storage media (spindles, SSD) where this datastore is located are shared with other datastores that are not SIOC enabled.

- Datastores in the configuration have multiple extents.
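The host-related and extent-related conditions in this list can be expressed as a simple check over an inventory. The sketch below uses hypothetical `Host` and `Datastore` records that you would populate from your own inventory data; it does not call any vSphere API, and the shared-media condition is left out because it requires knowledge of the array layout.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class Host:
    """Hypothetical host record; fields are assumptions for illustration."""
    name: str
    esx_version: Tuple[int, int]  # for example (4, 1)
    vcenter: Optional[str]        # None means not managed by any vCenter Server


@dataclass
class Datastore:
    """Hypothetical datastore record listing every host that accesses it."""
    name: str
    hosts: List[Host]
    extent_count: int = 1


def unsupported_reasons(ds: Datastore) -> List[str]:
    """List which of the unsupported conditions apply to this datastore."""
    reasons = []
    if any(h.esx_version < (4, 1) for h in ds.hosts):
        reasons.append("host running ESX older than 4.1")
    if any(h.vcenter is None for h in ds.hosts):
        reasons.append("host not managed by vCenter Server")
    managing = {h.vcenter for h in ds.hosts if h.vcenter is not None}
    if len(managing) > 1:
        reasons.append("hosts managed by different vCenter Servers")
    if ds.extent_count > 1:
        reasons.append("datastore has multiple extents")
    return reasons
```

An empty result means that none of these particular conditions were found; it does not by itself prove that the configuration is supported.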

Ensure that you are running a supported configuration:

- Can you disable and successfully re-enable congestion management for the affected datastore?

Disable and attempt to re-enable congestion management for the affected datastore. If the event occurred because the configuration includes hosts that are running an older version of ESX and the hosts are managed by the same vCenter Server, vCenter Server detects the problem and does not allow you to re-enable congestion management. When the older hosts are updated to ESX 4.1 or later, or the hosts are disconnected from the affected datastore, you can enable congestion management.

- Are hosts that are not managed by this vCenter Server accessing the affected datastore?

If disabling and re-enabling congestion management for the affected datastore does not solve the problem, other hosts that are not managed by this vCenter Server might be accessing the datastore.

Check whether the datastore is shared across hosts that are managed by different vCenter Server systems or are not managed at all. If so, perform one of these actions:

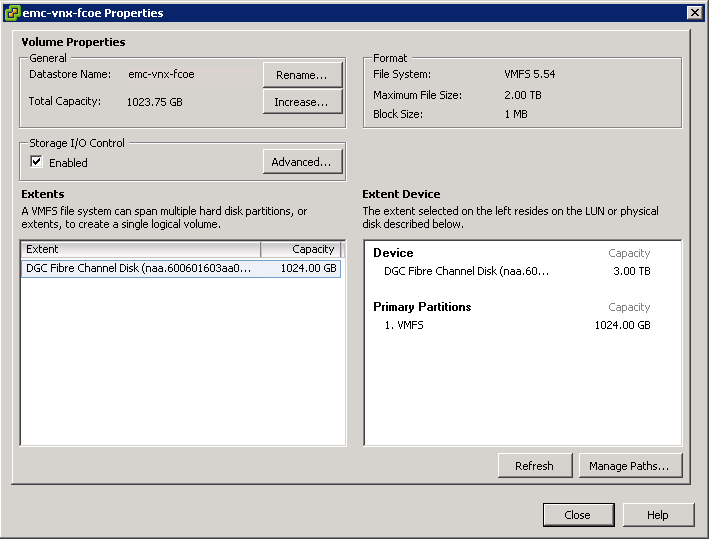

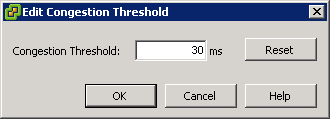

- Do all datastores in the configuration that share the same physical storage media (spindles, SSD) have the same SIOC configuration?

All datastores that share physical storage media must share the same SIOC configuration — all enabled or all disabled. In addition, if you have modified the default congestion threshold setting, all datastores that share storage media must have the same setting.

- Are any SIOC-enabled datastores in the configuration backed by multiple extents?

SIOC-enabled datastores must not be backed by multiple extents.
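The last two checks can be sketched as a consistency test across datastores that share physical media. The record type and the `media_id` grouping key below are hypothetical; identifying which datastores actually share spindles or SSDs depends on your array and is assumed to be known already. Comparing thresholds only among SIOC-enabled datastores is also an assumption of this sketch.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class DatastoreConfig:
    """Hypothetical per-datastore settings; field names are illustrative."""
    name: str
    media_id: str       # assumed label for the shared physical media
    sioc_enabled: bool
    threshold_ms: int   # congestion threshold setting
    extent_count: int = 1


def find_inconsistencies(datastores: List[DatastoreConfig]) -> Dict[str, List[str]]:
    """Group datastores by shared media and report configuration mismatches."""
    by_media = defaultdict(list)
    for ds in datastores:
        by_media[ds.media_id].append(ds)

    problems = defaultdict(list)
    for media_id, group in by_media.items():
        # All datastores on the same media must be all enabled or all disabled.
        if len({ds.sioc_enabled for ds in group}) > 1:
            problems[media_id].append("mixed SIOC enabled/disabled")
        enabled = [ds for ds in group if ds.sioc_enabled]
        # Enabled datastores on the same media must use the same threshold.
        if len({ds.threshold_ms for ds in enabled}) > 1:
            problems[media_id].append("differing congestion thresholds")
        # SIOC-enabled datastores must not be backed by multiple extents.
        for ds in enabled:
            if ds.extent_count > 1:
                problems[media_id].append(f"{ds.name} has multiple extents")
    return dict(problems)
```

An empty dictionary means that the supplied records are consistent with the two rules above.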

If none of the above scenarios apply to your configuration and you have determined that you are running a supported configuration, but are still seeing this event, investigate possible I/O throttling by the storage array.

If an environment is known to have shared access to datastores or performance constraints, it may be preferable to disable the alarm in vCenter Server. For more information, see Working with Alarms in the vSphere 4.1 Datacenter Administration Guide.

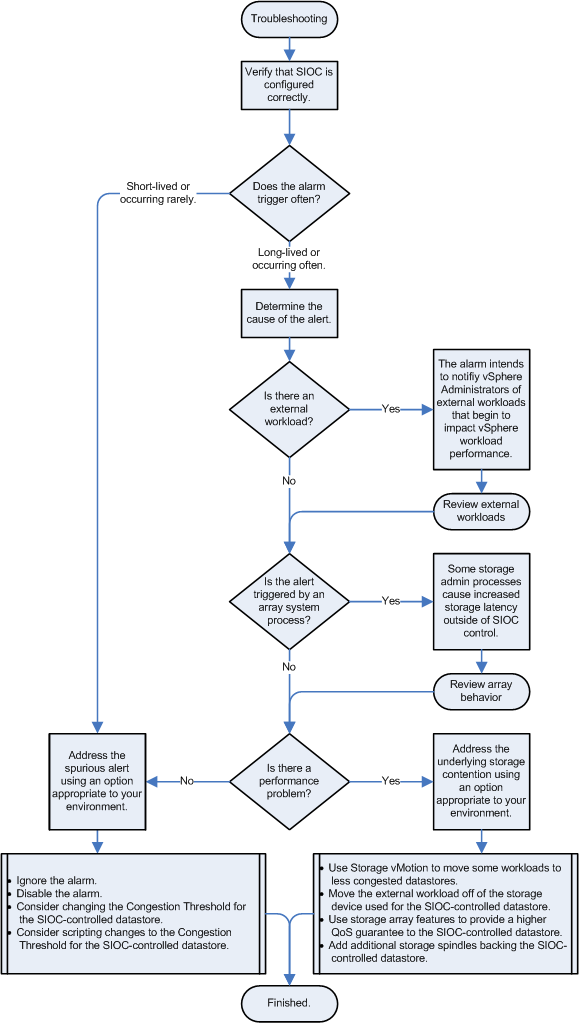

Flowchart for Troubleshooting