Benefits of NIC teaming include load balancing and failover. However, those policies affect outbound traffic only. To control inbound traffic, you have to involve the physical switches.

- Load balancing: Load balancing spreads network traffic from virtual machines on a virtual switch across two or more physical Ethernet adapters, providing higher throughput. NIC teaming offers several load-balancing options: routing based on the originating virtual switch port ID, on the source MAC hash, or on the IP hash.

- Failover: You can specify either Link Status or Beacon Probing for failover detection. Link Status relies solely on the link status of the network adapter. Failures such as cable pulls and physical switch power failures are detected, but configuration errors are not. Beacon Probing sends out beacon probes to detect upstream network connection failures, and it detects many of the failure types that Link Status alone misses. By default, NIC teaming applies a fail-back policy, whereby physical Ethernet adapters are returned to active duty immediately when they recover, displacing standby adapters.
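The fail-back behaviour described above can be sketched in a few lines of Python. This is only an illustrative model of Link Status detection, not ESX code: the `Uplink` class, the `pick_uplink` helper, and the `vmnic0`/`vmnic1` names are assumptions made for the example.

```python
from dataclasses import dataclass


@dataclass
class Uplink:
    """Hypothetical stand-in for a physical Ethernet adapter."""
    name: str
    link_up: bool = True


def pick_uplink(active, standby):
    """Return the first adapter whose link is up, trying active adapters first."""
    for uplink in list(active) + list(standby):
        if uplink.link_up:
            return uplink
    return None  # every adapter in the team is down


vmnic0 = Uplink("vmnic0")  # active adapter
vmnic1 = Uplink("vmnic1")  # standby adapter

before = pick_uplink([vmnic0], [vmnic1]).name  # normal operation

vmnic0.link_up = False                         # cable pull on the active adapter
during = pick_uplink([vmnic0], [vmnic1]).name  # standby takes over

vmnic0.link_up = True                          # link recovers
after = pick_uplink([vmnic0], [vmnic1]).name   # fail-back displaces the standby
```

Because the selection is re-evaluated from the top of the active list each time, the recovered adapter takes over again as soon as its link is up, which is the fail-back policy in miniature. A misconfigured upstream port would still report `link_up = True` in this model, which is why Link Status alone does not catch configuration errors.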

NIC Teaming Policies

| Network Teaming Setting | Description |
| --- | --- |
| Route based on the originating virtual port | Choose an uplink based on the virtual port where the traffic entered the virtual switch. |
| Route based on IP hash | Choose an uplink based on a hash of the source and destination IP addresses of each packet. For non-IP packets, whatever is at those offsets is used to compute the hash. Use this setting when EtherChannel is configured on the physical switch. |
| Route based on source MAC hash | Choose an uplink based on a hash of the source Ethernet MAC address. |
| Route based on physical NIC load | Choose an uplink based on the current loads of physical NICs. |
| Use explicit failover order | Always use the highest order uplink from the list of Active adapters which passes failover detection criteria. |
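
To make the IP hash policy more concrete, here is a minimal Python sketch. It assumes a simplified hash (XOR of the two addresses, modulo the number of uplinks); the exact computation ESX performs may differ, and the function name and adapter names are hypothetical.

```python
import ipaddress


def ip_hash_uplink(src_ip, dst_ip, uplinks):
    """Pick an uplink from a hash of source and destination IP (simplified model)."""
    src = int(ipaddress.ip_address(src_ip))
    dst = int(ipaddress.ip_address(dst_ip))
    # Assumption: XOR the addresses and take the remainder by the team size.
    return uplinks[(src ^ dst) % len(uplinks)]


uplinks = ["vmnic0", "vmnic1"]

# The same virtual machine talking to two different destinations
first = ip_hash_uplink("10.0.0.10", "10.0.0.20", uplinks)
second = ip_hash_uplink("10.0.0.10", "10.0.0.21", uplinks)
```

In this model the two conversations land on different uplinks, so a single virtual machine can use more than one physical adapter. The other policies pin a virtual adapter to one uplink, so this is the main practical difference.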

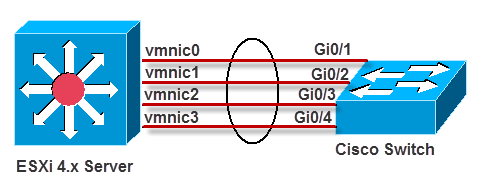

There are two ways of handling NIC teaming in VMware ESX:

- Without any physical switch configuration

- With physical switch configuration (EtherChannel, static LACP/802.3ad, or its equivalent)

There is a corresponding vSwitch configuration that matches each of these types of NIC teaming:

- For NIC teaming without physical switch configuration, the vSwitch must be set to “Route based on originating virtual port ID”, “Route based on source MAC hash”, or “Use explicit failover order”.

- For NIC teaming with physical switch configuration (EtherChannel, static LACP/802.3ad, or its equivalent), the vSwitch must be set to “Route based on IP hash”.
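
The pairing rule above can be written as a small consistency check. This is a hedged sketch: the constant names and the `policy_matches_switch` function are invented for illustration and are not part of any VMware tool or API.

```python
# Policies the text lists as valid when the physical switch has no special configuration
NO_SWITCH_CONFIG_POLICIES = {
    "Route based on originating virtual port ID",
    "Route based on source MAC hash",
    "Use explicit failover order",
}

# Policy the text requires when the switch ports are bundled (EtherChannel or equivalent)
SWITCH_CONFIG_POLICIES = {"Route based on IP hash"}


def policy_matches_switch(policy, switch_has_port_channel):
    """Return True if the vSwitch policy matches the physical switch setup."""
    if switch_has_port_channel:
        return policy in SWITCH_CONFIG_POLICIES
    return policy in NO_SWITCH_CONFIG_POLICIES


ok = policy_matches_switch("Route based on IP hash", switch_has_port_channel=True)
mismatch = policy_matches_switch("Route based on IP hash", switch_has_port_channel=False)
```

Only the policies named in the two bullets are modelled here; any other setting is reported as a mismatch simply because the text does not classify it.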

Considerations for NIC teaming without physical switch configuration

Something to be aware of when setting up NIC teaming without physical switch configuration is that you don’t get true load balancing as you do with EtherChannel. The following applies to the NIC teaming settings:

Route based on the originating virtual switch port ID

Choose an uplink based on the virtual port where the traffic entered the virtual switch. This is the default configuration and the one most commonly deployed.

When you use this setting, traffic from a given virtual Ethernet adapter is consistently sent to the same physical adapter unless there is a failover to another adapter in the NIC team.

Replies are received on the same physical adapter because the physical switch learns the port association.

* This setting provides an even distribution of traffic if the number of virtual Ethernet adapters is greater than the number of physical adapters.
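
A rough Python model shows why the distribution evens out only when there are more virtual adapters than physical ones. The modulo mapping is an assumption chosen for illustration, not the documented ESX algorithm, and the names are hypothetical.

```python
from collections import Counter


def uplink_for_port(port_id, uplinks):
    """Map a virtual switch port to a fixed uplink (assumed modulo mapping)."""
    return uplinks[port_id % len(uplinks)]


uplinks = ["vmnic0", "vmnic1"]

# Four virtual Ethernet adapters on virtual ports 0 to 3
placement = {port: uplink_for_port(port, uplinks) for port in range(4)}
per_uplink = dict(Counter(placement.values()))

# A single virtual adapter always lands on one uplink, whatever its traffic volume
single = {uplink_for_port(0, uplinks) for _ in range(1000)}
```

With four virtual ports the two uplinks carry two ports each, while a lone virtual adapter never uses more than one physical adapter. The balance is by port count, not by traffic volume.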

Route based on source MAC hash

Choose an uplink based on a hash of the source Ethernet MAC address.

When you use this setting, traffic from a given virtual Ethernet adapter is consistently sent to the same physical adapter unless there is a failover to another adapter in the NIC team.

Replies are received on the same physical adapter because the physical switch learns the port association.

* This setting provides an even distribution of traffic if the number of virtual Ethernet adapters is greater than the number of physical adapters.
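
The source MAC variant can be sketched in the same spirit. The hash used here (last byte of the MAC address modulo the team size) is an assumption for illustration only; the function name and MAC addresses are made up.

```python
def uplink_for_mac(mac, uplinks):
    """Pick an uplink from the source MAC address (assumed last-byte hash)."""
    last_byte = int(mac.replace("-", ":").split(":")[-1], 16)
    return uplinks[last_byte % len(uplinks)]


uplinks = ["vmnic0", "vmnic1"]

vm_a = uplink_for_mac("00:50:56:aa:bb:01", uplinks)
vm_b = uplink_for_mac("00:50:56:aa:bb:02", uplinks)
```

Each virtual adapter keeps its uplink for as long as its MAC address and the team membership stay the same. The spread across uplinks therefore depends on how the MAC addresses happen to hash.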