As mentioned, a zombie VMDK is usually a VMDK that is no longer used by any VM. You can double-check this by confirming whether the disk is still attached to the VM it should belong to. If it isn't, you can delete it from the datastore using the Datastore Browser. I would suggest moving it somewhere else first before you delete it, just in case.
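To make that double-check concrete, here is a minimal Python sketch that compares the VMDK descriptors found on a datastore with the disks your registered VMs reference. The inputs (a list of datastore file paths and a dict of VM name to attached disk paths) are hypothetical. You would gather them yourself from the Datastore Browser or an inventory export. Treat the result as a list of candidates to investigate, not a delete list.

```python
from typing import Dict, Iterable, List, Set


def find_zombie_vmdks(datastore_files: Iterable[str],
                      vm_disks: Dict[str, Iterable[str]]) -> List[str]:
    """Return VMDK descriptor paths that no registered VM references.

    datastore_files: every file path seen on the datastore (hypothetical input).
    vm_disks: VM name -> disk paths attached to that VM (hypothetical input).
    """
    attached: Set[str] = {path for disks in vm_disks.values() for path in disks}
    candidates = []
    for path in datastore_files:
        if not path.endswith(".vmdk"):
            continue
        # -flat / -delta / -ctk files hold data or change tracking for a
        # descriptor, so only the descriptors themselves are compared.
        if path.endswith(("-flat.vmdk", "-delta.vmdk", "-ctk.vmdk")):
            continue
        if path not in attached:
            candidates.append(path)
    return sorted(candidates)
```

For example, with `app01` attached to `[ds1] app01/app01.vmdk`, a leftover `[ds1] old/old.vmdk` is the only path reported.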

Storing a Virtual Machine Swapfile in a different location

By default, swapfiles for a virtual machine are located on a VMFS3 datastore in the folder that contains the other virtual machine files. However, you can configure your host to place virtual machine swapfiles on an alternative datastore.

Why move the Swapfiles?

- Place virtual machine swapfiles on lower-cost storage.

- Place virtual machine swapfiles on higher-performance storage.

- Place virtual machine swapfiles on non-replicated storage.

- Moving the swapfile to an alternate datastore is a useful troubleshooting step if the virtual machine or guest operating system is experiencing failures, including STOP errors, read-only file systems, and severe performance degradation during periods of high I/O.

vMotion Considerations

Note: Setting an alternative swapfile location might cause migrations with vMotion to complete more slowly. For best vMotion performance, store virtual machine swapfiles in the same directory as the virtual machine. If the swapfile location specified on the destination host differs from the one specified on the source host, the swapfile is copied to the new location, which slows the migration down. Copying host-local swap pages between the source and destination hosts is a disk-to-disk copy, which is one of the reasons vMotion takes longer when host-local swap is used.

Swapfile Moving Caveats

- If vCenter Server manages your host, you cannot change the swapfile location if you connect directly to the host by using the vSphere Client. You must connect to the vCenter Server system.

- On hosts running ESX Server 3.0.x, migrations with vMotion are not allowed unless the destination swapfile location is the same as the source swapfile location. In practice, this means that on such hosts virtual machine swapfiles must be located with the virtual machine configuration file.

- Using host-local swap can affect DRS load balancing and HA failover in certain situations. When designing an environment that uses host-local swap, pay particular attention to the following areas to preserve HA and DRS functionality.

DRS

If DRS decides to rebalance the cluster, it migrates virtual machines to less-utilized hosts. During the vMotion process, the VMkernel tries to create a new swapfile on the destination host. In some scenarios the destination host might not have any free space left on its VMFS datastore, and DRS will then be unable to migrate any virtual machine to that host. However, DRS still monitors that host's active CPU and active memory metrics when calculating the load standard deviation it uses for its recommendations. The lack of disk space on the local VMFS datastores therefore reduces the effectiveness of DRS and limits its options for balancing the cluster.
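The space check behind this can be sketched in a few lines. A VM's .vswp file is sized at its configured memory minus its memory reservation. The host names and free-space figures below are hypothetical, and real DRS also weighs CPU and memory load, affinity rules and more. The sketch only models the swap-space filter that removes hosts from DRS's options.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class VM:
    name: str
    configured_mb: int
    reservation_mb: int = 0


def swapfile_size_mb(vm: VM) -> int:
    # The .vswp file only has to back the unreserved part of the VM's memory.
    return max(vm.configured_mb - vm.reservation_mb, 0)


def vmotion_targets(vm: VM, local_free_mb: Dict[str, int]) -> List[str]:
    """Hosts whose host-local swap datastore can hold the VM's new swapfile.

    local_free_mb: host name -> free MB on its local swap datastore
    (hypothetical input).
    """
    need = swapfile_size_mb(vm)
    return sorted(h for h, free in local_free_mb.items() if free >= need)
```

With an 8 GB VM that has a 2 GB reservation, the swapfile needs 6144 MB. A host with only 4000 MB free drops out of the candidate list even if it is the least loaded host in the cluster.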

High availability failover

The same applies when an HA isolation response occurs: if not enough space is available to create the virtual machine swapfiles, no virtual machines are started on the host. If a host fails, its virtual machines will only be restarted on hosts that have enough free space on their local VMFS datastores. If not enough free disk space is available anywhere, the virtual machines might not power on at all.
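To see how a failover can leave VMs stranded, here is a simplified greedy placement in Python. This is not HA's real placement algorithm. It is an illustration that assumes local free space is the only constraint, which is the situation described above. Largest swapfiles are placed first on the host with the most free space. The VM and host names are hypothetical.

```python
from typing import Dict, List, Tuple


def plan_restarts(swap_needs_mb: Dict[str, int],
                  host_free_mb: Dict[str, int]) -> Tuple[Dict[str, str], List[str]]:
    """Place each failed VM on a surviving host whose local datastore can
    still hold its swapfile; return (placements, VMs that cannot start)."""
    free = dict(host_free_mb)  # work on a copy so the caller's data is untouched
    placed: Dict[str, str] = {}
    stranded: List[str] = []
    # Largest swapfiles first, so big VMs are not squeezed out by small ones.
    for vm, need in sorted(swap_needs_mb.items(), key=lambda kv: (-kv[1], kv[0])):
        # If the host with the most free space can't fit it, no host can.
        host = max(free, key=lambda h: free[h], default=None)
        if host is not None and free[host] >= need:
            placed[vm] = host
            free[host] -= need
        else:
            stranded.append(vm)
    return placed, stranded
```

For example, with 9000 MB free on esx02 and 3000 MB on esx03, db01 (8192 MB) and app01 (2048 MB) can restart, but web01 (4096 MB) cannot be placed anywhere.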

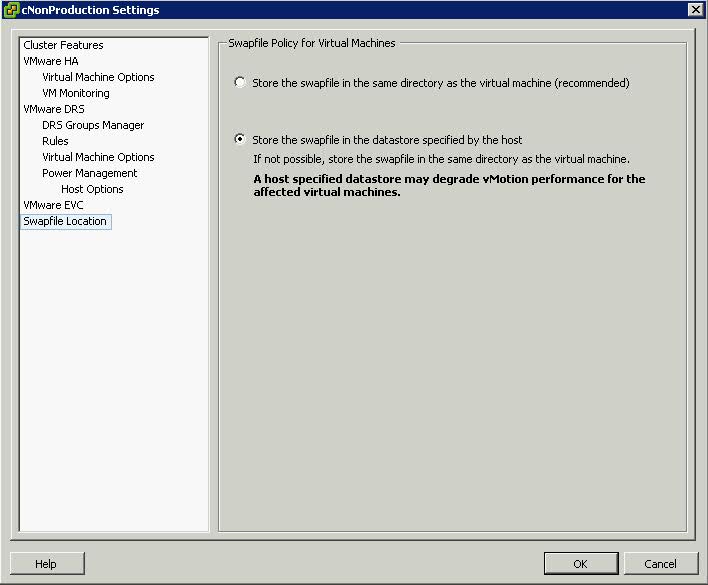

Procedure (Cluster Modification)

- Right click the cluster

- Edit Settings

- Click Swap File Location

- Select Store the Swapfile in the Datastore specified by the Host

Procedure (Host Modification)

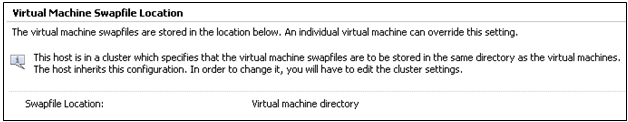

If the host is part of a cluster, and the cluster settings specify that swapfiles are to be stored in the same directory as the virtual machine, you cannot edit the swapfile location from the host configuration tab. To change the swapfile location for such a host, use the Cluster Settings dialog box.

- Click the Inventory button in the navigation bar, expand the inventory as needed, and click the appropriate managed host.

- Click the Configuration tab to display configuration information for the host.

- Click the Virtual Machine Swapfile Location link.

- The Configuration tab displays the selected swapfile location. If configuration of the swapfile location is not supported on the selected host, the tab indicates that the feature is not supported.

- Click Edit.

- Select either Store the swapfile in the same directory as the virtual machine or Store the swapfile in a swapfile datastore selected below.

- If you select Store the swapfile in a swapfile datastore selected below, select a datastore from the list.

- Click OK.

- The virtual machine swapfile is stored in the location you selected.
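The interaction between the cluster and host settings can be summarised in a small Python function. The policy strings "vmDirectory" and "hostLocal" are borrowed from the swap placement values in the vSphere API and are used here only as labels. The fallback to the VM directory when no host datastore is set reflects documented vSphere behaviour as I understand it. Treat that as an assumption.

```python
from typing import Optional


def effective_swap_location(cluster_policy: str, vm_dir: str,
                            host_swap_datastore: Optional[str]) -> str:
    """Where a VM's swapfile ends up under the two policies described above.

    cluster_policy: "vmDirectory" (same directory as the VM) or
    "hostLocal" (datastore specified by the host); labels are assumptions.
    """
    if cluster_policy == "vmDirectory":
        # The host-level setting cannot override this cluster setting.
        return vm_dir
    if cluster_policy == "hostLocal":
        # Assumed fallback: no host swap datastore set -> VM directory.
        return host_swap_datastore or vm_dir
    raise ValueError(f"unknown policy: {cluster_policy!r}")
```

This is why the host's Edit option is greyed out while the cluster is set to store swapfiles with the VM. The host value is simply never consulted.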

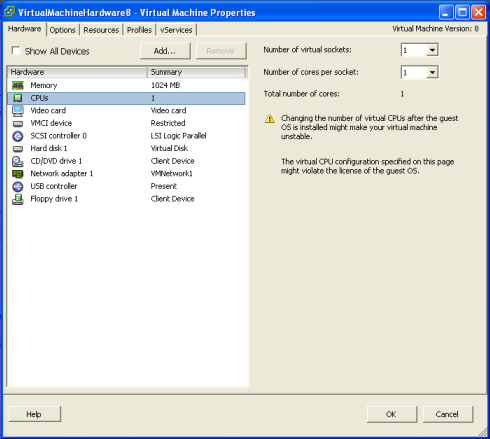

New CPU Features in vSphere 5

VMware multicore virtual CPU support lets you control the number of cores per virtual socket in a virtual machine. This capability lets operating systems with socket restrictions use more of the host CPU's cores, which can increase overall performance.

You can configure how the virtual CPUs are assigned in terms of sockets and cores. For example, you can configure a virtual machine with four virtual CPUs in the following ways:

- Four sockets with one core per socket

- Two sockets with two cores per socket

- One socket with four cores per socket

Using multicore virtual CPUs can be useful when you run operating systems or applications that can take advantage of only a limited number of CPU sockets. Previously, each virtual CPU was, by default, assigned to a single-core socket, so that the virtual machine would have as many sockets as virtual CPUs. When you configure multicore virtual CPUs for a virtual machine, CPU hot add/remove is disabled.
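The valid layouts are just the factor pairs of the vCPU count, which this short Python sketch enumerates. The socket-limit filter models a hypothetical OS or licence that only recognises a fixed number of sockets. It does not check host or edition limits.

```python
from typing import List, Tuple


def socket_core_layouts(vcpus: int) -> List[Tuple[int, int]]:
    """All (sockets, cores_per_socket) pairs whose product is vcpus."""
    if vcpus < 1:
        raise ValueError("vcpus must be positive")
    return [(vcpus // cores, cores)
            for cores in range(1, vcpus + 1) if vcpus % cores == 0]


def layouts_within_socket_limit(vcpus: int, max_sockets: int) -> List[Tuple[int, int]]:
    # Keep only layouts a socket-restricted guest OS could fully use.
    return [(s, c) for s, c in socket_core_layouts(vcpus) if s <= max_sockets]
```

For four vCPUs this returns the three layouts listed above: (4, 1), (2, 2) and (1, 4). A guest limited to two sockets can use (2, 2) or (1, 4).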

The ESXi CPU scheduler can interpret processor topology, including the relationship between sockets, cores, and logical processors. The scheduler uses this topology information to schedule virtual machines and optimize performance: it places virtual CPUs onto different sockets to maximize overall cache utilization, and improves cache affinity by minimizing virtual CPU migrations.

In undercommitted systems, the ESXi CPU scheduler spreads load across all sockets by default. This improves performance by maximizing the aggregate amount of cache available to the running virtual CPUs. As a result, the virtual CPUs of a single SMP virtual machine are spread across multiple sockets (unless each socket is also a NUMA node, in which case the NUMA scheduler restricts all the virtual CPUs of the virtual machine to reside on the same socket).

Understanding vSphere 5 High Availability

On the surface, vSphere HA in vSphere 5 works very much like vSphere HA in vSphere 4. Under the hood, however, HA now uses a new VMware-developed agent called FDM (Fault Domain Manager), which replaces AAM (Automated Availability Manager).

Limitations of AAM

- Strong dependency on name resolution (DNS)

- Scalability limits

Advantages of FDM over AAM

- FDM uses a master/slave architecture that does not rely on primary/secondary host designations

- As of 5.0, HA is no longer dependent on DNS, as it works with IP addresses only.

- FDM uses both the management network and the storage devices for communication

- FDM introduces support for IPv6

- FDM addresses the issues of both network partition and network isolation

- Faster installation of the HA agent when HA is configured

FDM Agents

FDM uses the concept of an agent that runs on each ESXi host. This agent is separate from the vCenter management agent (vpxa) that vCenter uses to communicate with the ESXi hosts.

The FDM agent is installed on the ESXi hosts in /opt/vmware/fdm and stores its configuration files in /etc/opt/vmware/fdm.

How FDM works

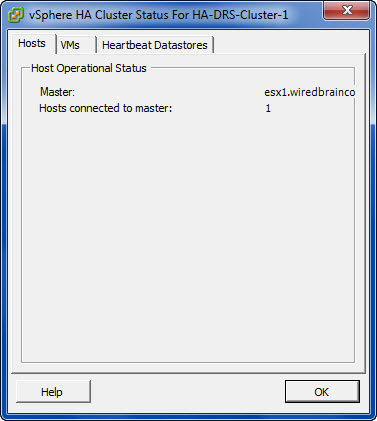

- When vSphere HA is enabled, the vSphere HA agents participate in an election to choose a vSphere HA master. The vSphere HA master is responsible for a number of key tasks within a vSphere HA-enabled cluster.


- The Master monitors Slave hosts and will restart VMs in the event of a slave host failure

- The vSphere HA Master monitors the power state of all protected VMs. If a protected VM fails, it will restart the VM

- The Master manages the tasks of adding and removing hosts from the cluster

- The Master manages the list of protected VMs.

- The Master caches the cluster configuration and notifies the slaves of any changes to the cluster configuration

- The Master sends heartbeat messages to the Slave Hosts so they know that the Master is alive

- The Master reports state information to vCenter Server.

- If the existing master fails, a new HA master is automatically elected. If the master goes down and a slave is promoted, does the original master become the master again when it comes back up? No. It rejoins the cluster as a slave.
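The post doesn't spell out how the election picks a winner. The rule commonly documented for vSphere 5 HA is that the host connected to the most datastores wins, and ties go to the host with the lexically highest managed object ID. The Python sketch below encodes that rule, plus the "no re-election while the master is alive" behaviour described above. Treat the election criteria as an assumption, and the host IDs as hypothetical.

```python
from typing import Dict


def elect_master(datastore_counts: Dict[str, int]) -> str:
    """Pick a master from host MoID -> number of connected datastores.

    Assumed rule: most datastores wins; ties go to the lexically highest
    MoID, so "host-99" beats "host-100".
    """
    if not datastore_counts:
        raise ValueError("no hosts are taking part in the election")
    return max(datastore_counts, key=lambda moid: (datastore_counts[moid], moid))


def master_after_change(current_master: str, alive: Dict[str, int]) -> str:
    """Keep a live master; only hold an election when the master is gone.

    A former master that comes back simply joins as a slave.
    """
    if current_master in alive:
        return current_master
    return elect_master(alive)
```

Comparing the IDs as strings rather than numbers is deliberate here. It is what makes "host-99" outrank "host-100" in a tie.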

Enhancements to the User Interface

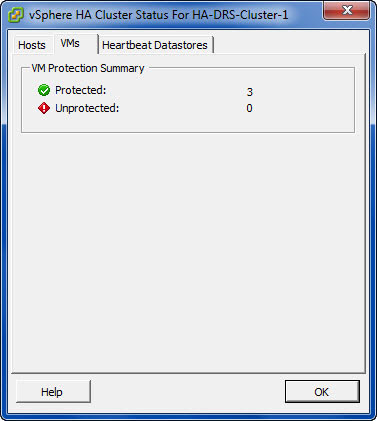

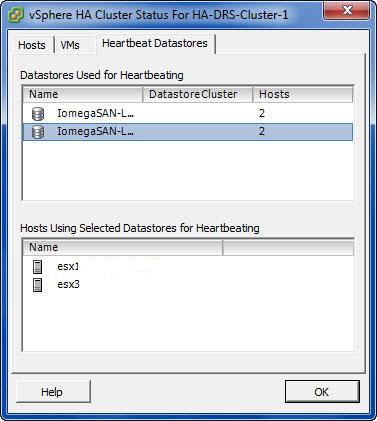

3 tabs in the Cluster Status

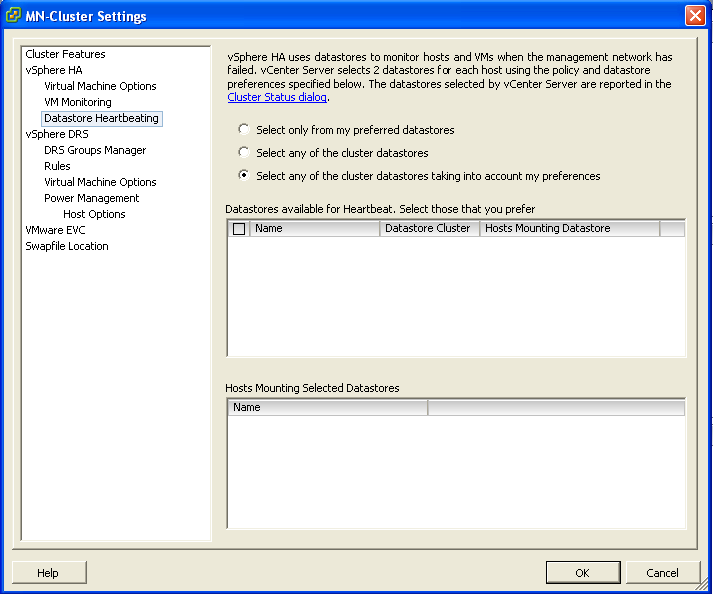

Cluster Settings showing the new datastores for heartbeating

How does it work in the event of a problem?

Previously, virtual machine restarts were always initiated, even if only the management network of the host was isolated and the virtual machines were still running. This added an unnecessary level of stress to the host. vSphere 5 mitigates this with the new datastore heartbeating mechanism. Datastore heartbeating adds a new level of resiliency and allows HA to distinguish between a failed host and an isolated or partitioned host. You must now have at least two shared datastores available to all hosts in the HA cluster.

Network Partitioning

Network partitioning describes a situation where one or more slave hosts cannot communicate with the master even though they still have network connectivity. In this case HA can check the heartbeat datastores to detect whether the hosts are alive and whether action needs to be taken.

Network Isolation

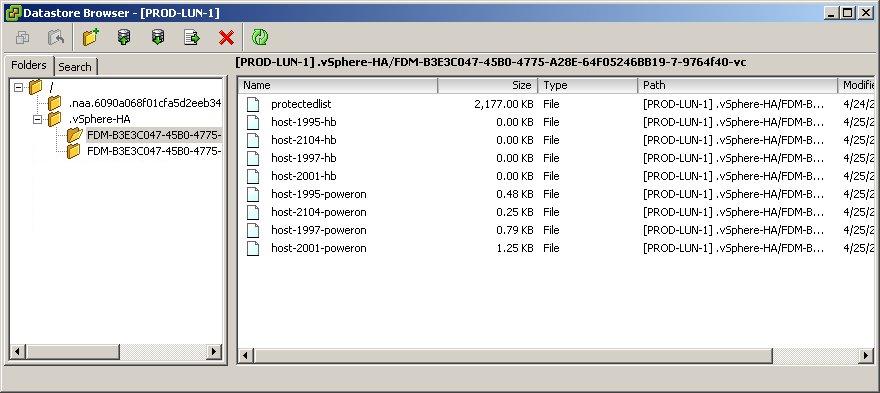

This situation involves one or more slave hosts losing all management network connectivity. Isolated hosts can communicate neither with the vSphere HA master nor with other ESXi hosts. In this case the slave host uses the heartbeat datastores to notify the master that it is isolated, through a special binary file, the host-X-poweron file. The vSphere HA master can then take appropriate action to ensure the VMs are protected.

- If a master cannot communicate with a slave across the management network, or a slave cannot communicate with a master, the first thing the host tries to do is contact the isolation address, which by default is the gateway of the management network.

- If it can't reach the isolation address, it considers itself isolated.

- At this point, an ESXi host that has determined it is network isolated modifies a special bit in the binary host-X-poweron file, which is found on all datastores selected for datastore heartbeating.

- The master sees this bit, which denotes isolation, and is therefore notified that the slave host has been isolated.

- The Master then locks another file used by HA on the Heartbeat Datastore

- When the isolated node sees that this file has been locked by a master, it knows that the master is responsible for restarting the VMs

- The isolated host is then free to carry out the configured isolation response which only happens when the isolated slave has confirmed via datastore heartbeating infrastructures that the Master has assumed responsibility for restarting the VMs.
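The decision logic in these steps can be condensed into a small Python model. It is deliberately simplified, not FDM's actual code. From the master's point of view a slave is live (network heartbeats), isolated (datastore heartbeats plus the isolation bit in its poweron file), partitioned (datastore heartbeats without the bit) or failed (neither). The isolated host only applies its isolation response once it sees the master's lock.

```python
from enum import Enum


class HostState(Enum):
    LIVE = "live"
    PARTITIONED = "partitioned"
    ISOLATED = "isolated"
    FAILED = "failed"


def classify_slave(network_heartbeat: bool, datastore_heartbeat: bool,
                   isolation_bit_set: bool) -> HostState:
    """Simplified model of how the master reads a slave's signals."""
    if network_heartbeat:
        return HostState.LIVE
    if not datastore_heartbeat:
        # Silent on both the network and the heartbeat datastores: treat as failed.
        return HostState.FAILED
    if isolation_bit_set:
        # The slave flagged isolation in its host-X-poweron file.
        return HostState.ISOLATED
    # Alive on storage but unreachable and not isolated: the other side of a partition.
    return HostState.PARTITIONED


def may_apply_isolation_response(isolated: bool, master_locked_file: bool) -> bool:
    """The isolated host only acts once it sees the master has locked the HA file."""
    return isolated and master_locked_file
```

Only the FAILED and ISOLATED cases lead the master to restart VMs. A partitioned host is still running its VMs, which is exactly the distinction datastore heartbeating was introduced to make.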

Isolation Responses

I won't go into these in detail here, but they are listed below:

- Shutdown

- Restart

- Leave Powered On

Should you change the default Host Isolation Response?

It is highly dependent on the virtual and physical networks in place.

If you have multiple uplinks, vSwitches and physical switches, it is likely that only one part of the network will go down at once. In this case use the Leave Powered On setting, as it's unlikely that a network isolation event would also leave the VMs on the host inaccessible.

Customising the Isolation response address

You can customise the isolation address through the cluster's advanced options:

- Connect to vCenter

- Right-click the cluster and select Edit Settings

- Click the vSphere HA node

- Click Advanced Options

- Enter one or more of the options below

- das.isolationaddress1, which specifies an additional isolation address to test, such as a second gateway

- das.isolationaddress2, which specifies a further isolation address

- das.allowNetworkX (for example das.allowNetwork1), which specifies a different port group for HA to use
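Adding isolation addresses makes a false isolation less likely because a host should only declare itself isolated when none of them answer. This minimal Python sketch builds the address list and applies that rule. The `reachable` callable stands in for a ping check and is hypothetical. The `use_default` flag mirrors the das.usedefaultisolationaddress option, which isn't covered above. Treat its use here as an illustration.

```python
from typing import Callable, Iterable, List


def isolation_addresses(default_gateway: str, extra: Iterable[str],
                        use_default: bool = True) -> List[str]:
    """Addresses a host would test: the gateway plus das.isolationaddressX entries."""
    addrs: List[str] = [default_gateway] if use_default else []
    for address in extra:
        if address not in addrs:  # ignore duplicates of the gateway
            addrs.append(address)
    return addrs


def is_isolated(addresses: Iterable[str], reachable: Callable[[str], bool]) -> bool:
    """Isolated only if there is something to test and *none* of it answers."""
    addrs = list(addresses)
    return bool(addrs) and not any(reachable(a) for a in addrs)
```

With a second gateway configured, losing the default gateway alone no longer triggers an isolation response as long as the second address still replies.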

DFS – Enable Access-Based Enumeration on a Namespace

Applies To: Windows Server 2008

Access-based enumeration hides files and folders that users do not have permission to access. By default, this feature is not enabled for DFS namespaces. You can enable access-based enumeration of DFS folders by using the Dfsutil command, enabling you to hide DFS folders from groups or users that you specify. To control access-based enumeration of files and folders in folder targets, you must enable access-based enumeration on each shared folder by using Share and Storage Management.

Caution

Access-based enumeration does not prevent users from getting a referral to a folder target if they already know the DFS path. Only the share permissions or the NTFS file system permissions of the folder target (shared folder) itself can prevent users from accessing a folder target. DFS folder permissions are used only for displaying or hiding DFS folders, not for controlling access, which makes Read the only relevant permission at the DFS folder level.
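The split between "can see" and "can open" is easy to lose track of, so here it is as two separate Python checks. The group names are hypothetical, and real Windows ACL evaluation (deny entries, nested groups) is far richer. The point is only that the two decisions use different ACLs.

```python
from typing import Dict, List, Set


def visible_folders(folder_read_acl: Dict[str, Set[str]], user_groups: Set[str]) -> List[str]:
    """DFS folders a user sees when ABE is on: those granting Read to one of their groups."""
    return sorted(f for f, readers in folder_read_acl.items() if readers & user_groups)


def can_open_target(target_acl: Set[str], user_groups: Set[str]) -> bool:
    """Access is decided by the share/NTFS ACL on the folder target, not the DFS folder ACL."""
    return bool(target_acl & user_groups)
```

A user who is not in Trainers won't see the training folder, but if the underlying share still grants Domain Users access, typing the path directly still works.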

In some environments, enabling access-based enumeration can cause high CPU utilization on the server and slow response times for users.

Requirements

To enable access-based enumeration on a namespace, all namespace servers must be running at least Windows Server 2008. Additionally, domain-based namespaces must use the Windows Server 2008 mode.
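As a quick pre-flight check, the two requirements can be expressed in Python. Server OS versions are represented by release year (2003, 2008, treating 2008 R2 as 2008), and the mode labels "2000" and "2008" are hypothetical stand-ins for the two domain-based namespace modes.

```python
from typing import Iterable, Optional


def abe_supported(server_years: Iterable[int], domain_based: bool,
                  namespace_mode: Optional[str] = None) -> bool:
    """Check the ABE prerequisites listed above.

    server_years: OS release year of each namespace server, e.g. 2003 or 2008.
    namespace_mode: "2000" or "2008" for domain-based namespaces (hypothetical labels).
    """
    years = list(server_years)
    if not years or min(years) < 2008:
        return False  # every namespace server must run Windows Server 2008 or later
    return (not domain_based) or namespace_mode == "2008"
```

A single remaining Windows Server 2003 namespace server is enough to block the feature.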

To use access-based enumeration with DFS Namespaces to control which groups or users can view which DFS folders, you must follow these steps:

- Enable access-based enumeration on a namespace.

- Control which users and groups can view individual DFS folders.

Method

To enable access-based enumeration on a namespace by using Windows Server 2008, you must use the Dfsutil command.

- Open an elevated command prompt window on a server that has the Distributed File System role service or Distributed File System Tools feature installed.

- Type the following command, where <namespace_root> is the root of the namespace:

dfsutil property abde enable \\<namespace_root>

For example, to enable access-based enumeration on the domain-based namespace \\contoso.office\public type the following command:

dfsutil property abde enable \\contoso.office\public

Controlling which users and groups can view individual DFS folders

By default, the permissions used for a DFS folder are inherited from the local file system of the namespace server. The permissions are inherited from the root directory of the system drive and grant the DOMAIN\Users group Read permissions. As a result, even after enabling access-based enumeration, all folders in the namespace remain visible to all domain users.

To limit which groups or users can view a DFS folder, you must use the Dfsutil command to set explicit permissions on each DFS folder:

dfsutil property acl grant <DFS_path> DOMAIN\Account:R (…) Protect Replace

For example, to block inherited permissions (by using the Protect parameter) and replace previously defined ACEs (by using the Replace parameter) with permissions that allow the Domain Admins and CONTOSO\Trainers groups Read (R) access to the \\contoso.office\public\training folder, type the following command:

dfsutil property acl grant \\contoso.office\public\training "CONTOSO\Domain Admins":R CONTOSO\Trainers:R Protect Replace
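If you have many folders to lock down, it can help to generate these commands rather than type them. The Python sketch below builds the argument list and a copy-paste command line, quoting account names that contain spaces as in the example above. The helper names are hypothetical, and nothing here runs dfsutil.

```python
from typing import Dict, List


def dfsutil_acl_grant(dfs_path: str, grants: Dict[str, str],
                      protect: bool = True, replace: bool = True) -> List[str]:
    """Build the arguments for `dfsutil property acl grant`.

    grants maps an account (DOMAIN\\Account) to a permission letter; only R
    (Read) matters at the DFS folder level.
    """
    args = ["dfsutil", "property", "acl", "grant", dfs_path]
    for account, perm in grants.items():
        args.append(f"{account}:{perm}")
    if protect:
        args.append("Protect")  # block inherited permissions
    if replace:
        args.append("Replace")  # replace previously defined ACEs
    return args


def to_cmd_line(args: List[str]) -> str:
    """Render for a command prompt, quoting the account part when it has spaces."""
    out = []
    for arg in args:
        if " " in arg and ":" in arg:
            account, perm = arg.rsplit(":", 1)
            out.append(f'"{account}":{perm}')
        elif " " in arg:
            out.append(f'"{arg}"')
        else:
            out.append(arg)
    return " ".join(out)
```

If you pass the list to `subprocess.run` on a Windows host instead, Python applies its own quoting, so `to_cmd_line` is only meant for display or pasting into a prompt.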

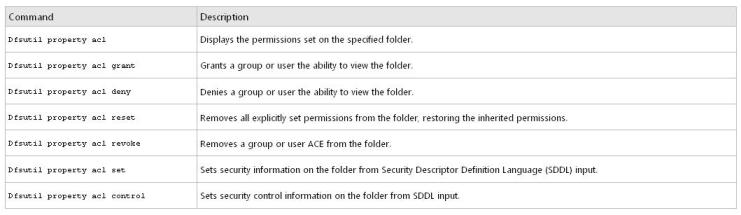

Permission table

DFS Replication

What is DFS Replication?

DFS Replication is a multimaster replication engine that supports replication scheduling and bandwidth throttling. DFS Replication uses a compression algorithm called Remote Differential Compression (RDC), which can efficiently update files over a limited-bandwidth network. RDC detects insertions, removals and rearrangements of data in files, enabling DFS Replication to replicate only the changes when files are updated. Another important feature of DFS Replication is that, when choosing replication paths, it leverages the site links configured in Active Directory Sites and Services. DFS Replication replaced FRS (File Replication Service).
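To show why detecting insertions matters, here is a simplified content-defined chunking sketch in Python. This is not Microsoft's RDC algorithm, which uses its own rolling hash, signatures and recursion. It only illustrates the shared idea. Chunk boundaries are chosen from the content itself (a rolling sum over the last few bytes), so inserting data only changes the chunks around the edit. Only those chunks need to cross the WAN. The window and mask values are arbitrary choices for the example.

```python
import hashlib
from typing import List, Set


def chunks(data: bytes, window: int = 16, mask: int = 0x3F) -> List[bytes]:
    """Split data where a rolling sum of the last `window` bytes matches a
    boundary pattern, so boundaries follow content rather than offsets."""
    out: List[bytes] = []
    start = 0
    rolling = 0
    for i, b in enumerate(data):
        rolling += b
        if i >= window:
            rolling -= data[i - window]  # keep the sum over the last `window` bytes
        if i + 1 - start >= window and (rolling & mask) == 0:
            out.append(data[start:i + 1])
            start = i + 1
    if start < len(data):
        out.append(data[start:])
    return out


def chunks_to_send(old: bytes, new: bytes) -> List[bytes]:
    """Chunks of `new` whose digest the receiver (which holds `old`) lacks."""
    have: Set[str] = {hashlib.sha256(c).hexdigest() for c in chunks(old)}
    return [c for c in chunks(new) if hashlib.sha256(c).hexdigest() not in have]
```

With fixed-size blocks, inserting a few bytes in the middle of a file would shift every later block and force almost half the file to be resent. With content-defined boundaries the later chunks realign and are recognised as already present.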

Configuration

As an example, let's use DFS Replication to replicate the contents of a share called Invoices from Server1 to Server2. That way, should the share on Server1 somehow become unavailable, users will still be able to access its content using Server2. Every file server that needs to participate in replicating DFS content must have the DFS Replication Service installed and running.

Simply create a second Invoices share on Server2, replicate the contents of \\Server1\Invoices to \\Server2\Invoices, and add \\Server2\Invoices to the list of folder targets for the \\domain\Namespace\Invoices folder in the namespace. That way if a client tries to access a file named Sample.doc found in \\domain\Namespace\Invoices on Server1 but Server1 is down, it can access the copy of the file on Server2.

- To accomplish this, the first thing you need to do is install the DFS Replication component if you haven’t already done so.

- Create a new folder named C:\Invoices on Server2 and share it with Full Control permission for Everyone (this does not make the folder insecure, because NTFS permissions, not shared folder permissions, are what really secure resources).

- Next, add \\Server2\Invoices as a second folder target for \\Domain\Namespace\Invoices. Open the DFS Management console and select the following node in the console tree: DFS Management, Namespaces, \\r2.local\Accounting, Billing, Invoices.

- Right-click the Invoices folder in the console tree and select Add Folder Target. Then specify the path to the new target: \\Server2\Invoices

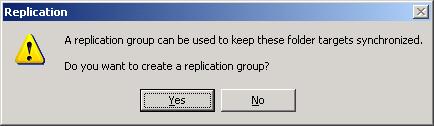

- Once the second target is added, you'll be prompted to create a replication group.

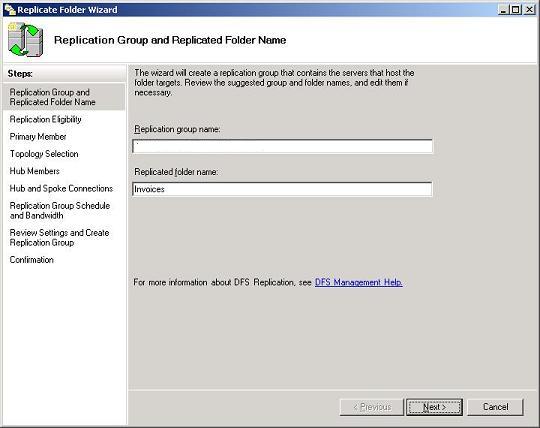

- A replication group is a collection of file servers that participate in the replication of one or more folders in a namespace. In other words, if we want to replicate the contents of \\Server1\Invoices with \\Server2\Invoices, then Server1 and Server2 must first be added to a replication group. Replication groups can be created manually by right-clicking the DFS Replication node in the DFS Management console, but here it's easier to create one on the fly by clicking Yes in this dialog box. This opens the Replicate Folder Wizard, an easy-to-use method for replicating DFS content on R2 file servers.

Next steps of the wizard

- Replication Eligibility. Displays which folder targets can participate in replication for the selected folder (Invoices). Here the wizard displays \\Server1\Invoices and \\Server2\Invoices as expected.

- Primary Member. Makes sure the DFS Replication Service is started on the servers where the folder targets reside. One server is initially the primary member of the replication group, but once the group is established all subsequent replication is multimaster. We'll choose Server1 as the primary member since the file Sample.doc resides in the Invoices share on that server (the Invoices share on Server2 is initially empty).

- Topology Selection. Here you can choose full mesh, hub and spoke, or a custom topology you specify later.

- Replication Group Schedule and Bandwidth. Lets you replicate the content continuously up to a maximum specified bandwidth or define a schedule for replication (we’ll choose the first option, continuous replication).
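The topology choice mostly determines how many replication connections the group gets. This Python sketch counts them. It assumes each connection is one-way (sending member to receiving member), so each replicating pair needs two. The member names are hypothetical.

```python
from itertools import permutations
from typing import List, Tuple

Connection = Tuple[str, str]  # (sending member, receiving member)


def full_mesh(members: List[str]) -> List[Connection]:
    # Every member replicates directly with every other member, in both directions.
    return list(permutations(members, 2))


def hub_and_spoke(hub: str, spokes: List[str]) -> List[Connection]:
    # Spokes only replicate with the hub; changes between spokes travel via the hub.
    conns: List[Connection] = []
    for spoke in spokes:
        conns.append((hub, spoke))
        conns.append((spoke, hub))
    return conns
```

For the two-server Invoices example both topologies give the same two connections. With five members, a full mesh needs 20 connections, while a hub with four spokes needs only 8. That is why hub and spoke tends to suit larger branch-office layouts.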

Storage vMotion fails with the error: Storage vMotion failed to copy one or more of the VM’s disks

The Error

A general system error occurred: Storage vMotion failed to copy one or more of the VM’s disks. Please consult the VM’s log for more details, looking for lines starting with “CBTMotion”.
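Before applying the fix, it's worth pulling the relevant lines out of the VM's vmware.log as the error suggests. Log lines normally start with a timestamp, so this Python sketch matches "CBTMotion" anywhere in the line rather than only at the very start. The log path you pass in is up to you (for example, a copy downloaded from the datastore).

```python
from pathlib import Path
from typing import List


def cbtmotion_lines(log_text: str) -> List[str]:
    """Return log lines that mention CBTMotion."""
    return [line for line in log_text.splitlines() if "CBTMotion" in line]


def cbtmotion_lines_from_file(path: str) -> List[str]:
    # errors="replace" keeps the scan going if the log contains odd bytes.
    return cbtmotion_lines(Path(path).read_text(encoding="utf-8", errors="replace"))
```

Applied to a made-up two-entry sample such as `"...| vmx| CBTMotion: copy failed"` followed by an unrelated line, only the first line is returned.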

Resolution

- In the vSphere Client, right-click the virtual machine and click Snapshot > Take Snapshot.

- In the vSphere Client right-click the virtual machine and click Snapshot > Snapshot Manager.

- Select the snapshot you created in the first step and click Delete.

Configure a DFS NameSpace on Windows Server 2008

The DFS Management snap-in is the graphical user interface (GUI) tool for managing DFS Namespaces and DFS Replication. This snap-in is new and differs from the Distributed File System snap-in in Windows Server 2003.

The DFS NameSpace will be the client facing aspect of DFS and what really makes life easier for the end users. Having a common namespace across your enterprise for the users to share files will cut down on support calls and make collaboration on documents a breeze.

Configuring DFS

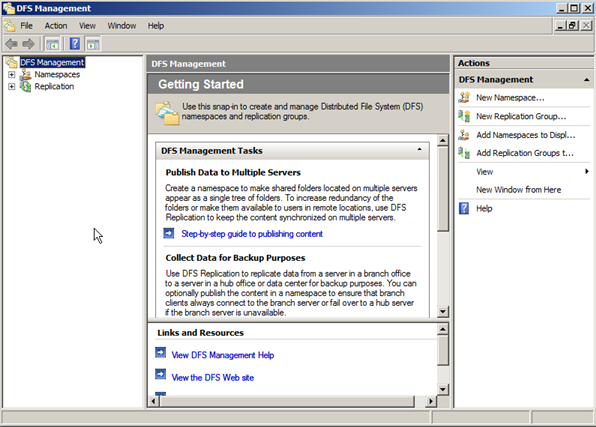

- Click Start, point to All Programs, point to Administrative Tools, and then click DFS Management.

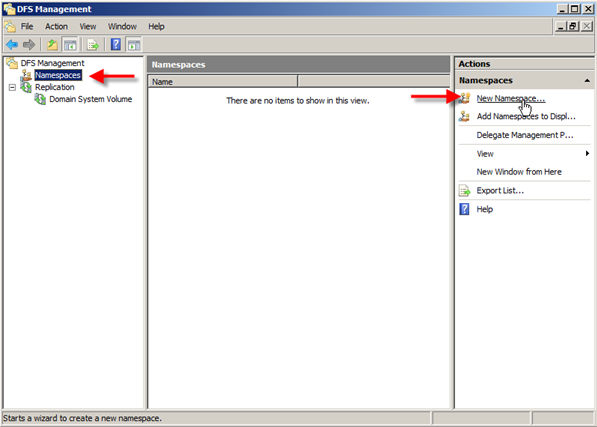

- In the left pane click on Namespaces and then in the right column click New Namespace

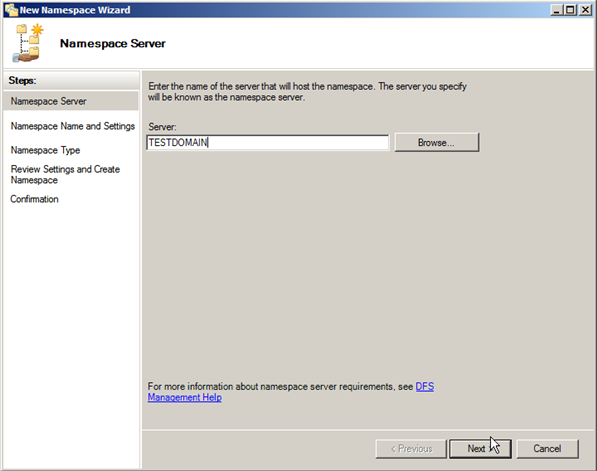

- The New Namespace Wizard first asks for the server that will host the namespace. In this case it is the server you installed DFS on, so enter TESTDOMAIN as the server name.

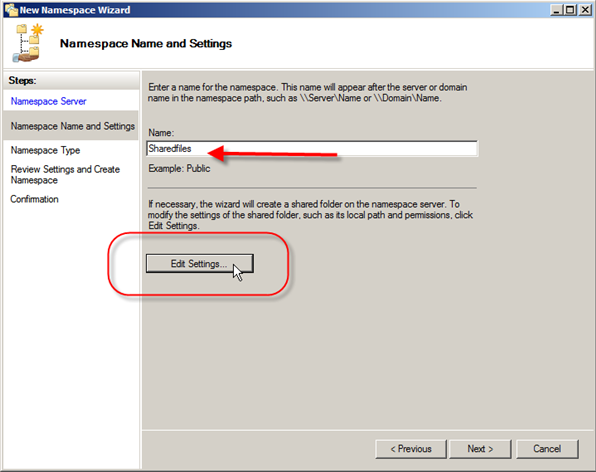

- The next window is Namespace Name and Settings, which asks for the name of the namespace. Depending on whether this is a stand-alone or a domain-based namespace, this name follows the server name or the domain name. In this case I am going to type the namespace Sharedfiles.

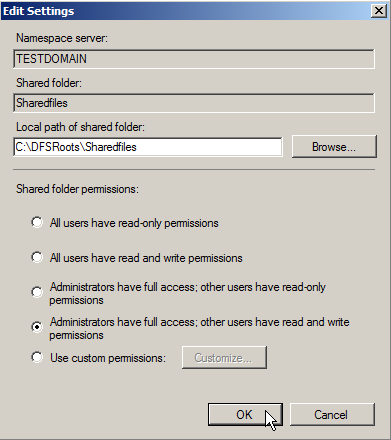

- Notice that when you type in the name, the Edit Settings button becomes available. This is because the wizard will create the shared folder. You can modify the settings it uses at this time by clicking Edit Settings.

- You can now edit the following settings: Local path of shared folder, and Shared folder permissions. I am going to go with Administrators have full access; other users have read and write permissions. If you select Custom, you can choose specific groups and users and give them specific rights. Click OK when you are done choosing permissions, then click Next.

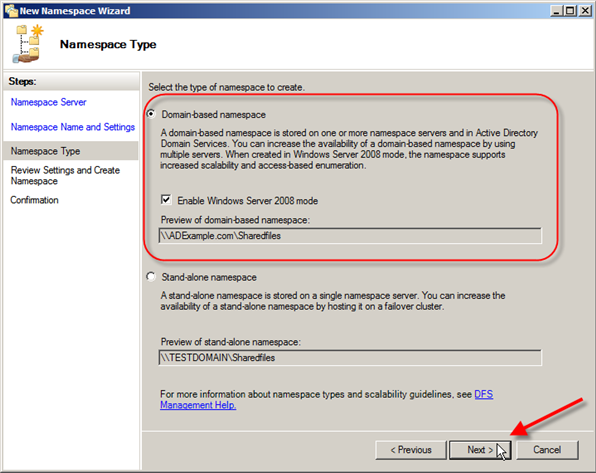

- Next is Namespace Type. There are two choices: Domain-based namespace or Stand-alone namespace. There are some big differences between the two, so let's take a quick look at them now:

- Domain-based namespace: stored on one or more namespace servers and in Active Directory Domain Services. Offers increased scalability and access-based enumeration when used in Windows Server 2008 mode

- Stand-alone namespace: stored on only a single namespace server; for redundancy, you have to use a failover cluster

The Windows Server 2008 mode includes support for access-based enumeration and increased scalability. The domain-based namespace introduced in Windows 2000 Server is now referred to as “domain-based namespace (Windows 2000 Server mode).”

To use the Windows Server 2008 mode, the domain and namespace must meet the following minimum requirements:

- The domain uses the Windows Server 2008 domain functional level.

- All namespace servers are running Windows Server 2008.

- Choose Domain-based namespace in Windows Server 2008 mode and you can see the preview is going to be \\ADExample.com\Sharedfiles, once your choice is made click on Next.

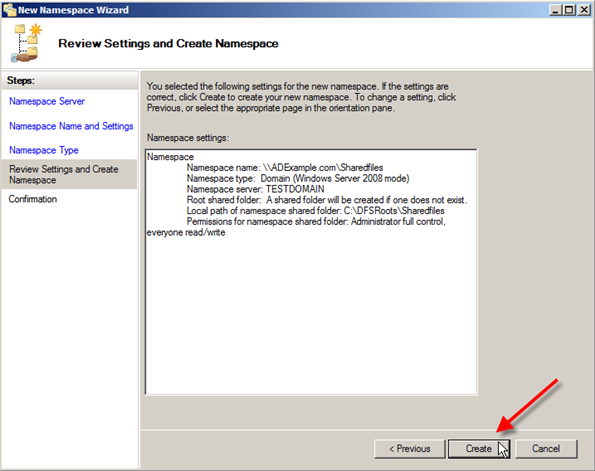

- The next screen lets you review the choices you just made; if they are correct, click Create.

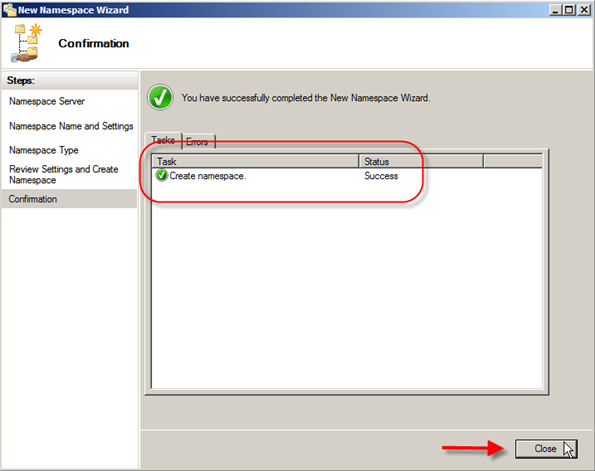

- Next you will see a screen telling you that the namespace is being created. After a few minutes you should see the status of Success, and then click Ok.

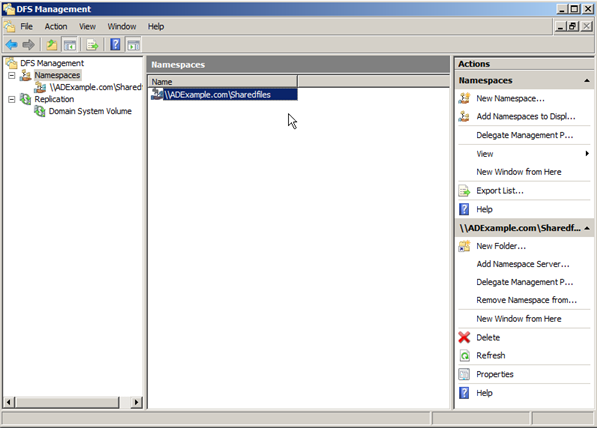

- Now in DFS Management Snap-in you can see the Namespace we just created.

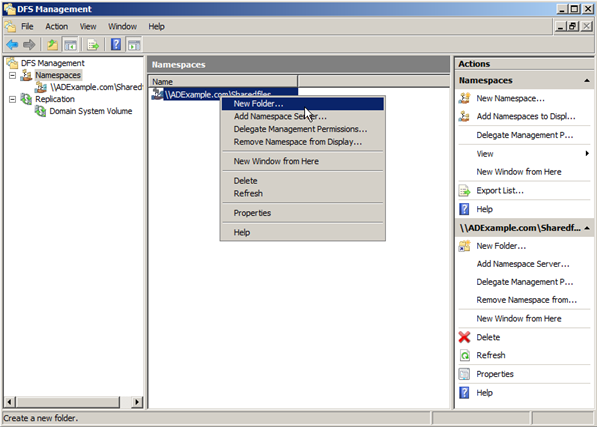

- Next try creating a folder. Right click on the namespace and click New Folder.

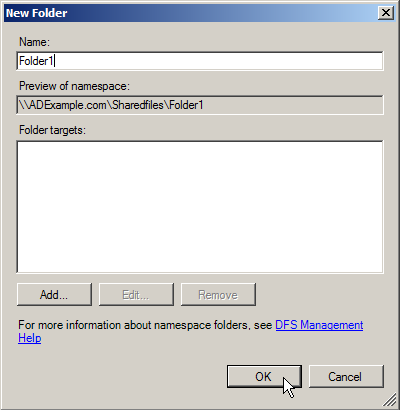

- Now type the name of the folder you want. In this case I am going to be very original and type Folder1, but hopefully you will use something more descriptive when the time comes. Below the Name field you will see a preview of the namespace path with this new folder. Under that you will see Folder Targets, which lets you point this folder at a shared folder already on your network, so you don't have to migrate files over. Be warned, though: if you set up these folder targets there is no replication, so if that share goes down for any reason, users will not be able to access that data. Go ahead and click OK.

- You will now see in the DFS Management Snap-in Folder1 under the namespace we just created.

Adding another Namespace Server

This has several advantages:

- If one namespace server hosting the namespace goes down, the namespace will still be available to users who need to access shared resources on your network. Adding another namespace server thus increases the availability of your namespace.

- If you have a namespace that must be available to users all across your organization but your Active Directory network has more than one site, then each site should have a namespace server hosting your namespace. That way, when users in a site need to contact a namespace server for referrals, they can do so locally instead of sending traffic requests to other sites. This improves performance and reduces unnecessary WAN traffic.

Instructions

- First, install DFS on a second server. Select DFS Replication as well if you need it.

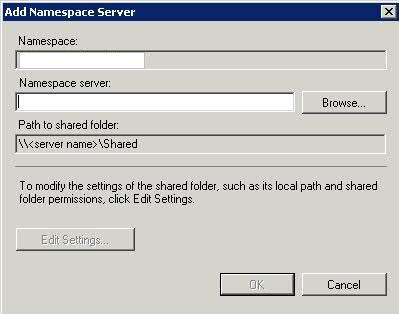

- Go back to your first DFS Server and click on Add Namespace Server

- Choose your second Namespace server

- Note that a folder named Shared (or whatever you created already) will now automatically be created on your second server and shared with the appropriate permissions (Read permission for Everyone). You can override this default behavior if you like by clicking Edit Settings.

- Now you have two namespace servers defined for your namespace.

- The question is, when a user in one department tries to access the namespace, which namespace server will they use? This brings us to the next topic: referrals.

Referrals

By default, DFS tries to connect a client with a target in the client’s own site first whenever possible to prevent the client from having to use a WAN link to access the resource. Furthermore, DFS also tries to randomly load-balance such access when there are multiple targets available in the client’s site.

- Click on the root then click Namespace Servers in the Details pane.

- Right click on the entry here and select Properties > Advanced

- Tick Override referral ordering and select First among all targets for the server you want to be the priority DFS server

Note that adding namespace servers is only supported for domain-based namespaces, not stand-alone namespaces.
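The default ordering and the override can be modelled in a few lines of Python. This is a simplified sketch. Same-site targets come first and are shuffled to spread load, and the "First among all targets" override pins one server to the top. Out-of-site targets are simply shuffled here, whereas in practice the namespace's ordering method (for example, by site cost) decides their order. The server and site names are hypothetical.

```python
import random
from typing import List, Optional, Tuple


def order_referral(targets: List[Tuple[str, str]], client_site: str,
                   first_among_all: Optional[str] = None,
                   rng: Optional[random.Random] = None) -> List[str]:
    """Simplified referral ordering for (server, AD site) targets."""
    rng = rng or random.Random()
    local = [s for s, site in targets if site == client_site]
    remote = [s for s, site in targets if site != client_site]
    rng.shuffle(local)   # load-balance among targets in the client's own site
    rng.shuffle(remote)  # stand-in for the real out-of-site ordering method
    ordered = local + remote
    if first_among_all in ordered:
        # Models "Override referral ordering" -> "First among all targets".
        ordered.remove(first_among_all)
        ordered.insert(0, first_among_all)
    return ordered
```

A London client is therefore always handed the London servers first unless you have pinned another server with the override.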

Finally, if your WAN links are unreliable, you might find your clients frequently accessing different targets for the same folder. This can be a problem because, by default, DFS caches referrals for a period of time (300 seconds, or 5 minutes). If a target server suddenly goes down, the client keeps trying to connect to that target and reports an error instead of accessing the resource from a different target. Eventually (by default after 300 seconds) the referral expires in the client's cache, a new referral is obtained to a target that is online, and the client can access the desired resource. In the meantime, however, the user may grow frustrated because (a) they have to wait for the referral to expire, and (b) after it expires, the new referral may direct the client to a remote target over the WAN link, which is not optimal. To avoid clients being left on such non-optimal targets, you can configure client failback on the namespace (or on specific folders in your namespace) so that when the failed target comes back online, the client fails back to it as its preferred target.
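The effect of client failback can be sketched as a choice between "stay where you are" and "go back to the best target". This Python model is simplified. It assumes the referral is already ordered best-first, and `online` is a hypothetical availability check. The TTL helper uses the 300-second default quoted above.

```python
from typing import Callable, List, Optional

REFERRAL_TTL_SECONDS = 300  # default referral cache time quoted above


def referral_expired(fetched_at: float, now: float,
                     ttl: float = REFERRAL_TTL_SECONDS) -> bool:
    return now - fetched_at >= ttl


def choose_target(ordered: List[str], current: Optional[str],
                  online: Callable[[str], bool], failback: bool) -> Optional[str]:
    """Which target a client uses, given a referral ordered best-first."""
    if failback:
        # Return to the first (preferred) target as soon as it is online again.
        return next((t for t in ordered if online(t)), None)
    if current is not None and online(current):
        # Without failback the client sticks with any working target, even a remote one.
        return current
    return next((t for t in ordered if online(t)), None)
```

Once the local server recovers, a client without failback keeps using the remote target it switched to, while a client with failback moves back to the local one.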

Enabling Access Based Enumeration (See next Blog for more info)

- On your DFS server, right-click the namespace root

- Select Properties

- Select the Advanced tab and choose “Enable access-based enumeration for this namespace”

- On each Shared Folder, right click > Properties > Advanced > Set explicit view permissions on the DFS Folder which will enable folders to be seen if the user has permission, or the folders will be hidden

Useful Links

http://www.youtube.com/watch?v=KQ_oW7JlRRU

http://www.youtube.com/watch?v=yPyfQ_NkyNw

Installing DFS (Distributed File System)

What is DFS?

DFS stands for Distributed File System and provides two very important benefits for system administrators of Wide Area Networks (WAN) with multiple sites that have a need to easily store, replicate, and find files across all locations.

- The first is the benefit of being able to have one Namespace that all users can use, no matter what their location, to locate the files they share and use.

- The second is a configurable automatic replication service that keeps files in sync across various locations to make sure that everyone is using the same version.

Distributed File System (DFS) allows administrators to group shared folders located on different servers by transparently connecting them to one or more DFS namespaces. A DFS namespace is a virtual view of shared folders in an organization. Using the DFS tools, an administrator selects which shared folders to present in the namespace, designs the hierarchy in which those folders appear, and determines the names that the shared folders show in the namespace. When a user views the namespace, the folders appear to reside on a single, high-capacity hard disk. Users can navigate the namespace without needing to know the server names or shared folders hosting the data. DFS also provides many other benefits, including fault tolerance and load-sharing capabilities, making it ideal for all types of organizations.

Two very important aspects of DFS

DFS NameSpaces

Each namespace appears as a folder with subfolders underneath.

The trick to this is that those folders and files can be on any shared folder on any server in your network without the user having to do any complicated memorization of server and share names. This logical grouping of your shares will also make it easier for users at different sites to share files without resorting to emailing them back and forth.

DFS Replication

This service keeps multiple copies of files in sync.

Why would you need this? Well if you want to improve performance for your DFS users you can have multiple copies of your files at each site. That way a user would be redirected to the file local to them, even though they came through the DFS Namespace. If the user changed the file it would then replicate out to keep all copies out in the DFS Namespace up to date. This feature of course is completely configurable.

DFS Namespaces Illustrated

The following figure illustrates a physical view of file servers and shared folders in the Contoso.com domain. Without a DFS namespace in place, users need to know the names of six different file servers, and they need to know which shared folders reside on each file server.

When the IT group in Contoso.com implements DFS, they must first decide the type of namespace to implement. Windows Server 2003 offers two types of namespaces: stand-alone and domain-based. The IT group also chooses a root name, which is similar to the shared folder name in a Universal Naming Convention (UNC) path \\ServerName\SharedFolderName.

The following figure illustrates two namespaces as users would see them. Notice how the address format differs: one begins with a server name, Software, and the other begins with a domain name, Contoso.com. These differences illustrate the two types of roots: stand-alone roots, which begin with a server name, and domain-based roots, which begin with a domain name. Valid formats for domain names include \\NetBIOSDomainName\RootName and \\DNSDomainName\RootName.

Installing DFS

Installing DFS Management also installs Microsoft .NET Framework 2.0, which is required to run the DFS Management snap-in.

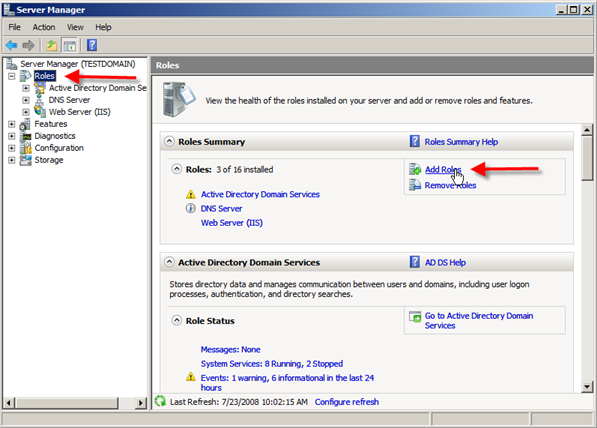

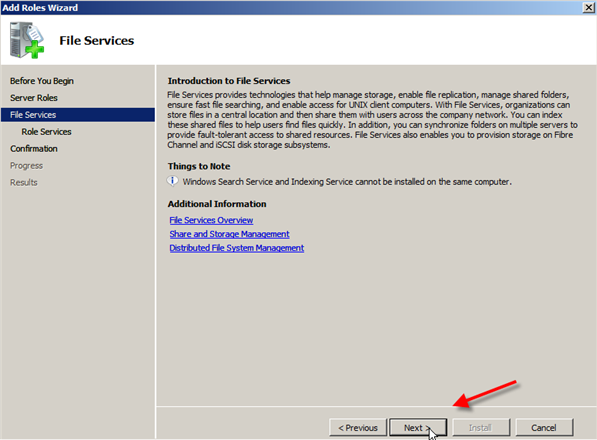

- Open Server Manager.

- Click Roles > Click Add Roles

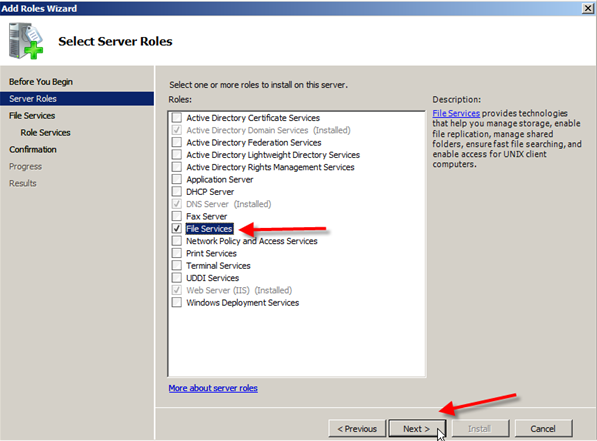

- Select File Services from the list of roles.

- Now you will get an Introduction to File Services information screen; read through it and move on by clicking Next.

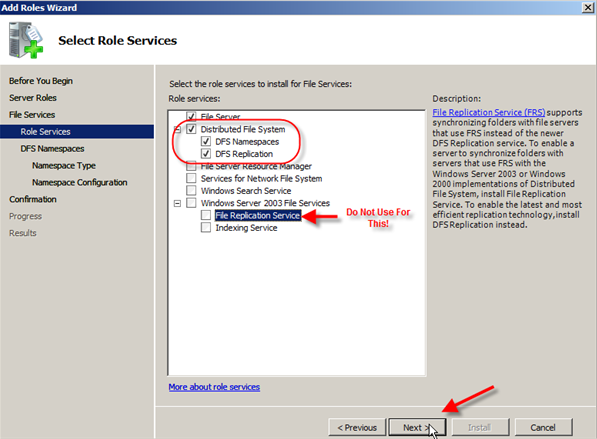

- In Select Role Services you can click Distributed File System, which should also place a check next to DFS Namespaces and DFS Replication; after this, click Next. NOTE: At the bottom you will see Windows Server 2003 File Services and File Replication Service. You would only choose these if you were going to synchronize the 2008 server with older servers using the FRS service.

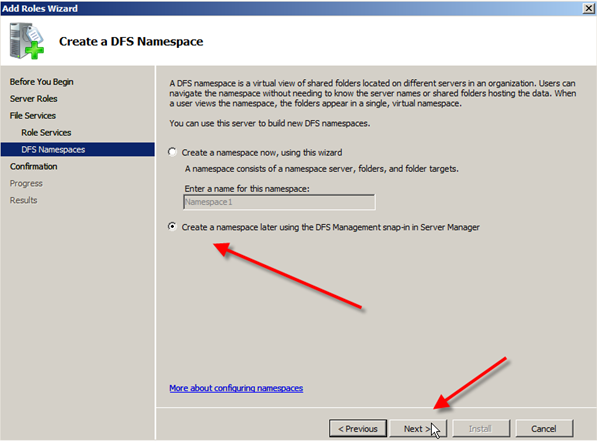

- On the Create a DFS Namespace screen you can choose to create a namespace now or later. I am going to create one later, so I am going to choose Create a namespace later using the DFS Management snap-in in Server Manager and then click Next.

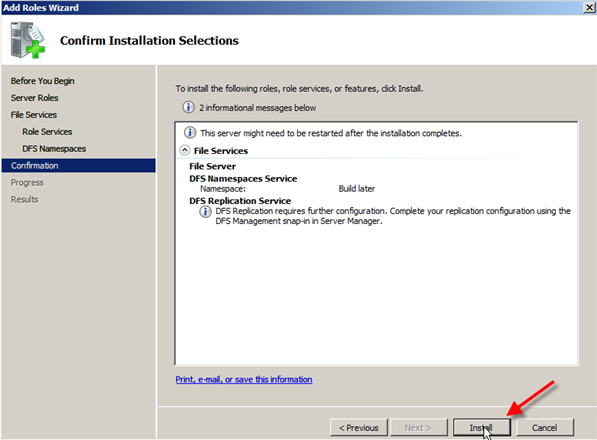

- The next screen allows you to confirm your installation selections, so review and then click Install.

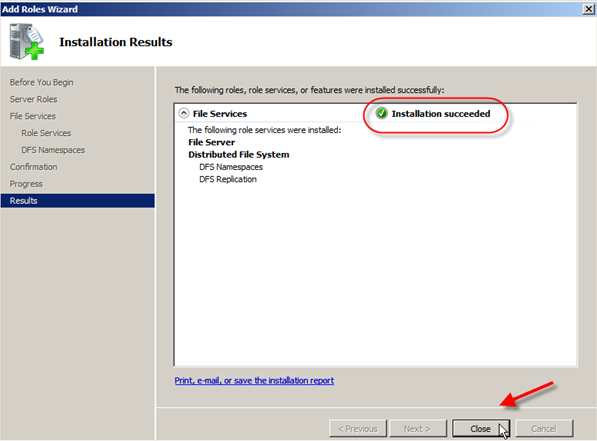

- After a short interval of loading you will see the Installation Results screen which will hopefully have Installation succeeded in the top right. Go ahead and click Close.

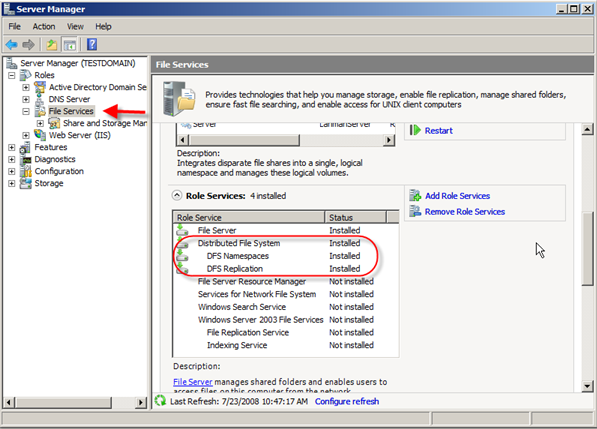

- In Server Manager you should now see File Services and under the Role Services you will see the installed components:

DFS has the following dependencies:

- Active Directory replication. Domain-based DFS requires that Active Directory replication is working properly so that the DFS object resides on all domain controllers in the domain.

- Server Message Block (SMB). Clients must access DFS root servers by using the SMB protocol.

- Remote Procedure Call (RPC) service and Remote Procedure Call Locator service. The DFS tools use RPC to communicate with the DFS service running on DFS root servers.

- Distributed File System service dependencies. The Distributed File System service must be running on all DFS root servers and domain controllers so that DFS can work properly. This service depends on the following services:

The Server service, Workstation service, and Security Accounts Manager (SAM) service on DFS root servers. The Distributed File System service also requires an NTFS volume to store the physical components of DFS on root servers.

The Server service and Workstation service on domain controllers.

See the next Blog Post for information on Configuring DFS

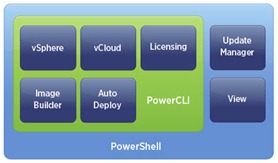

PowerCLI Poster 4.0 and 5.0

Poster of PowerCLI commands for VMware 4.0 +

Poster of PowerCLI commands for VMware 5.0

The new poster adds to the original vSphere PowerCLI core cmdlets and allows you to quickly reference cmdlets from the following:

- vSphere

- Image Builder

- Auto Deploy

- Update Manager

- Licensing

- View

- vCloud