Distributed Switches Overview

A distributed switch functions as a single virtual switch across all associated hosts. A distributed switch allows virtual machines to maintain a consistent network configuration as they migrate across multiple hosts.

Like a vSphere standard switch, each distributed switch is a network hub that virtual machines can use. A distributed switch can forward traffic internally between virtual machines or link to an external network by connecting to uplink adapters.

Each distributed switch can have one or more distributed port groups assigned to it. Distributed port groups group multiple ports under a common configuration and provide a stable anchor point for virtual machines that are connecting to labeled networks. Each distributed port group is identified by a network label, which is unique to the current datacenter. A VLAN ID, which restricts port group traffic to a logical Ethernet segment within the physical network, is optional.

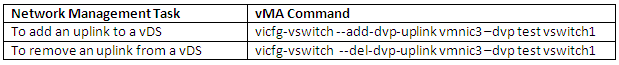

Valid Commands

You can create distributed switches by using the vSphere Client. After you have created a distributed switch, you can:

- Add hosts by using the vSphere Client

- Create distributed port groups with the vSphere Client

- Edit distributed switch properties and policies with the vSphere Client

- Add and remove uplink ports by using vicfg-vswitch

You cannot:

- Create a distributed switch with ESXCLI

- Add or remove uplink ports with ESXCLI

Note: As of vSphere 5.0, most of the legacy esxcfg-*/vicfg-* commands have been migrated to esxcli. Once esxcli reaches feature parity, the esxcfg-*/vicfg-* commands, along with the esxupdate/vihostupdate utilities, are expected to be deprecated and removed.

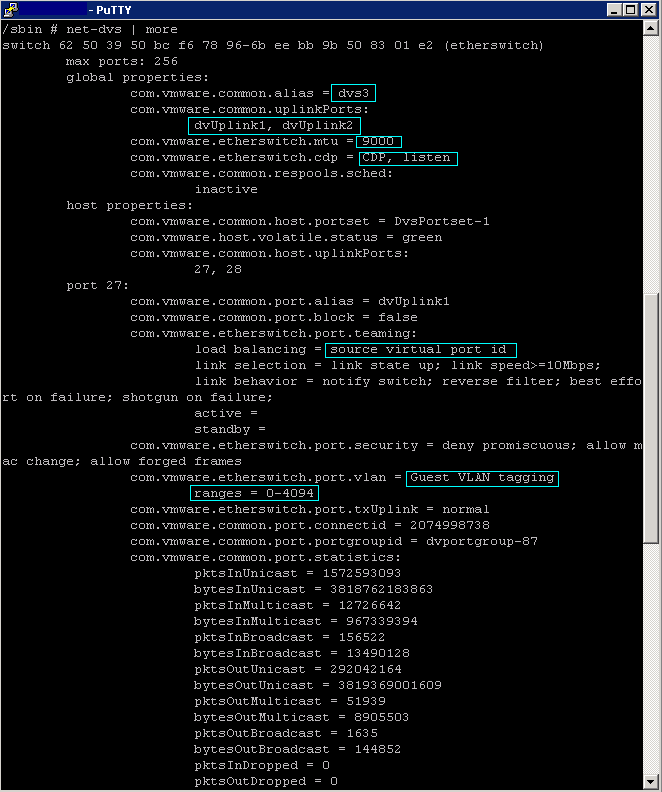

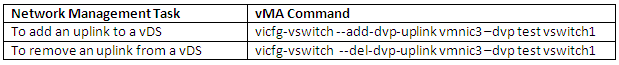

Managing Uplinks in Distributed Switches

Add an Uplink

- vicfg-vswitch <conn_options> --add-dvp-uplink <adapter_name> --dvp <dvport_id> <dvswitch_name>

Remove an Uplink

- vicfg-vswitch <conn_options> --del-dvp-uplink <adapter_name> --dvp <dvport_id> <dvswitch_name>
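
The two command forms above can be sketched as a small dry-run helper that only prints the vicfg-vswitch command line it would run, so it can be reviewed before being executed against a host. The server name, adapter (vmnic5), DVPort ID (128), and switch name (dvSwitch01) are hypothetical placeholders, and the helper name uplink_cmd is made up for illustration. The removal form here also passes the adapter name, which is an assumption based on the vicfg-vswitch reference; adjust it if your vCLI version differs.

```shell
#!/bin/sh
# Dry-run sketch: prints vicfg-vswitch uplink commands instead of running them.
# Every value below is a hypothetical placeholder, not taken from a real setup.
CONN_OPTS="--server esxi01.example.com"

uplink_cmd() {
  # usage: uplink_cmd add|del <adapter_name> <dvport_id> <dvswitch_name>
  if [ "$#" -ne 4 ]; then
    echo "usage: uplink_cmd add|del <adapter_name> <dvport_id> <dvswitch_name>" >&2
    return 2
  fi
  case "$1" in
    add) flag="--add-dvp-uplink" ;;
    del) flag="--del-dvp-uplink" ;;   # assumption: adapter name is also passed on removal
    *) echo "unknown action: $1" >&2; return 2 ;;
  esac
  # Note the double hyphens: long options use "--", not a typographic dash.
  echo "vicfg-vswitch $CONN_OPTS $flag $2 --dvp $3 $4"
}

uplink_cmd add vmnic5 128 dvSwitch01
uplink_cmd del vmnic5 128 dvSwitch01
```

To run a command for real, copy the printed line into a vCLI session and supply any further connection options (such as credentials) that your environment requires.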

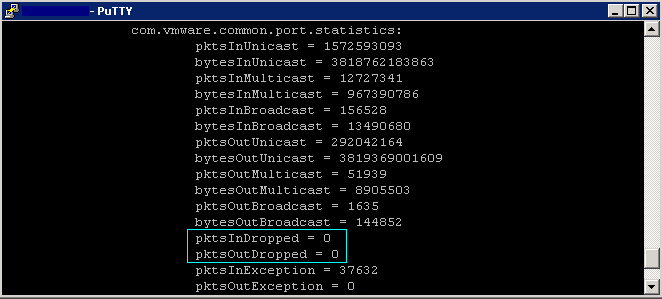

Examples