How can we monitor Java Applications on Virtualised Systems?

System tools such as System Monitor cannot tell us everything we need to know about a Java workload. We need specialized Java monitoring tools, such as the ones listed below, which let us see inside the Java Heap, Garbage Collection, and other relevant Java metrics.

- JConsole

- vCenter Operations Suite
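JConsole reads its figures from the JVM's standard management beans (the `java.lang.management` API), so the same heap numbers can be read from inside the application. The following is a minimal sketch, not part of either tool above; the class name `HeapSnapshot` and its methods are hypothetical names chosen for illustration.

```java
import java.lang.management.ManagementFactory;
import java.lang.management.MemoryMXBean;
import java.lang.management.MemoryUsage;

// Hypothetical helper class for illustration; not part of JConsole or any VMware product.
class HeapSnapshot {

    /** Returns the current heap usage as reported by the JVM's memory MXBean. */
    static MemoryUsage heapUsage() {
        MemoryMXBean memory = ManagementFactory.getMemoryMXBean();
        return memory.getHeapMemoryUsage();
    }

    /** Formats used, committed and maximum heap in megabytes. */
    static String describe(MemoryUsage usage) {
        long mb = 1024L * 1024L;
        // getMax() returns -1 when the maximum is undefined.
        String max = usage.getMax() < 0 ? "undefined" : (usage.getMax() / mb) + " MB";
        return String.format("heap used=%d MB, committed=%d MB, max=%s",
                usage.getUsed() / mb, usage.getCommitted() / mb, max);
    }

    public static void main(String[] args) {
        System.out.println(describe(heapUsage()));
    }
}
```

Running `main` prints one line with the heap figures at that instant; the values differ from run to run and from JVM to JVM.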

What is the Java Heap?

The Java Heap is used to store objects that the program is working on. For example, an object could be a customer record, a file, or anything else the program has to manipulate. As objects are created, used and discarded by the program, you will see the Heap memory size change. Discarded objects (referred to as dead objects) are not immediately removed from the heap when the program is done with them. Instead, a special task called Garbage Collection runs through the heap to detect dead objects. Once it detects a dead object, it deletes the object and frees up the memory.
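To see Garbage Collection at work, the sketch below creates a batch of short-lived objects and then prints how many collections each collector has run. It assumes the standard `GarbageCollectorMXBean` API; the class name `GcActivity` is hypothetical, and `System.gc()` is only a hint that the JVM may ignore, so the counts are not guaranteed to change.

```java
import java.lang.management.GarbageCollectorMXBean;
import java.lang.management.ManagementFactory;

// Hypothetical demo class for illustration.
class GcActivity {

    /** Sums the collection counts of all collectors in this JVM. */
    static long totalCollections() {
        long total = 0;
        for (GarbageCollectorMXBean gc : ManagementFactory.getGarbageCollectorMXBeans()) {
            long count = gc.getCollectionCount(); // -1 if the collector does not report a count
            if (count > 0) {
                total += count;
            }
        }
        return total;
    }

    public static void main(String[] args) {
        long before = totalCollections();

        // Each array becomes a dead object as soon as the loop moves on.
        for (int i = 0; i < 200_000; i++) {
            byte[] garbage = new byte[1024];
        }
        System.gc(); // a request only; the JVM decides whether to collect

        for (GarbageCollectorMXBean gc : ManagementFactory.getGarbageCollectorMXBeans()) {
            System.out.println(gc.getName() + ": runs=" + gc.getCollectionCount()
                    + ", total time=" + gc.getCollectionTime() + " ms");
        }
        System.out.println("collections during demo: " + (totalCollections() - before));
    }
}
```

The collector names in the output depend on which garbage collector the JVM is using.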

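The Java Heap is divided into pools of memory, referred to as generations. In the classic HotSpot layout there are three pools: the Eden Space and the Survivor Space, which together make up the young generation, and the Tenured Gen, which is the old generation.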

- Eden Space

- Survivor Space

- Tenured Gen

This helps the Garbage Collection (GC) process become more efficient by reducing the amount of memory it has to scan on each run. GC runs on the Eden Space most often, as this is where new objects are stored. It runs less often on the Survivor Space and even less often on the Tenured Gen. If an object survives one GC run in the Eden Space, it is moved to the Survivor Space. If an object exists in the Survivor Space for some time, it is moved to the Tenured Gen.
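The pools described above can be listed from a running JVM. The sketch below uses the standard `MemoryPoolMXBean` API; the class name `HeapPools` is hypothetical. Note that the pool names depend on the garbage collector in use: the serial collector reports names such as "Eden Space" and "Tenured Gen", while other collectors prefix or rename them, so treat the names in this article as one possible layout.

```java
import java.lang.management.ManagementFactory;
import java.lang.management.MemoryPoolMXBean;
import java.lang.management.MemoryType;
import java.lang.management.MemoryUsage;
import java.util.ArrayList;
import java.util.List;

// Hypothetical demo class for illustration.
class HeapPools {

    /** Returns the names of all memory pools that belong to the Java heap. */
    static List<String> heapPoolNames() {
        List<String> names = new ArrayList<>();
        for (MemoryPoolMXBean pool : ManagementFactory.getMemoryPoolMXBeans()) {
            if (pool.getType() == MemoryType.HEAP) {
                names.add(pool.getName());
            }
        }
        return names;
    }

    public static void main(String[] args) {
        for (MemoryPoolMXBean pool : ManagementFactory.getMemoryPoolMXBeans()) {
            if (pool.getType() != MemoryType.HEAP) {
                continue; // skip non-heap pools such as the code cache
            }
            MemoryUsage usage = pool.getUsage(); // may be null if the pool is no longer valid
            long usedKb = usage == null ? 0 : usage.getUsed() / 1024;
            System.out.println(pool.getName() + ": used=" + usedKb + " KB");
        }
    }
}
```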

Memory Reclamation Techniques

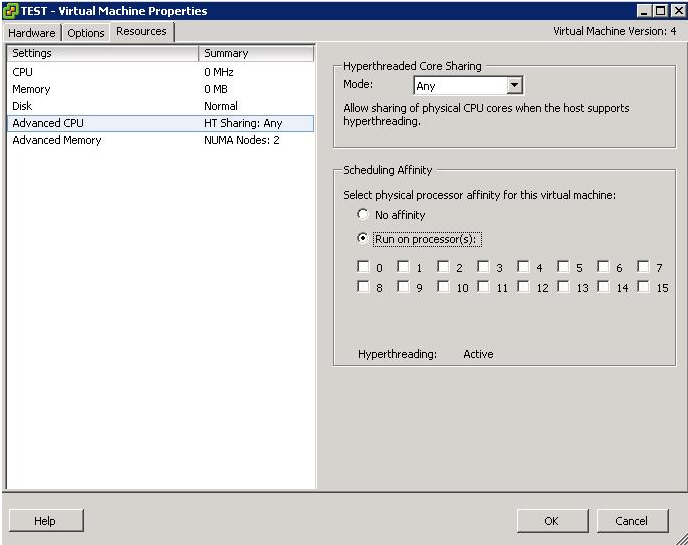

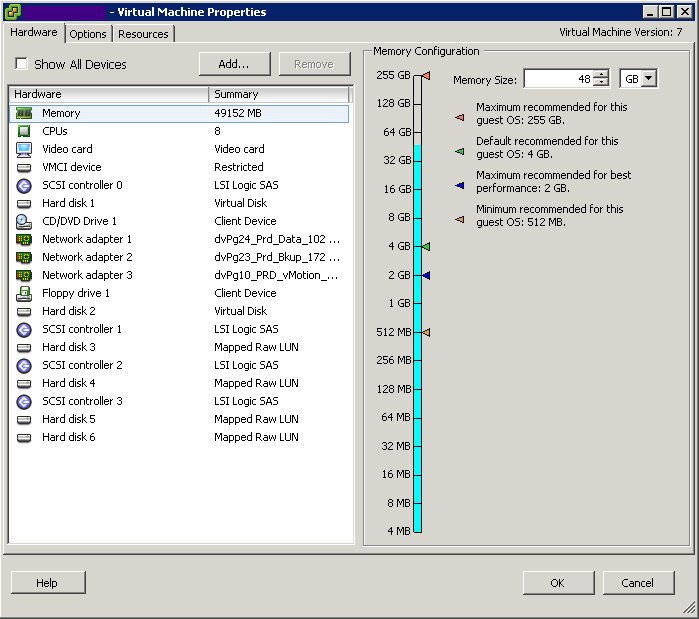

When running Java workloads in an x86 Virtual Machine (i.e. a VM in the VMware sense of the word), it is recommended that you do not overcommit memory, because the JVM memory is an active space where objects are constantly being created and garbage collected. Such an active memory space requires its memory to be available all the time. If you overcommit memory, memory reclamation techniques such as compression, ballooning or swapping may occur and impede performance.

- Memory compression involves compressing pages of memory (zipping) and storing them compressed instead of in native format. It has a performance impact because resources are used to compress and uncompress memory as it is being accessed. The host attempts to compress only inactive memory pages if at all possible. As GC runs through the Java heap, it accesses lots of memory that may have been marked as inactive. This forces any compressed memory it touches to be decompressed, using up further VM resources.

- Ballooning employs the memory balloon driver (vmmemctl), which is part of the VMware Tools package. This is loaded into the guest operating system on boot. When memory resources on the host become scarce (contended), the host tells the balloon driver to request memory (inflate) up to a target size (balloon target). The target is based on the amount of inactive memory the host believes the guest is holding on to. The memory balloon driver starts to request memory from the guest OS to force the guest to clean up inactive memory. Once the balloon driver has been allocated memory by the guest OS, it releases this back to other VMs by telling the hypervisor that the memory is available. Once again, what appears to be inactive memory to the host may soon be subject to garbage collection and become active again. If the guest has no inactive memory to release, it starts paging memory to disk in response to the request for memory from the balloon driver. This has a very negative impact on Java performance.

- Swapping. This is a last-resort memory reclamation technique that no application wants to be faced with. A serious decline in performance is likely with swapping.
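Because the whole heap needs to stay resident to avoid the techniques above, it helps to know roughly how much memory the JVM can claim. The sketch below is a rough lower-bound estimate only, under the assumption that maximum heap plus current non-heap usage is a useful starting figure; it does not count thread stacks, native allocations or the guest OS itself, and it is not a formula taken from VMware's guidance. The class name `JvmFootprint` is hypothetical.

```java
import java.lang.management.ManagementFactory;
import java.lang.management.MemoryMXBean;

// Hypothetical helper for illustration; a rough estimate, not a sizing formula.
class JvmFootprint {

    /** Estimates a lower bound, in bytes, for the memory this JVM may keep active. */
    static long estimateBytes() {
        MemoryMXBean memory = ManagementFactory.getMemoryMXBean();

        // Maximum heap the JVM will attempt to use (set with -Xmx).
        long maxHeap = Runtime.getRuntime().maxMemory();
        if (maxHeap == Long.MAX_VALUE) {
            // No inherent limit reported: fall back to the heap currently committed.
            maxHeap = memory.getHeapMemoryUsage().getCommitted();
        }

        // Non-heap memory in use right now (class metadata, code cache, and so on).
        long nonHeapUsed = memory.getNonHeapMemoryUsage().getUsed();

        return maxHeap + nonHeapUsed;
    }

    public static void main(String[] args) {
        long mb = 1024L * 1024L;
        System.out.println("estimated JVM footprint (lower bound): "
                + estimateBytes() / mb + " MB");
    }
}
```

The result is only a starting point; the memory given to the VM has to be larger to cover everything this estimate leaves out.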

Best Practices

- Follow the Enterprise Java Applications on VMware Best Practice Guide, which says you should not exceed 80% CPU utilization on the ESX host.

- Reserving memory at the VM level is in general not a good idea, but it is essential for Java workloads because of the highly active Java heap. However, creating a memory reservation is a manual intervention step that we should try to avoid, especially in a large, dynamic, automated self-service environment (i.e. Cloud). Also, if we’re reserving memory for peak workloads within our Java applications, we’re wasting resources, as our applications don’t run at peak workload all the time. It would be good if the Java VM could just tell the vSphere VM which memory is active and which is idle, so that vSphere could manage memory better and the administrator could consolidate Java workloads without fear of memory contention and without reserving memory for peak times.

- Introducing VMware vFabric Elastic Memory for Java (EM4J). With EM4J, the traditional memory balloon driver is replaced with the EM4J balloon driver. The EM4J memory balloon sits directly in the Java heap and works with new memory reclamation capabilities introduced in ESXi 5.0. EM4J works with the hypervisor to communicate system-wide memory pressure directly into the Java heap, forcing Java to clean up proactively and return memory at the most appropriate times, when it is least active. You no longer have to be so conservative with your heap sizing because unused heap memory is no longer wasted on uncollected garbage objects. And you no longer have to give Java 100% of the memory that it needs; EM4J ensures that memory is used more efficiently, without risking sudden and unpredictable performance problems.

vFabric Elastic Memory for Java (EM4J)

vFabric Elastic Memory for Java (EM4J) is a set of technologies that helps optimize memory utilization for ESXi virtual machines running Java workloads.

EM4J provides vSphere administrators with the following tools:

- The EM4J plug-in for the vSphere Web Client, together with the EM4J Console Guest Collector, provides a detailed, historical view of virtual machine and JVM memory usage, which helps vSphere administrators size the VM and Java heap memory optimally.

- The EM4J agent establishes a memory balloon in the Java heap, which helps maintain predictable Java application performance when host memory becomes scarce. The balloon works with the ESXi hypervisor to reclaim memory from the Java heap when other VMs need memory.

- The EM4J plug-in and the EM4J agent can be used together or independently.

For more information about EM4J, see the vFabric Elastic Memory for Java Documentation at the link below:

http://www.vmware.com/support/pubs/vfabric-em4j.html