DFS Troubleshooting

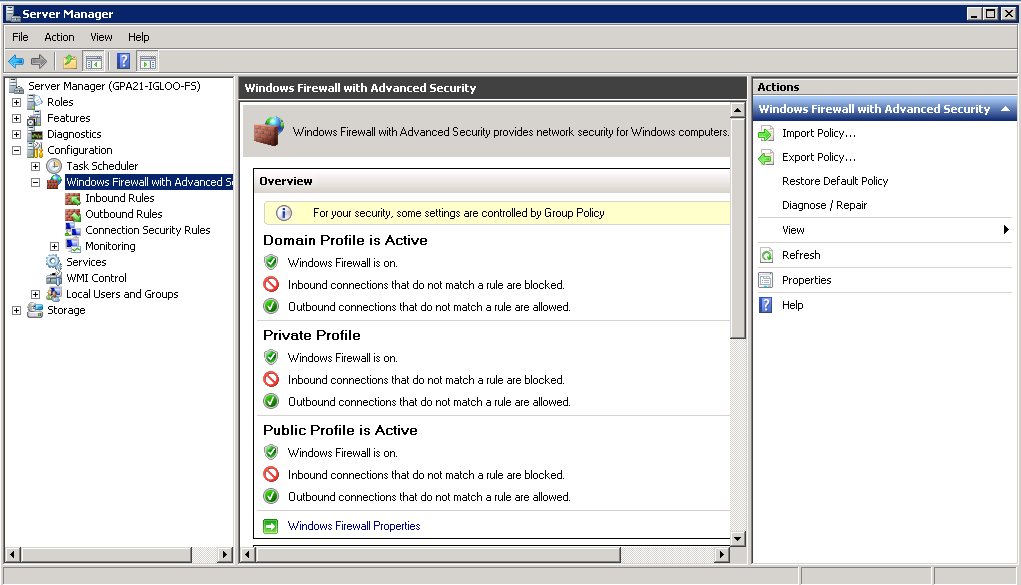

The DFS Management MMC is the tool for most common administration tasks related to DFS Namespaces. It appears under “Administrative Tools” after you add the DFS role service in Server Manager. To manage a DFS namespace server remotely, you can install just the MMC: in Server Manager, choose Add Features, Remote Server Administration Tools (RSAT), Role Administration Tools, File Services Tools.

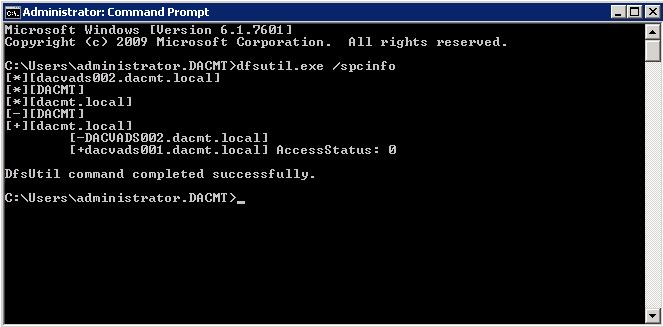

Another option for managing DFS is DFSUTIL.EXE, a command-line tool. It has many options and covers almost any DFS-related activity, from creating a namespace and adding links to exporting the entire configuration and troubleshooting. This makes it very handy for automating tasks with scripts or batch files. DFSUTIL.EXE is an in-box tool in Windows Server 2008.
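To illustrate the kind of automation DFSUTIL.EXE allows, here is a minimal Python sketch that exports the lab namespace to a date-stamped XML file, for example as a scheduled backup. It assumes dfsutil.exe is on the PATH and supports the `dfsutil root export <namespace> <file>` syntax; the file-naming scheme is hypothetical.

```python
import datetime
import subprocess
import sys

NAMESPACE = r"\\dacmt.local\shared"  # the lab namespace used in this post


def export_command(namespace: str, out_file: str) -> list:
    """Build the dfsutil command line that exports a namespace to an XML file."""
    return ["dfsutil.exe", "root", "export", namespace, out_file]


def dated_export_name(day: datetime.date) -> str:
    """Hypothetical naming scheme: one export file per day."""
    return f"dfs-namespace-{day.isoformat()}.xml"


# Only attempt the export when run directly on a Windows host.
if __name__ == "__main__" and sys.platform == "win32":
    cmd = export_command(NAMESPACE, dated_export_name(datetime.date.today()))
    # check=True raises CalledProcessError if dfsutil exits with a non-zero code
    subprocess.run(cmd, check=True)
```

Passing the command as a list rather than a single string lets subprocess handle quoting if the namespace or file name contains spaces.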

What can go wrong?

- Access to the DFS namespace

- Finding shared folders

- Access to DFS links and shared folders

- Security-related issues

- Replication latency

- Failure to connect to a domain controller to obtain a DFSN namespace referral

- Failure to connect to a DFS server

- Failure of the DFS server to provide a folder referral

Methods of Troubleshooting

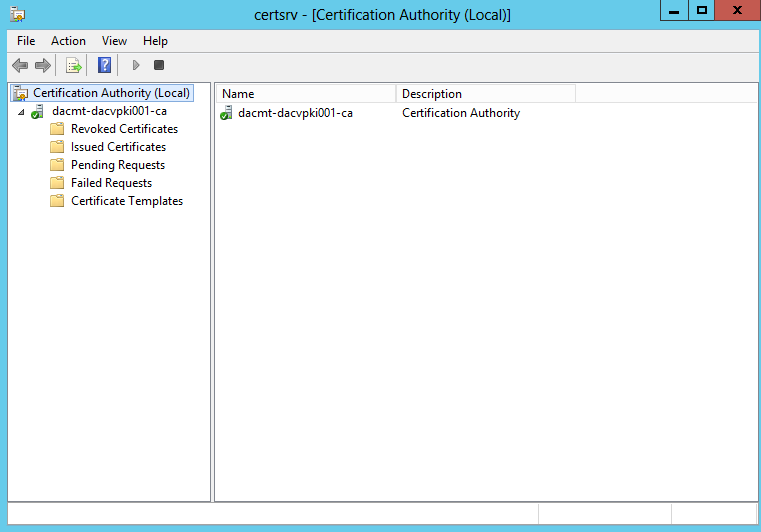

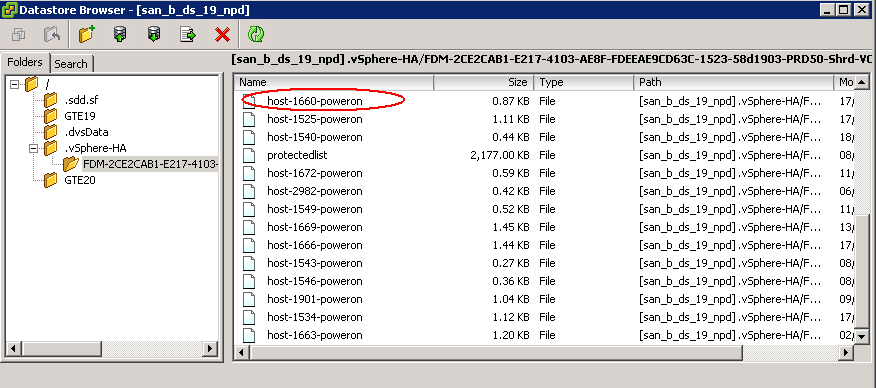

I have a very basic lab set up with DFS running on two servers, which I will use to demonstrate the troubleshooting methods.

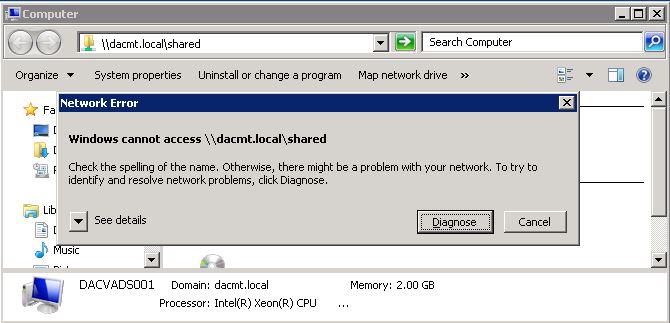

My DFS Namespace is \\dacmt.local\shared.

Troubleshooting Commands

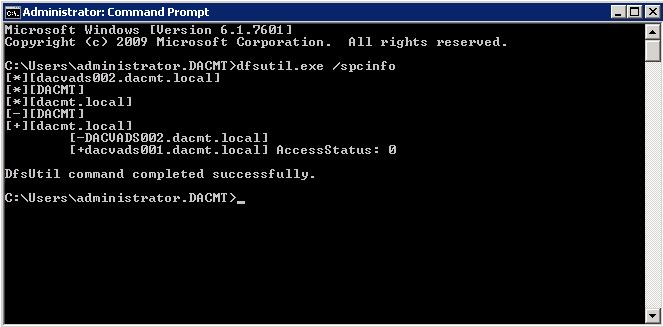

Determine whether the client was able to connect to a domain controller for domain information by using the DFSUtil.exe /spcinfo command. Its output lists the trusted domains, and their domain controllers, that the client has discovered through DFSN referral queries. This list is known as the “Domain Cache”.

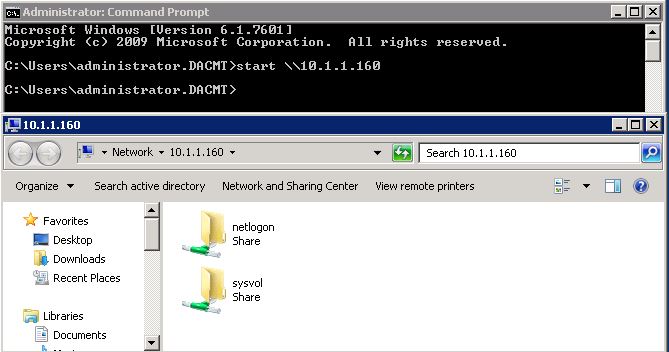

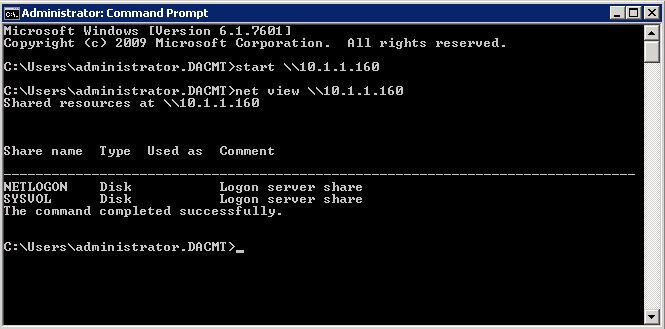

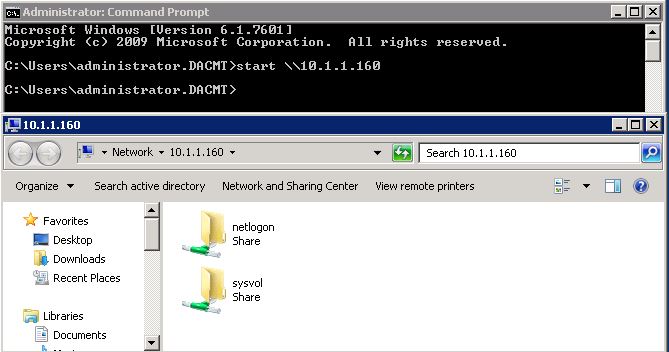
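If you check the domain cache often, a short script can flag expected domains that are missing. This Python sketch does not rely on the exact layout of /spcinfo output (which I have not reproduced here); it simply assumes each cached domain name appears as a whitespace- or bracket-separated token, and the expected names `dacmt` and `dacmt.local` come from this lab.

```python
import re
import subprocess
import sys


def cached_names(spcinfo_output: str) -> set:
    """Split dfsutil /spcinfo output into lower-cased tokens (domain and DC names)."""
    tokens = re.split(r"[\s\\\[\]]+", spcinfo_output)
    return {t.lower() for t in tokens if t}


def missing_domains(spcinfo_output: str, expected: list) -> list:
    """Return the expected domain names that do not appear in the domain cache output."""
    names = cached_names(spcinfo_output)
    return [d for d in expected if d.lower() not in names]


if __name__ == "__main__" and sys.platform == "win32":
    out = subprocess.run(["dfsutil.exe", "/spcinfo"], capture_output=True, text=True).stdout
    print("Missing from domain cache:", missing_domains(out, ["dacmt", "dacmt.local"]))
```

For example, with the synthetic text `"[*] DACMT\n[*] dacmt.local"`, `missing_domains(..., ["dacmt.local"])` returns an empty list.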

- start \\10.1.1.160 (where 10.1.1.160 is your DC)

This should open an Explorer window listing the shares hosted by your domain controller.

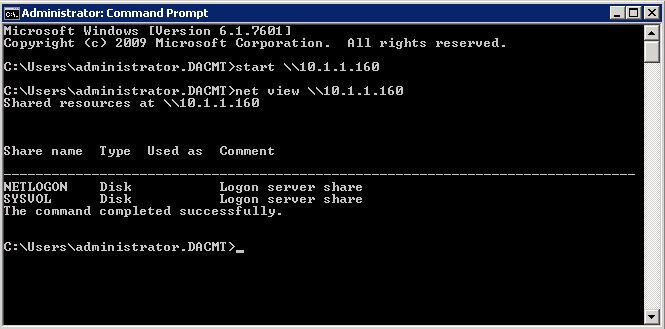

- net view \\10.1.1.160 (where 10.1.1.160 is your DC)

A successful connection lists all shares that are hosted by the domain controller.

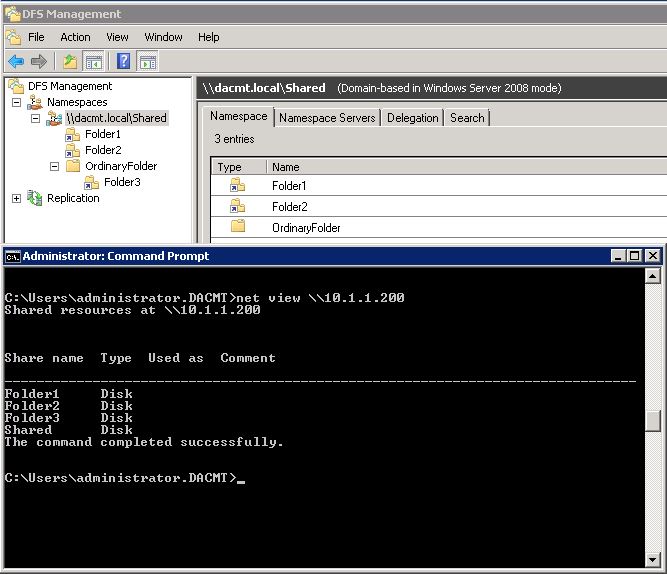

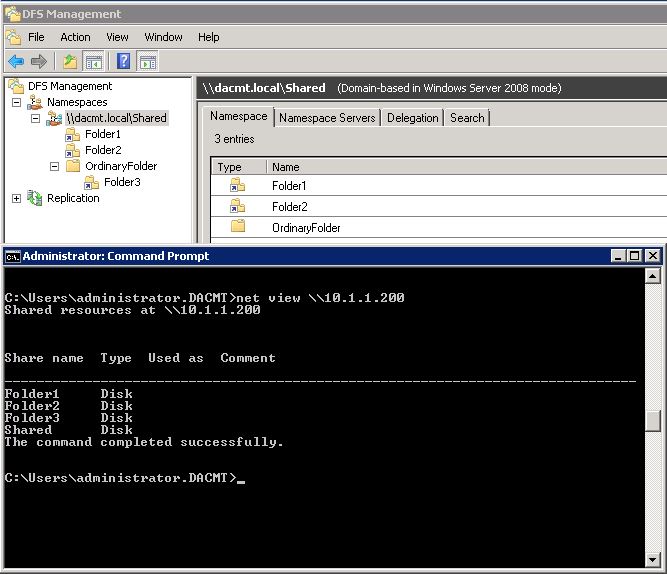

- net view \\10.1.1.200 (where 10.1.1.200 is your DFS Server)

This lists your namespace and the shares held on your DFS Server.

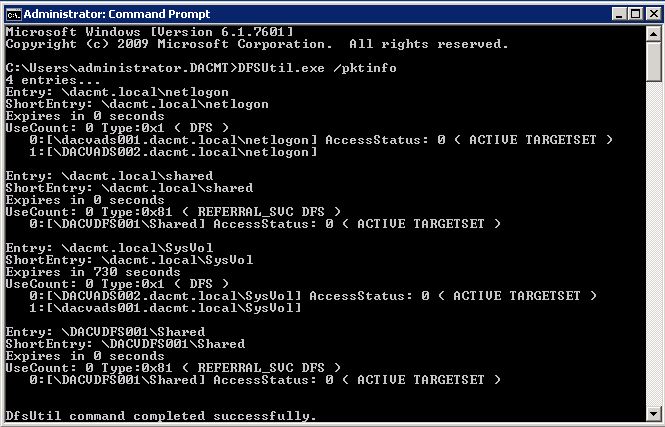

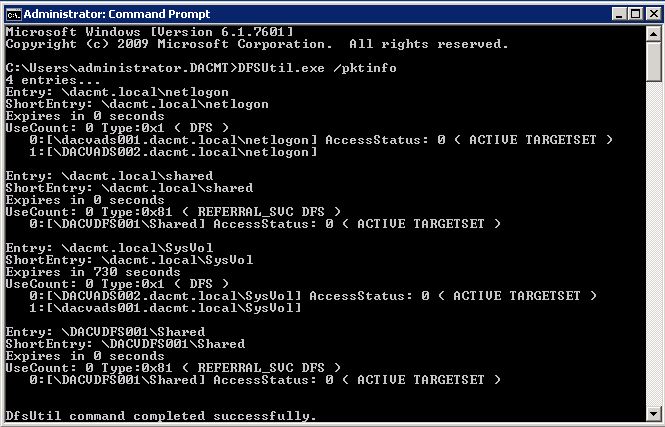
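Both Explorer and net view talk to the servers over SMB, which on these Windows versions normally uses TCP port 445. As a quick scripted pre-check, this Python sketch tests whether that port answers on the lab DC and DFS server (addresses taken from this post). It only tests TCP reachability: a closed port points at a firewall or the Server service rather than at DFS, and an open port says nothing about share permissions.

```python
import socket

# Lab addresses from this post; substitute your own DC and DFS server.
SERVERS = {"domain controller": "10.1.1.160", "DFS server": "10.1.1.200"}


def port_open(host: str, port: int = 445, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # connection refused, host unreachable, or timed out
        return False


def smb_report(servers: dict = SERVERS, port: int = 445) -> dict:
    """Map each server role to whether its SMB port accepted a connection."""
    return {role: port_open(addr, port) for role, addr in servers.items()}
```

On the client, `print(smb_report())` shows one True/False result per server.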

If the above connection tests succeed, determine whether a valid DFSN referral is returned to the client when it accesses the namespace. You can do this by viewing the referral cache (also known as the PKT cache) with the DFSUtil.exe /pktinfo command.

If you cannot find an entry for the desired namespace, this is evidence that the domain controller did not return a referral.

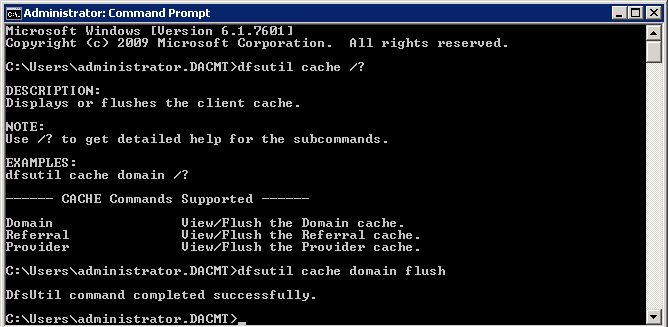

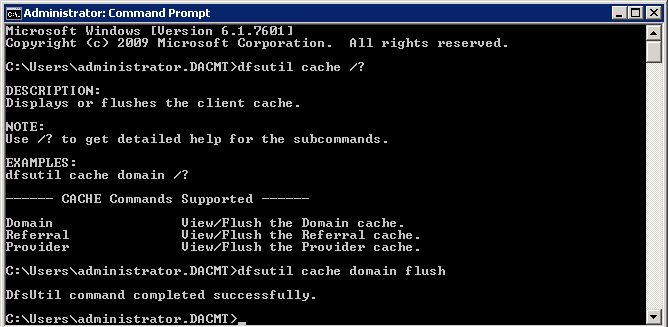
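The same check can be scripted. This Python sketch first opens the namespace so the client has a reason to request a referral, then searches the /pktinfo output for the namespace path. It assumes only that the path appears somewhere in the output, with one or two leading backslashes and in any letter case; it does not parse the rest of the referral entry.

```python
import os
import re
import subprocess
import sys

NAMESPACE = r"\\dacmt.local\shared"  # lab namespace from this post


def namespace_in_referral_cache(pktinfo_output: str, namespace: str) -> bool:
    r"""Case-insensitively look for a namespace path in dfsutil /pktinfo output.

    Leading backslashes are normalised, so \\dacmt.local\shared and \dacmt.local\shared
    both match, while \dacmt.local\shared2 does not.
    """
    target = namespace.strip().strip("\\").lower()
    # The path must not continue with a name character (which would be a different share).
    pattern = r"\\" + re.escape(target) + r"(?![\w$.-])"
    return re.search(pattern, pktinfo_output.lower()) is not None


if __name__ == "__main__" and sys.platform == "win32":
    try:
        os.listdir(NAMESPACE)  # touch the namespace so the client requests a referral
    except OSError as exc:
        print("Could not open namespace:", exc)
    out = subprocess.run(["dfsutil.exe", "/pktinfo"], capture_output=True, text=True).stdout
    found = namespace_in_referral_cache(out, NAMESPACE)
    print("Referral cached" if found else "No referral entry: the DC may not have returned one")
```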

- dfsutil.exe cache domain flush

- dfsutil.exe cache referral flush

- dfsutil.exe cache provider flush

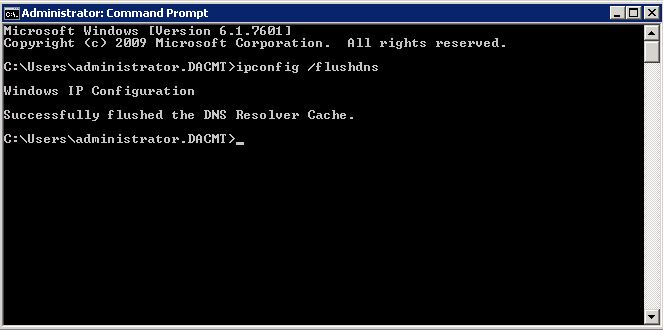

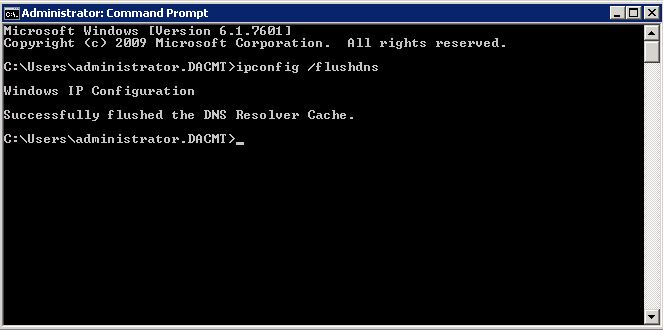

- ipconfig /flushdns, dfsutil.exe /pktflush and dfsutil.exe /spcflush
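Before re-testing, it is convenient to flush everything in one go. This Python sketch runs `ipconfig /flushdns` followed by the `dfsutil.exe cache ... flush` commands listed above and reports each exit code, carrying on if one fails. It assumes it runs on the client being tested with a dfsutil.exe that supports the `cache` subcommands; the `runner` parameter is only there so the logic can be exercised without Windows.

```python
import subprocess
import sys

# ipconfig first, then the dfsutil cache flushes listed above.
FLUSH_COMMANDS = [
    ["ipconfig", "/flushdns"],
    ["dfsutil.exe", "cache", "domain", "flush"],
    ["dfsutil.exe", "cache", "referral", "flush"],
    ["dfsutil.exe", "cache", "provider", "flush"],
]


def run_all(commands, runner=None) -> dict:
    """Run every command in order and record its exit code; later commands run even if one fails."""
    if runner is None:
        def runner(cmd):
            return subprocess.run(cmd).returncode
    return {" ".join(cmd): runner(cmd) for cmd in commands}


if __name__ == "__main__" and sys.platform == "win32":
    for command, code in run_all(FLUSH_COMMANDS).items():
        print(f"exit {code}: {command}")
```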

By default, DFSN stores NetBIOS names for root servers. DFSN can also be configured to use DNS names, for environments without WINS servers. For more information, see the Microsoft Knowledge Base article on this topic.

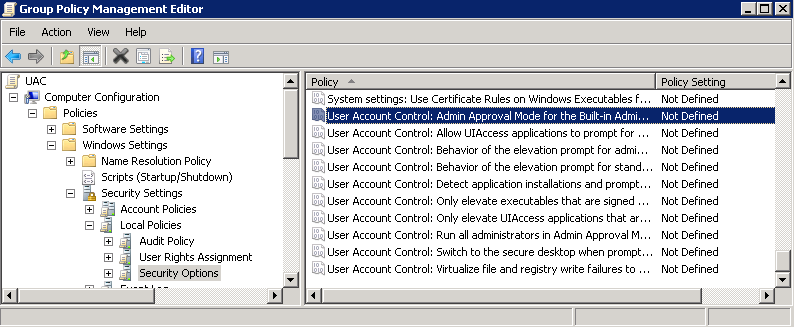

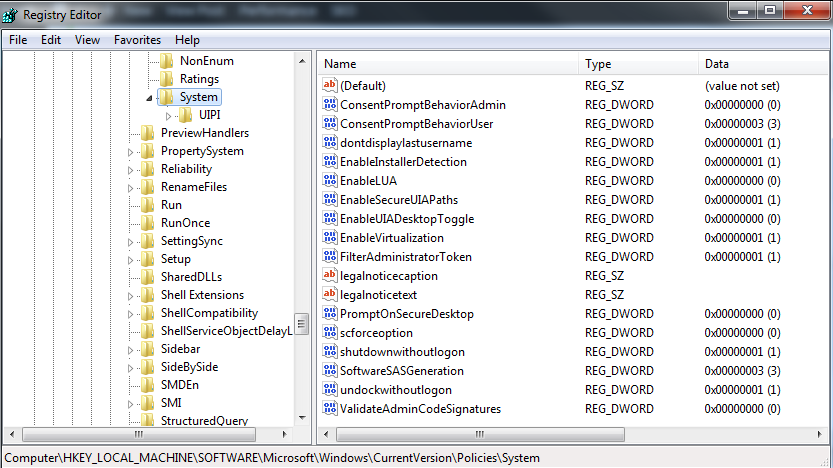
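The switch to DNS names is controlled by a registry value on the namespace servers. This Python sketch reads it with the standard winreg module. The key path and value name (HKLM\SYSTEM\CurrentControlSet\Services\Dfs\Parameters, DfsDnsConfig, where 1 means use DNS names) are my understanding of the Microsoft guidance on FQDN referrals, so verify them against the Knowledge Base article for your Windows version. Reading a remote server's registry also assumes the Remote Registry service is running there.

```python
import sys
from typing import Optional

DFS_PARAMS = r"SYSTEM\CurrentControlSet\Services\Dfs\Parameters"


def dfs_dns_config(computer: Optional[str] = None) -> Optional[int]:
    """Return the DfsDnsConfig DWORD (1 = DNS names in referrals), or None if unset or unavailable.

    computer is None for the local machine, or a remote name in the form r"\\server".
    """
    if sys.platform != "win32":
        return None  # winreg only exists on Windows
    import winreg
    try:
        with winreg.ConnectRegistry(computer, winreg.HKEY_LOCAL_MACHINE) as hive:
            with winreg.OpenKey(hive, DFS_PARAMS) as key:
                value, _value_type = winreg.QueryValueEx(key, "DfsDnsConfig")
                return int(value)
    except OSError:
        return None  # key or value missing, or remote registry unreachable
```

For the lab, `dfs_dns_config(r"\\10.1.1.200")` would query the DFS server; None means the value is not set, so NetBIOS names are still in use.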

- DFS and System Configuration

Even when connectivity and name resolution are functioning correctly, DFS configuration problems can still cause access errors on a client. DFS relies on up-to-date DFS configuration data, correctly configured service settings, and a correct Active Directory site configuration.

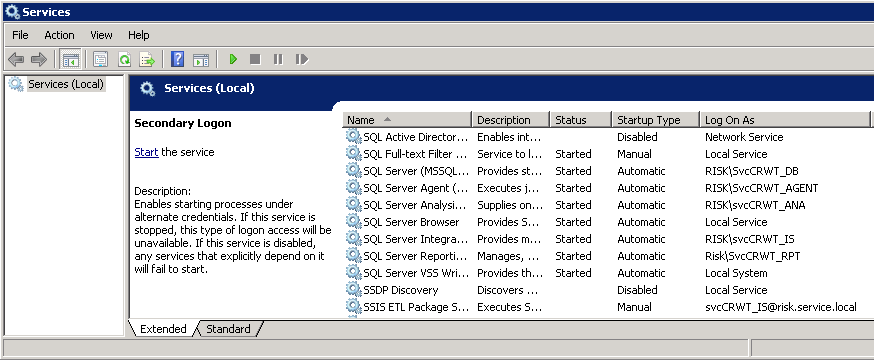

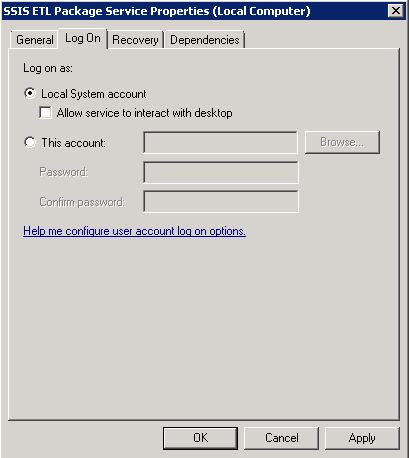

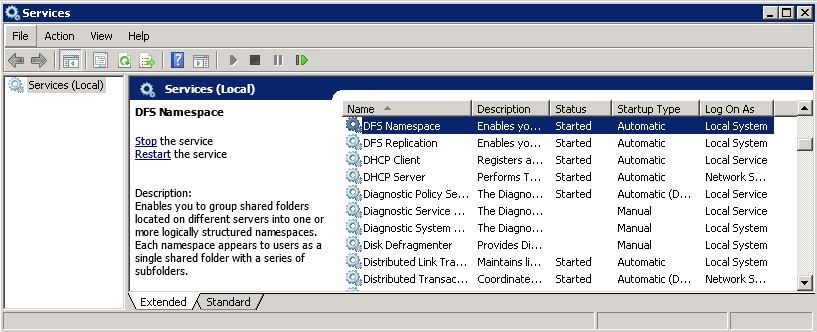

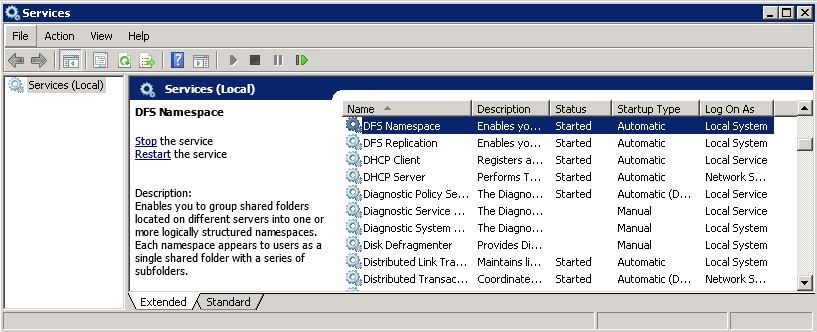

First, verify that the DFS service is started on all domain controllers and on DFS namespace/root servers. If the service is started in all locations, make sure that no DFS-related errors are reported in the system event logs of the servers.

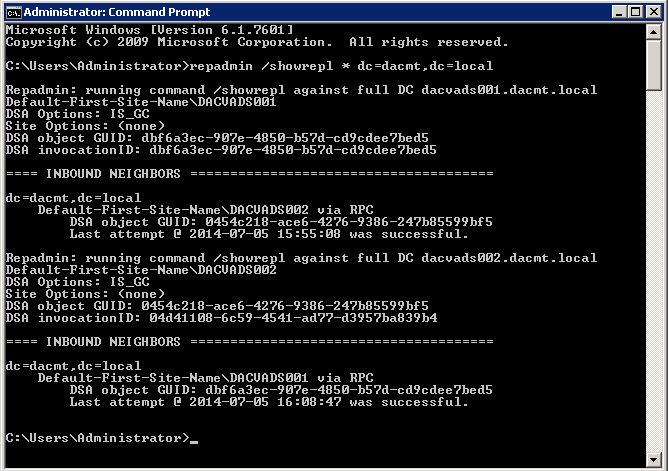

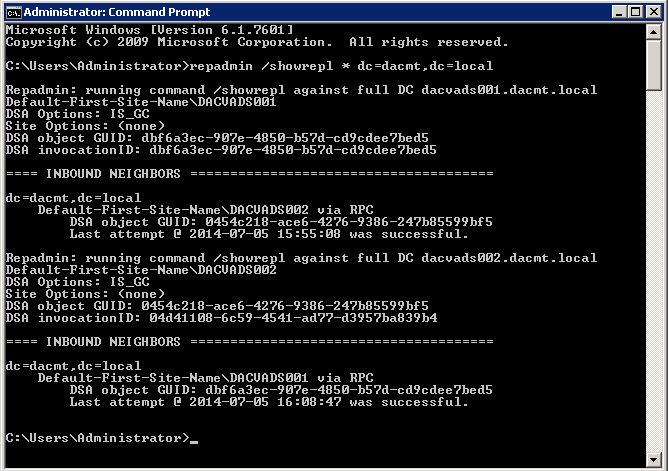
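Checking the service on several servers by hand gets tedious, so here is a Python sketch that runs `sc \\<server> query Dfs` against the lab DC and DFS server and pulls out the state. It assumes the DFS Namespace service name is `Dfs` and that you have rights to query services remotely. It checks only the running state: the Startup Type (shown by `sc qc Dfs`) and the event logs still need a look.

```python
import re
import subprocess
import sys

# Lab servers from this post: the DC and the DFS namespace server.
SERVERS = ["10.1.1.160", "10.1.1.200"]


def sc_query_command(server: str) -> list:
    """sc.exe command that queries the DFS Namespace service (service name "Dfs") on a remote host."""
    return ["sc", rf"\\{server}", "query", "Dfs"]


def service_state(sc_output: str):
    """Extract the state word (e.g. RUNNING, STOPPED) from 'sc query' output, or None if absent."""
    match = re.search(r"STATE\s*:\s*\d+\s+(\w+)", sc_output)
    return match.group(1) if match else None


if __name__ == "__main__" and sys.platform == "win32":
    for server in SERVERS:
        out = subprocess.run(sc_query_command(server), capture_output=True, text=True).stdout
        print(server, service_state(out) or "state not found")
```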

- repadmin /showrepl * dc=dacmt,dc=local

When an administrator makes a change to a domain-based namespace, the change is made on the Primary Domain Controller (PDC) emulator master. Domain controllers and DFS root servers periodically poll the PDC emulator for configuration information. If the PDC emulator is unavailable, or if “Root Scalability Mode” is enabled (in which case root servers poll their closest domain controller instead of the PDC emulator), Active Directory replication latency and failures may prevent servers from issuing correct referrals.

If a client cannot gain access to a shared folder specified by a DFS link, check the following:

- Use the DFS administrative tool to identify the underlying shared folder.

- Check status to confirm that the DFS link and the shared folder (or replica set) to which it points are valid.

- The user should go to the Windows Explorer DFS property page to determine the actual shared folder that he or she is attempting to connect to.

- The user should attempt to connect to the shared folder directly through its physical path. Commands such as ping, net view or net use let you verify connectivity to the target computer and shared folder.

- If the DFS link has a replica set configured, be aware of the latency involved in content replication: files and folders that have been modified on one replica might not yet have replicated to the others.
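Once you know the physical target behind a link, you can test it while bypassing the namespace entirely. This Python sketch splits a UNC target into server and share using Windows path rules (the standard `ntpath` module, so it also runs elsewhere), builds the ping, net view and net use checks from the list above, and tests whether the folder opens as a directory. The target `\\fs01\data\reports` is hypothetical.

```python
import ntpath
import os


def split_unc(target: str) -> tuple:
    r"""Split a UNC path such as \\fs01\data\reports into ("fs01", "data", "reports")."""
    drive, rest = ntpath.splitdrive(target.replace("/", "\\"))
    if not drive.startswith("\\\\"):
        raise ValueError(f"not a UNC path: {target!r}")
    server, share = drive[2:].split("\\", 1)
    return server, share, rest.strip("\\")


def direct_checks(target: str) -> list:
    """Commands for testing the physical target directly, bypassing the DFS namespace."""
    server, share, _ = split_unc(target)
    return [
        ["ping", server],
        ["net", "view", "\\\\" + server],
        ["net", "use", f"\\\\{server}\\{share}"],
    ]


def target_reachable(target: str) -> bool:
    """True if the physical folder opens as a directory (os.path.isdir accepts UNC paths on Windows)."""
    return os.path.isdir(target)
```

For instance, `direct_checks(r"\\fs01\data\reports")` yields the three commands for server `fs01` and share `data`, which can then be run one by one.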

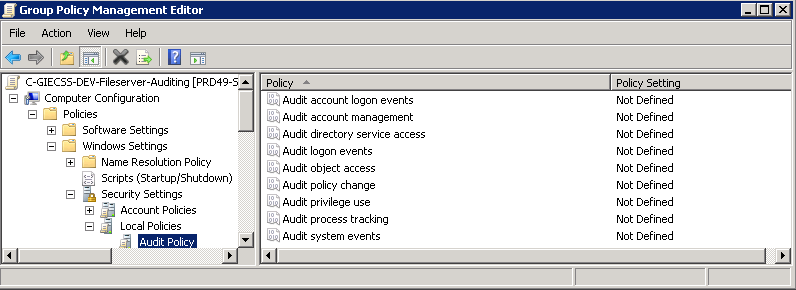

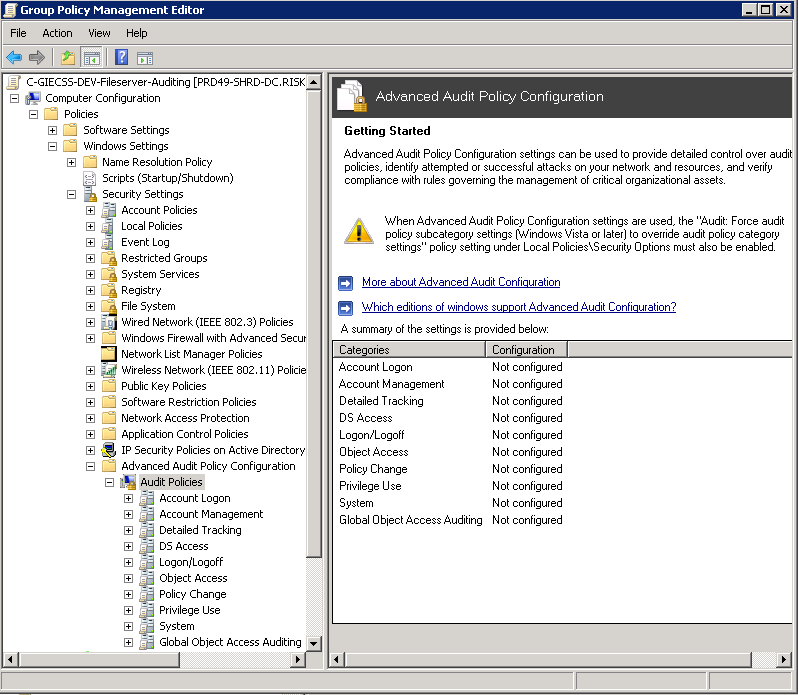

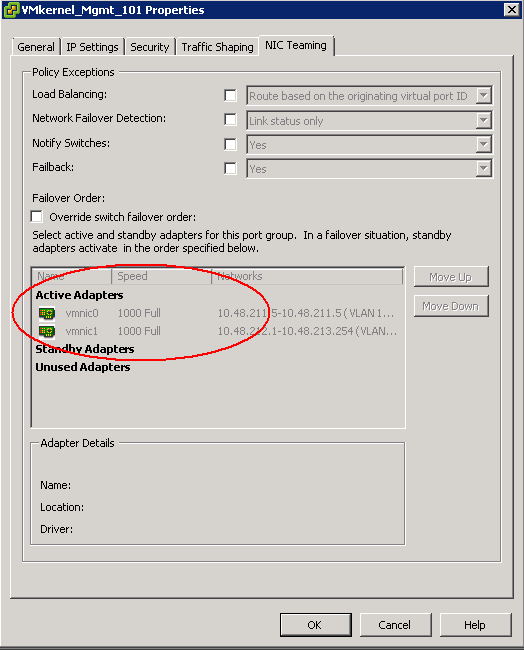

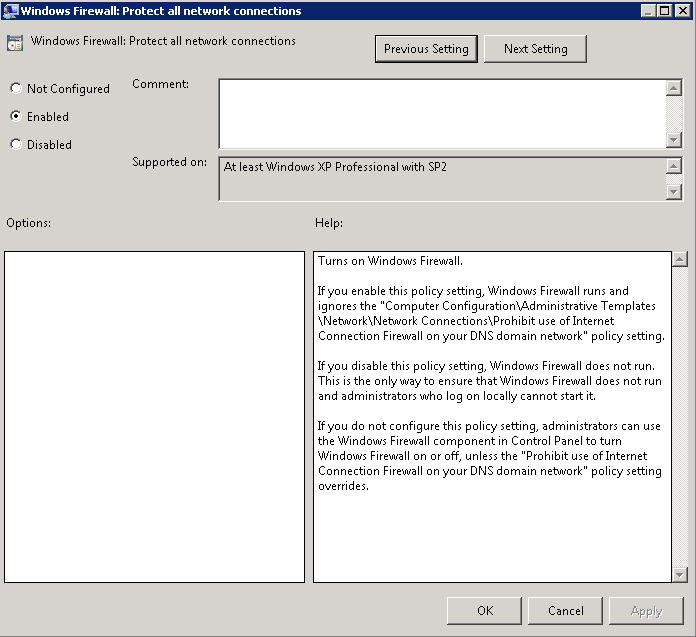

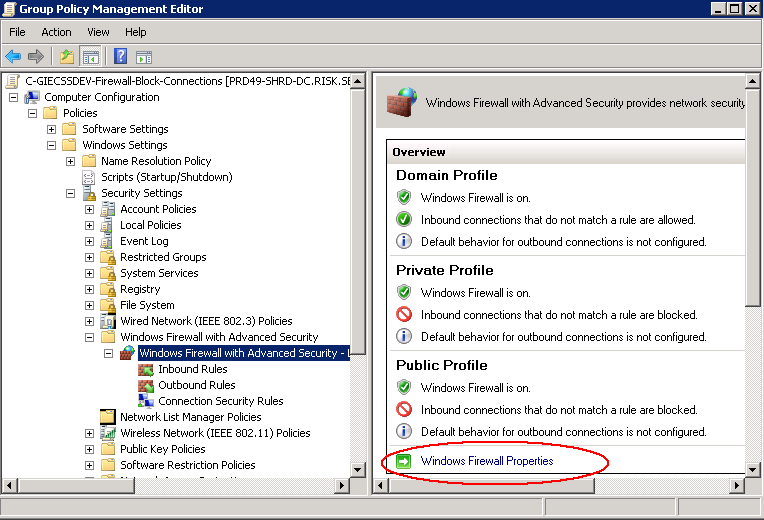

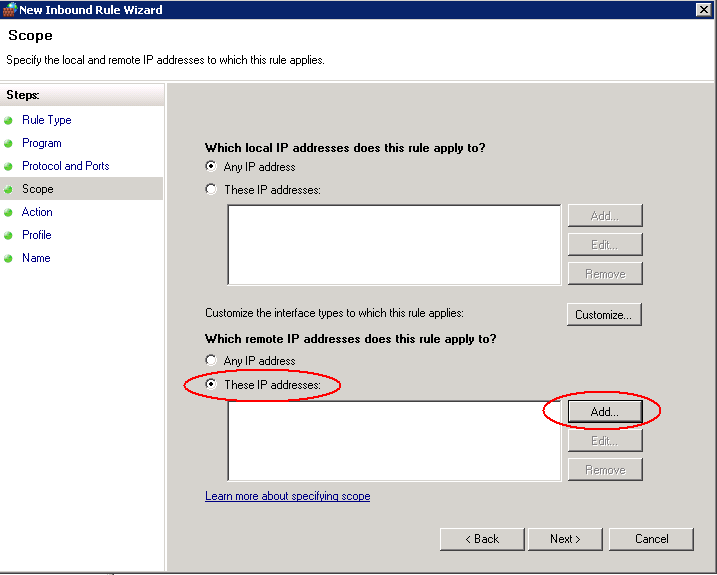

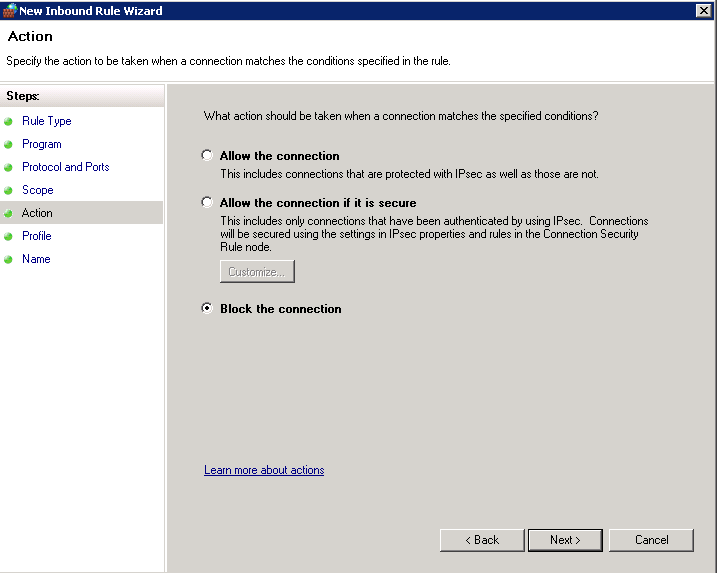

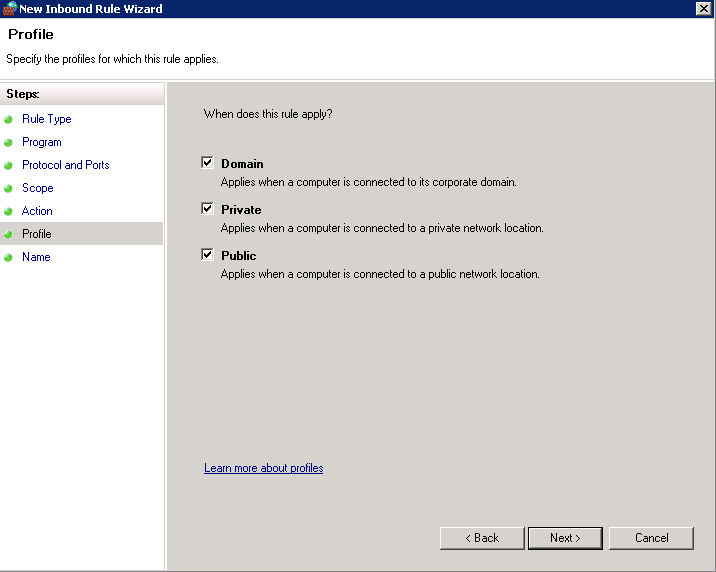

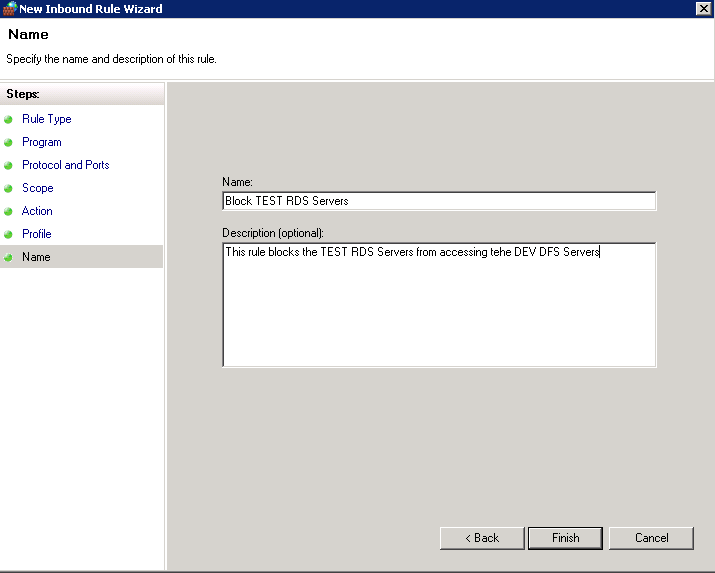

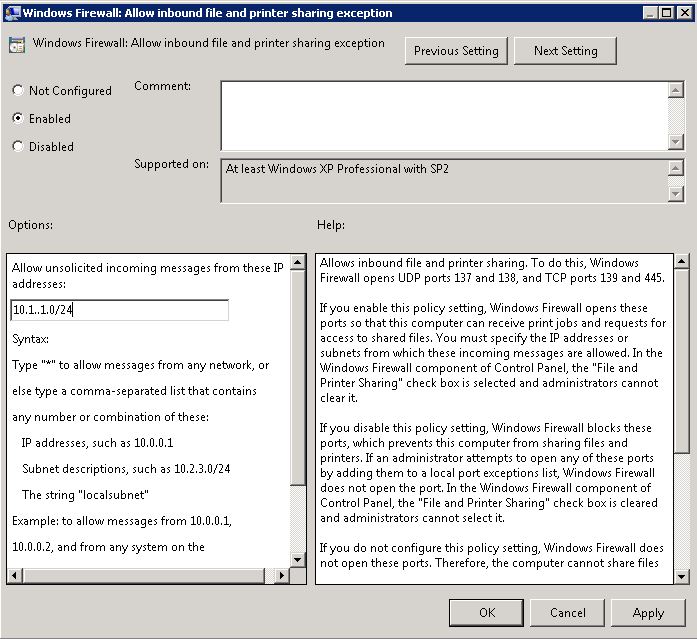

It is also worth checking that you do not have any general networking issues on the computer you are connecting from, and that no firewall rules or Group Policies are blocking File and Printer Sharing!

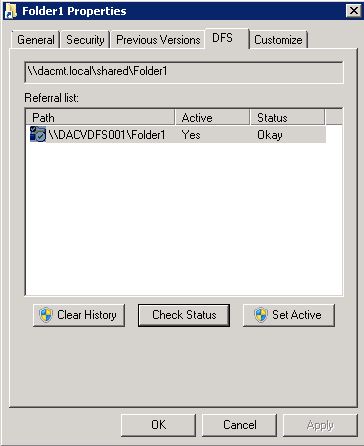

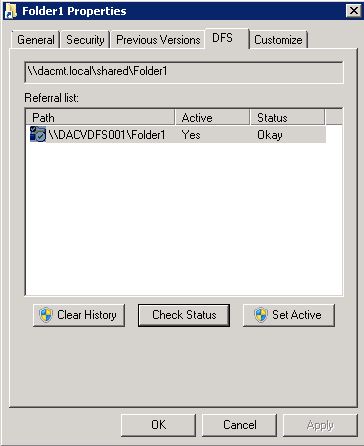

- DFS Tab on DFS folders accessed through the DFS Namespace

One of the first things to determine when tracking an access-related issue with DFS is the name of the underlying shared folder to which the client has been referred. Windows 2000 includes a shell extension to Windows Explorer for precisely this purpose: when you right-click a folder in the DFS namespace, the Properties window has a DFS tab. From the DFS tab, you can see which shared folder the DFS link is referring you to. You can also see the list of replicas for the DFS link, so you can disconnect from one replica and select another. Finally, you can refresh the referral cache for that DFS link, which makes the client obtain a new referral for the link from the DFS server.

Because the topology knowledge is stored in the domain’s Active Directory, there is some latency before any modification to the DFS namespace is replicated to all domain controllers.

From an administrator’s perspective, remember that the DFS administrative console connects directly to a domain controller. Therefore, the information shown in one DFS administrative console might not match the information shown in another console that is reading from a different domain controller.

From a client’s perspective, there is the additional possibility that the client itself cached the information before it was modified. So, even if the modification has replicated to all the domain controllers and the DFS servers have picked it up, the client might still be using an older cached copy. Being able to flush the cache manually from the DFS tab in the Properties window in Windows Explorer, before the referral time-out expires, is useful in this situation.
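These layers of delay add up. As a rough worst-case model (my own simplification, not a documented formula), a client may not see a namespace change until the AD replication delay to the DC the root server polls, plus the root server's polling interval, plus the client's referral cache time have all elapsed. The inputs below (hourly polling, a 1800-second cache time for folder referrals, 15 minutes of replication) are commonly cited defaults or purely hypothetical figures; check the referral settings of your own namespace.

```python
def worst_case_staleness(poll_interval_s: int, client_ttl_s: int, ad_replication_s: int = 0) -> int:
    """Rough upper bound, in seconds, before a client sees a namespace change (toy model).

    poll_interval_s:  how often the root server polls for namespace changes.
    client_ttl_s:     how long the client keeps a cached referral.
    ad_replication_s: AD replication delay to the DC being polled; 0 when the
                      root server polls the PDC emulator directly.
    """
    return ad_replication_s + poll_interval_s + client_ttl_s


# Assumed inputs: 1-hour polling, 30-minute folder referral cache.
print(worst_case_staleness(3600, 1800) // 60)  # prints 90 (minutes)
# Root scalability mode with a hypothetical 15-minute replication delay:
print(worst_case_staleness(3600, 1800, ad_replication_s=900) // 60)  # prints 105
```

Flushing the client cache removes the last term, which is why it is often the quickest fix on a single machine.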

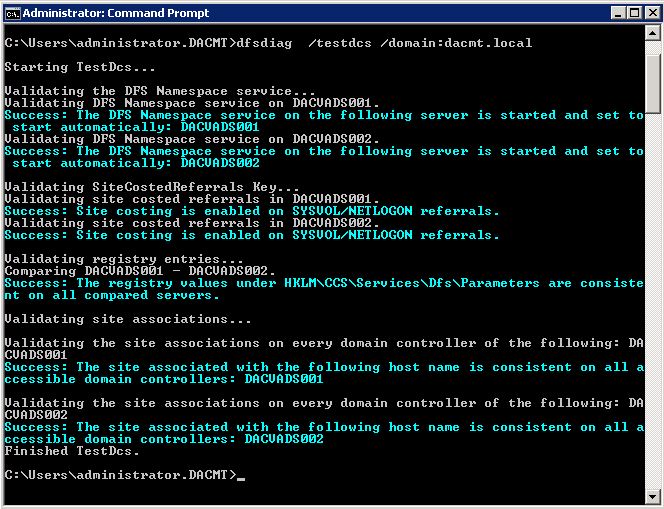

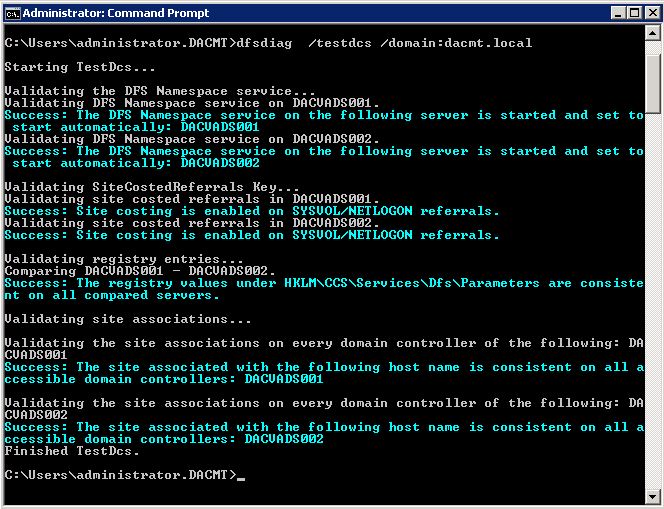

- dfsdiag /testdcs /domain:dacmt.local

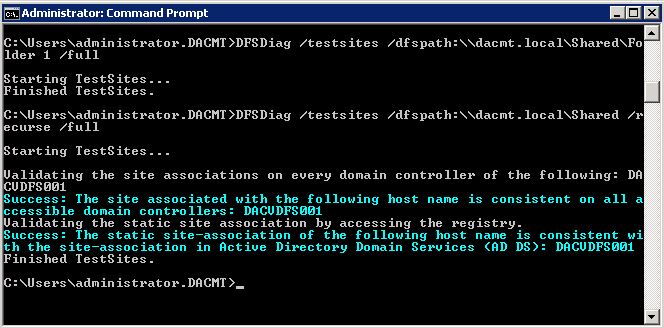

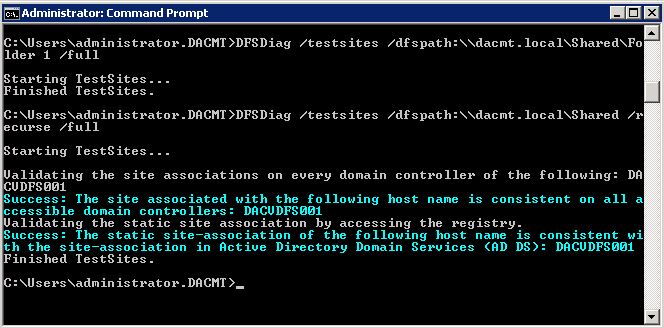

- DFSDiag /testsites /dfspath:"\\dacmt.local\Shared\Folder 1" /full

- DFSDiag /testsites /dfspath:\\dacmt.local\Shared /recurse /full

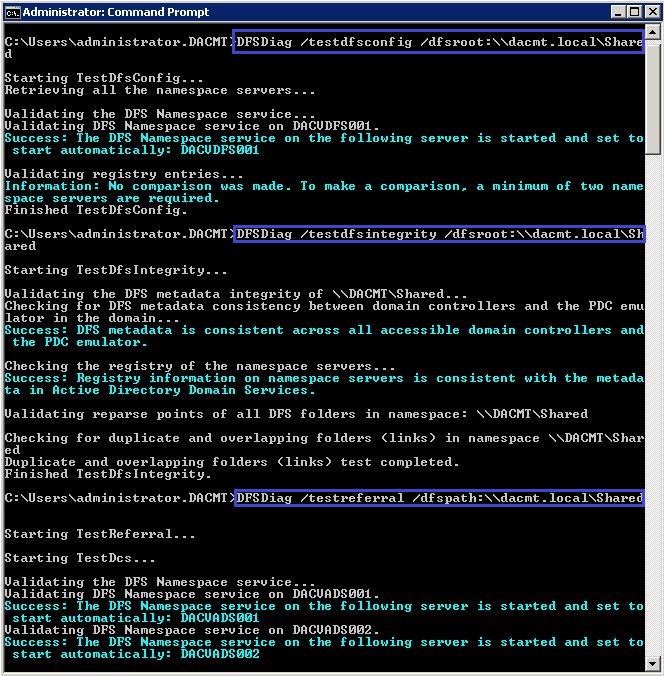

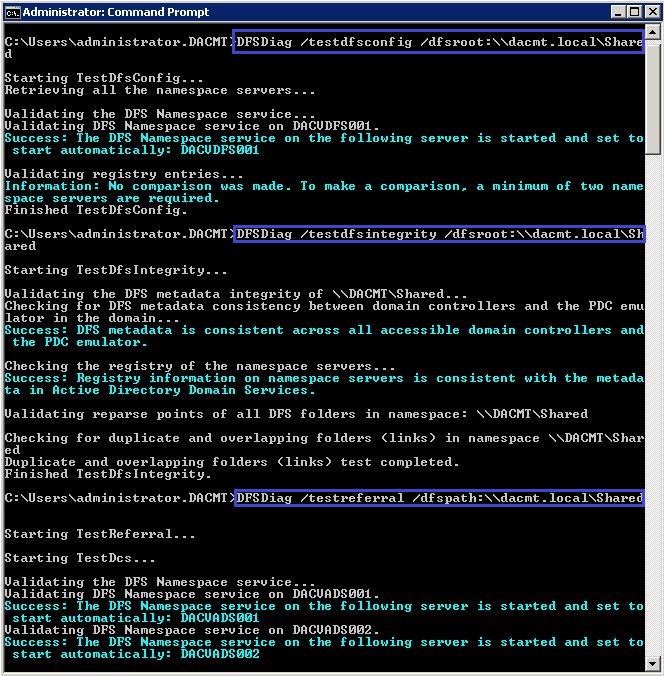

- DFSDiag /testdfsconfig /dfsroot:\\dacmt.local\Shared

- DFSDiag /testdfsintegrity /dfsroot:\\dacmt.local\Shared

- DFSDiag /testreferral /dfspath:\\dacmt.local\Shared

The /testdcs option checks the configuration of the domain controllers in the domain. It verifies that the DFS Namespace service is running on all the DCs and that its Startup Type is set to Automatic, checks for support of site-costed referrals for NETLOGON and SYSVOL, and verifies the consistency of site association by hostname and IP address on each DC.
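To run the whole DFSDiag battery from this section in one pass, here is a Python sketch that builds the commands for the lab domain and namespace and prints each report. It assumes dfsdiag is on the PATH and parses quoted arguments in the usual Windows way; passing arguments as a list lets subprocess quote a path with spaces, such as `Folder 1`. Read the printed reports rather than relying on exit codes alone.

```python
import subprocess
import sys
from typing import Optional


def dfsdiag_commands(domain: str, root: str, folder: Optional[str] = None) -> list:
    """The DFSDiag tests shown above, as argument lists for subprocess."""
    root = root.rstrip("\\")
    commands = [
        ["dfsdiag", "/testdcs", f"/domain:{domain}"],
        ["dfsdiag", "/testsites", f"/dfspath:{root}", "/recurse", "/full"],
        ["dfsdiag", "/testdfsconfig", f"/dfsroot:{root}"],
        ["dfsdiag", "/testdfsintegrity", f"/dfsroot:{root}"],
        ["dfsdiag", "/testreferral", f"/dfspath:{root}"],
    ]
    if folder:
        # A path with spaces stays one list element; subprocess quotes it on Windows.
        commands.insert(2, ["dfsdiag", "/testsites", f"/dfspath:{folder}", "/full"])
    return commands


if __name__ == "__main__" and sys.platform == "win32":
    for cmd in dfsdiag_commands("dacmt.local", r"\\dacmt.local\Shared", r"\\dacmt.local\Shared\Folder 1"):
        result = subprocess.run(cmd, capture_output=True, text=True)
        print(" ".join(cmd))
        print(result.stdout)
```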


DFSR and File Locking

DFS lacks a feature that is central to a collaborative environment in which inter-office file servers are mirrored and data is shared: file locking. Without integrated file locking, using DFS to mirror file servers exposes live documents to version conflicts. For example, if a colleague in Office A opens and edits a document while a colleague in Office B is working on the same document, DFS will keep only the changes made by whoever closes the file last.

Another version conflict can arise even when the two colleagues are not working on the same file at the same time. DFS Replication is a single-threaded, “pull” process, so synchronisation tasks can quite easily queue up and create a backlog. As a result, changes made at one location are not immediately replicated to the other, and this delay creates yet another opportunity for file version conflicts.

http://blogs.technet.com/b/askds/archive/2009/02/20/understanding-the-lack-of-distributed-file-locking-in-dfsr.aspx
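The two scenarios can be captured in a toy Python model. This is an illustration of the idea, not DFSR's actual algorithm: real DFSR conflict handling has more detail (for instance, it moves the losing copy to a ConflictAndDeleted folder rather than simply discarding it). The offices, times and contents are hypothetical, measured in minutes on a shared clock.

```python
from dataclasses import dataclass


@dataclass
class Save:
    office: str
    closed_at: int  # minutes on a shared clock (hypothetical)
    content: str


def surviving_version(saves: list) -> Save:
    """Toy last-writer-wins: with no distributed lock, the save closed last replaces the others."""
    return max(saves, key=lambda s: s.closed_at)


def edits_stale_copy(changed_at: int, replication_delay: int, other_opens_at: int) -> bool:
    """True if the other office opens the file before the earlier change has replicated to it."""
    return other_opens_at < changed_at + replication_delay


saves = [Save("Office A", 10, "A's edits"), Save("Office B", 12, "B's edits")]
print(surviving_version(saves).office)  # prints Office B: A's edits are lost
print(edits_stale_copy(changed_at=0, replication_delay=30, other_opens_at=20))  # prints True
```

In the second case, Office B starts editing a copy that does not yet contain Office A's change, so whichever version is saved later overwrites the other.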

NETBIOS Considerations

In terms of NetBIOS, the default behavior of DFS is to use NetBIOS names for all target servers in the namespace. This allows clients that support only NetBIOS name resolution to locate and connect to targets in a DFS namespace. Administrators can use NetBIOS names when specifying target names, and those exact paths are added to the DFS metadata. For example, an administrator can specify a target \\dacmt\Users, where dacmt is the NetBIOS name of a server whose DNS name (FQDN) is dacmt.local.

http://support.microsoft.com/kb/244380
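When comparing targets stored with NetBIOS names against their DNS equivalents, a small helper can rewrite the server part of a path. This Python sketch uses the standard `ntpath` module for Windows path rules; the `fqdn_for` lookup table is a hypothetical stand-in for real DNS resolution, filled here with the example from the paragraph above.

```python
import ntpath


def to_fqdn_path(unc_path: str, fqdn_for: dict) -> str:
    r"""Rewrite the server part of a UNC path, e.g. \\dacmt\Users -> \\dacmt.local\Users.

    fqdn_for maps lower-case NetBIOS names to DNS names; unknown servers are left unchanged.
    """
    drive, rest = ntpath.splitdrive(unc_path)
    if not drive.startswith("\\\\"):
        raise ValueError(f"not a UNC path: {unc_path!r}")
    server, share = drive[2:].split("\\", 1)
    fqdn = fqdn_for.get(server.lower(), server)
    return "\\\\" + fqdn + "\\" + share + rest
```

For example, `to_fqdn_path(r"\\dacmt\Users", {"dacmt": "dacmt.local"})` returns `\\dacmt.local\Users`.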