Tuning Configurations

- Always follow the vendor's recommendations, whether the array is from EMC, NetApp, HP or another vendor

- Document all configurations

- In a well-planned virtual infrastructure implementation, a descriptive naming convention aids in identification and mapping through the multiple layers of virtualization from storage to the virtual machines. A simple and efficient naming convention also facilitates configuration of replication and disaster recovery processes.
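A naming convention is easiest to keep consistent when names are generated and parsed programmatically. The sketch below assumes a hypothetical `<site>-<array>-<tier>-<protocol>-<lun>` scheme. The field names and the `build_datastore_name`/`parse_datastore_name` helpers are illustrative only, not a vendor standard.

```python
from typing import NamedTuple


class DatastoreName(NamedTuple):
    """Fields of a hypothetical naming scheme: site-array-tier-protocol-lun."""
    site: str
    array: str
    tier: str
    protocol: str
    lun: int


def build_datastore_name(site: str, array: str, tier: str, protocol: str, lun: int) -> str:
    """Join the fields into a lowercase name, zero-padding the LUN ID to 3 digits."""
    parts = [site, array, tier, protocol]
    if any(not p or "-" in p for p in parts):
        raise ValueError("fields must be non-empty and must not contain '-'")
    return "-".join(p.lower() for p in parts) + f"-{lun:03d}"


def parse_datastore_name(name: str) -> DatastoreName:
    """Split a name built by build_datastore_name back into its fields."""
    site, array, tier, protocol, lun = name.split("-")
    return DatastoreName(site, array, tier, protocol, int(lun))


if __name__ == "__main__":
    name = build_datastore_name("LON", "na01", "t1", "nfs", 7)
    print(name)  # lon-na01-t1-nfs-007
    print(parse_datastore_name(name))
```

Because every field survives the round trip, a datastore name can be traced back to its site, array and LUN, which is the mapping across layers that the convention is meant to provide.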

- Make sure your SAN fabric is redundant, with multipath I/O (MPIO) between hosts and storage

- Use separate networks for storage array management and storage I/O. This applies to all storage protocols but is especially relevant to Ethernet-based deployments (NFS, iSCSI, FCoE). The separation can be physical (dedicated switches and subnets) or logical (VLANs), but it must exist.

- If you use an IP-based storage protocol (NFS or iSCSI), the storage target may need more than one IP address. Whether it does depends on the capabilities of your networking hardware.

- With IP-based storage protocols (NFS and iSCSI) you can bond multiple Ethernet ports together. NetApp refers to this function as a VIF (virtual interface). Where possible, create LACP VIFs rather than multimode VIFs.

- Use CAT 6 cabling rather than CAT 5

- Enable Flow-Control (should be set to receive on switches and

transmit on iSCSI targets) - Enable spanning tree protocol with either RSTP or portfast

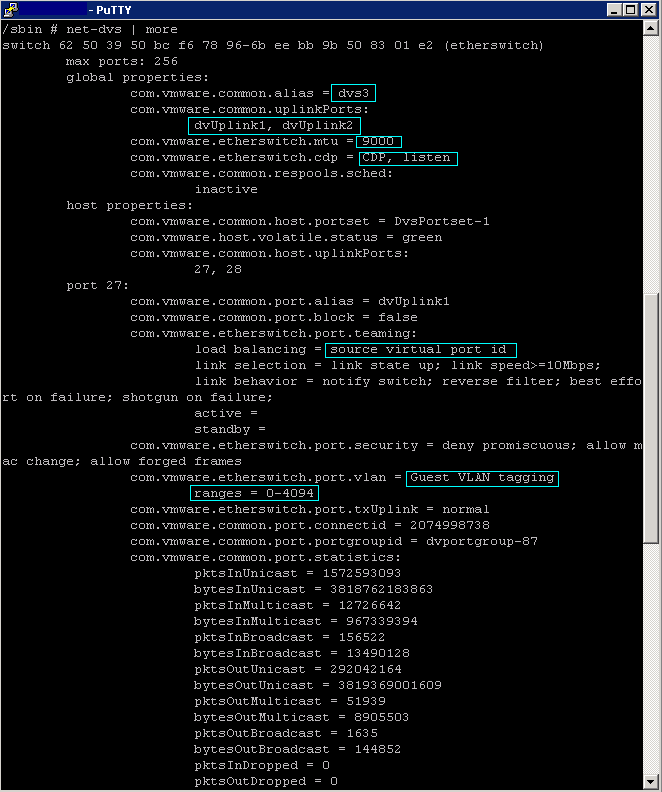

enabled. Spanning Tree Protocol (STP) is a network protocol that makes sure of a loop-free topology for any bridged LAN - Configure jumbo frames end-to-end. 9000 rather than 1500 MTU
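Simple arithmetic shows why jumbo frames help. This sketch assumes IPv4 and TCP headers of 20 bytes each with no options, so each frame carries the MTU minus 40 bytes of payload. Ethernet framing and iSCSI/NFS protocol headers are deliberately ignored, so the figures are illustrative only.

```python
IP_HEADER = 20   # bytes, IPv4 header without options (assumption)
TCP_HEADER = 20  # bytes, TCP header without options (assumption)


def frames_needed(payload_bytes: int, mtu: int) -> int:
    """Number of frames required to carry payload_bytes of TCP data at a given MTU."""
    per_frame = mtu - IP_HEADER - TCP_HEADER
    # Integer ceiling division: a partially filled last frame still counts
    return (payload_bytes + per_frame - 1) // per_frame


if __name__ == "__main__":
    one_mib = 1024 * 1024
    for mtu in (1500, 9000):
        print(mtu, frames_needed(one_mib, mtu))
    # prints "1500 719" then "9000 118"
```

Moving 1 MiB takes roughly six times fewer frames at 9000 MTU, so hosts, switches and targets process far fewer headers per byte. The gain only materialises if every device in the path uses the larger MTU, hence "end-to-end".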

- Ensure Ethernet switches have enough port buffers and other internal resources to handle iSCSI and NFS traffic optimally

- Use link aggregation for NFS

- NFS uses a maximum of two TCP sessions per datastore (one control session and one data session)

- If using FC, ensure that each HBA is zoned correctly to both storage processors (SPs)

- Create RAID groups and LUNs according to the application vendor's recommendations

- Use tiered storage to separate high-performance VMs from lower-performing VMs

- Choose virtual disk formats as required: thick provision eager zeroed, thick provision lazy zeroed, or thin
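With thin disks, the capacity provisioned to VMs can exceed what the datastore physically holds. A minimal sketch of the overcommit arithmetic, using hypothetical disk and datastore sizes:

```python
def overcommit_ratio(provisioned_gb: list[float], datastore_gb: float) -> float:
    """Ratio of total capacity provisioned to thin disks versus datastore capacity."""
    if datastore_gb <= 0:
        raise ValueError("datastore capacity must be positive")
    return sum(provisioned_gb) / datastore_gb


if __name__ == "__main__":
    # Hypothetical example: ten 100 GB thin disks on a 500 GB datastore
    disks = [100.0] * 10
    print(overcommit_ratio(disks, 500.0))  # 2.0
```

A ratio above 1.0 means the datastore can fill up if the guests eventually write their full allocation, so thin disks need capacity monitoring. Thick disks (lazy or eager zeroed) reserve their full size when they are created, so they cannot overcommit a datastore.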

- Choose RDMs or VMFS-formatted datastores according to supportability and the recommendations of the application vendor and the virtualisation vendor

- Utilise VAAI (vStorage APIs for Array Integration), supported by vSphere 5, so that the array can offload operations such as cloning and block zeroing

- As a rule of thumb, place no more than 15 VMs on each datastore
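Applying the 15-VM rule of thumb is a ceiling division. This sketch sizes by VM density only; capacity and performance per datastore still need to be checked separately.

```python
def datastores_needed(vm_count: int, max_vms_per_datastore: int = 15) -> int:
    """Minimum number of datastores so that none exceeds the per-datastore VM limit."""
    if vm_count < 0 or max_vms_per_datastore < 1:
        raise ValueError("vm_count must be >= 0 and the limit must be >= 1")
    # Integer ceiling division: a partly filled datastore still counts
    return (vm_count + max_vms_per_datastore - 1) // max_vms_per_datastore


if __name__ == "__main__":
    print(datastores_needed(100))  # 7
```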

- VMFS extents (spanning one datastore across multiple LUNs) are generally not recommended

- Use deduplication if your array supports it. Deduplication stores only one copy of identical data, which reduces the capacity consumed by similar VMs
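Array deduplication is vendor-specific. This sketch only illustrates the general idea of block-level deduplication, assuming fixed 4 KB blocks identified by SHA-256 digests; it is not how any particular array implements the feature, and production implementations handle hash collisions and metadata far more carefully.

```python
import hashlib


def deduplicate(data: bytes, block_size: int = 4096) -> tuple[dict[str, bytes], list[str]]:
    """Split data into fixed-size blocks and keep one copy of each unique block.

    Returns (store, block_map): store maps a SHA-256 digest to the block contents,
    and block_map lists the digest of every block in its original order.
    """
    store: dict[str, bytes] = {}
    block_map: list[str] = []
    for offset in range(0, len(data), block_size):
        block = data[offset:offset + block_size]
        digest = hashlib.sha256(block).hexdigest()
        store.setdefault(digest, block)  # keep only the first copy of each block
        block_map.append(digest)
    return store, block_map


def rehydrate(store: dict[str, bytes], block_map: list[str]) -> bytes:
    """Rebuild the original data from the unique blocks and the block map."""
    return b"".join(store[d] for d in block_map)


if __name__ == "__main__":
    # Two hypothetical VMs built from the same OS image blocks plus one unique block each
    os_image = b"A" * 4096 + b"B" * 4096
    data = os_image + b"C" * 4096 + os_image + b"D" * 4096
    store, block_map = deduplicate(data)
    print(len(block_map), "logical blocks,", len(store), "unique blocks stored")
    # prints "6 logical blocks, 4 unique blocks stored"
    assert rehydrate(store, block_map) == data
```

VMs cloned from the same template share most of their OS blocks, which is why deduplication tends to save the most space on datastores full of similar VMs.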

- Choose the fastest Ethernet or FC storage adaptor your budget allows

- Enable Storage I/O Control

- VMware highly recommend that customers implement “single-initiator, multiple storage target” zones. This design offers an ideal balance of simplicity and availability with FC and FCoE deployments.

- Whenever possible, configure storage networks as a single, non-routed network. This helps ensure performance and provides a layer of data security.

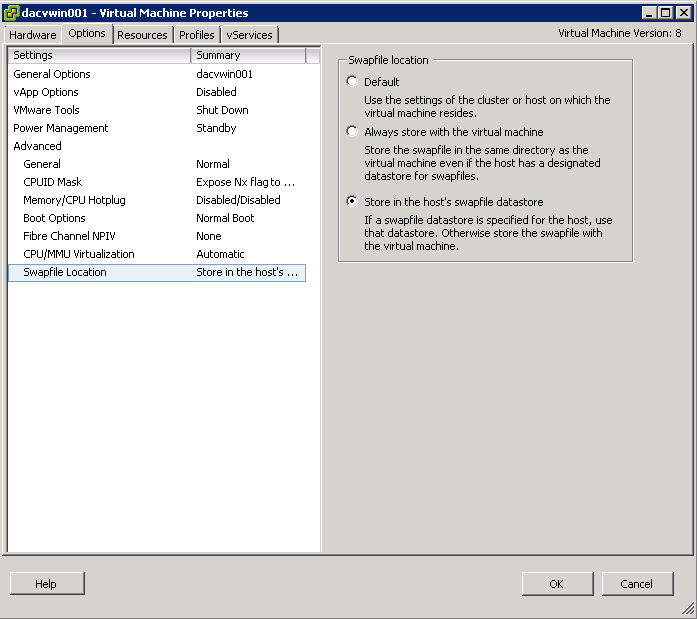

- Each VM's guest OS creates a swap file or pagefile that is typically 1.5 to 2 times the memory configured for the VM. Because this data is transient, you can save a fair amount of storage and/or bandwidth capacity by moving it off the datastore that contains the production data. To do this, relocate the VM's swap file or pagefile to a second virtual disk stored in a separate datastore.
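To estimate how much transient data this moves off the production datastores, apply the 1.5x to 2x rule to the memory configured for each VM. The estate below is hypothetical.

```python
def pagefile_range_gb(vm_memory_gb: list[float]) -> tuple[float, float]:
    """Total guest pagefile capacity at 1.5x and 2x each VM's configured memory."""
    total_memory = sum(vm_memory_gb)
    return 1.5 * total_memory, 2.0 * total_memory


if __name__ == "__main__":
    # Hypothetical estate: 40 VMs with 4 GB and 10 VMs with 8 GB of memory
    estate = [4.0] * 40 + [8.0] * 10
    low, high = pagefile_range_gb(estate)
    print(f"{low:.0f} GB to {high:.0f} GB of transient pagefile data")
    # prints "360 GB to 480 GB of transient pagefile data"
```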

- NetApp, VMware, other storage vendors and VMware partners all recommend aligning the partitions of VMs and of VMFS datastores to the blocks of the underlying storage array. Each vendor's documentation covers VMFS and guest OS (GOS) file system alignment in more detail

- Failure to align the file systems significantly increases storage array I/O, because a single misaligned guest I/O can span two array blocks and the array must do extra work to meet the I/O requirements of the hosted VMs.
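The extra I/O from misalignment can be shown by counting how many array blocks a single guest I/O touches. This sketch assumes 512-byte sectors and a 4 KB array block size; the real block size depends on the array, so check the vendor's documentation. The 63-sector start is the legacy MBR default used by older guests such as Windows Server 2003, and 2048 sectors corresponds to a 1 MiB offset.

```python
SECTOR_SIZE = 512  # bytes per logical sector (assumption)


def blocks_touched(offset: int, io_size: int, array_block: int) -> int:
    """Number of array blocks spanned by one I/O of io_size bytes at a byte offset."""
    first = offset // array_block
    last = (offset + io_size - 1) // array_block
    return last - first + 1


def is_aligned(start_sector: int, array_block: int = 4096) -> bool:
    """True if a partition starting at start_sector begins on an array block boundary."""
    return (start_sector * SECTOR_SIZE) % array_block == 0


if __name__ == "__main__":
    # First 4 KB guest I/O at the start of the partition
    for start in (63, 2048):
        offset = start * SECTOR_SIZE
        print(start, is_aligned(start), blocks_touched(offset, 4096, 4096))
    # prints "63 False 2" then "2048 True 1"
```

Every 4 KB I/O in the misaligned partition touches two array blocks instead of one, which is the "significant increase in storage array I/O" described above.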

- Consider using Storage DRS (SDRS)

- Turn on Storage I/O Control (SIOC) to enforce disk shares globally across all hosts accessing a datastore

- Make sure your multipathing policy is correct: Active/Active arrays typically use Fixed, Active/Passive arrays use Most Recently Used (MRU), and ALUA arrays should use the path selection policy recommended by the array vendor

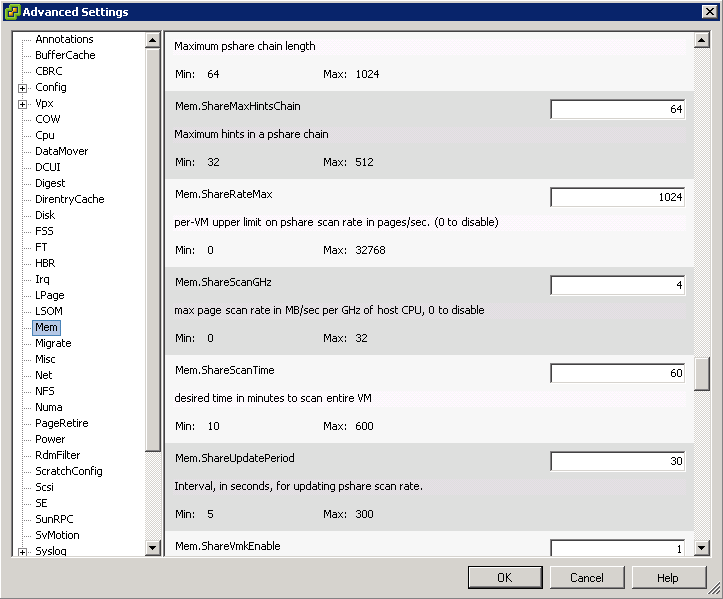

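- If required, increase the queue depth from the default of 32 to 64: raise the HBA LUN queue depth in the HBA driver settings, and set the advanced parameter Disk.SchedNumReqOutstanding to 64 on each host (for example, through vCenter)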
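Raising only one of the two settings may not help. The sketch below is a simplified model, assuming the ESX/ESXi behaviour where a single active VM on a LUN is limited by the HBA LUN queue depth, while several VMs sharing the LUN are limited by Disk.SchedNumReqOutstanding. Little's law then turns the outstanding I/O count into an idealised IOPS ceiling; the 5 ms latency is a hypothetical figure and real throughput also depends on the array.

```python
def effective_lun_queue_depth(hba_lun_queue_depth: int, sched_num_req_outstanding: int,
                              active_vms_on_lun: int) -> int:
    """Outstanding I/Os a host can issue to one LUN (simplified model).

    One active VM: the HBA LUN queue depth applies.
    Several active VMs: the lower of the HBA depth and Disk.SchedNumReqOutstanding applies.
    """
    if active_vms_on_lun <= 1:
        return hba_lun_queue_depth
    return min(hba_lun_queue_depth, sched_num_req_outstanding)


def max_iops(outstanding_ios: int, latency_ms: float) -> float:
    """Little's law upper bound: throughput = outstanding I/Os / latency."""
    return outstanding_ios * 1000 / latency_ms


if __name__ == "__main__":
    # HBA depth raised to 64, with Disk.SchedNumReqOutstanding left at 32 or raised to 64
    for hba_qd, dsnro in ((64, 32), (64, 64)):
        depth = effective_lun_queue_depth(hba_qd, dsnro, active_vms_on_lun=5)
        print(hba_qd, dsnro, depth, max_iops(depth, latency_ms=5.0))
    # prints "64 32 32 6400.0" then "64 64 64 12800.0"
```

In this model, raising the HBA queue depth to 64 while leaving Disk.SchedNumReqOutstanding at 32 still caps a shared LUN at 32 outstanding I/Os, which is why the two values are raised together.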

- VMFS and RDM are both good for random reads/writes

- VMFS and RDM are also good for sequential reads/writes with small I/O block sizes

- VMFS is best for sequential reads/writes with larger I/O block sizes