What does NPIV stand for?

NPIV stands for N_Port ID Virtualization.

What is an N_Port?

An N_Port is an end node port on the Fibre Channel fabric. This could be an HBA (Host Bus Adapter) in a server or a target port on a storage array.

What is NPIV?

N_Port ID Virtualization (NPIV) is a Fibre Channel facility that allows multiple N_Port IDs to share a single physical N_Port. Multiple Fibre Channel initiators can therefore occupy a single physical port, which eases hardware requirements in Storage Area Network design, especially where virtual SANs are called for. NPIV is defined by Technical Committee T11 in the Fibre Channel – Link Services (FC-LS) specification.

NPIV allows a single host bus adapter (HBA) or target port on a storage array to register multiple World Wide Port Names (WWPNs) and N_Port IDs. Each virtual server can then present a different World Wide Name to the storage area network (SAN), which in turn means that each virtual server sees its own storage but no other virtual server’s storage.
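The idea of several logins sharing one physical port can be sketched as a toy model. This is only an illustration: `PhysicalNPort`, `ToyFabric`, the WWPN values, and the way IDs are numbered are all made up for the example, and real switches choose N_Port IDs in their own way.

```python
from dataclasses import dataclass, field


@dataclass
class PhysicalNPort:
    """Toy model of one physical N_Port that can carry several logins."""
    name: str
    logins: dict = field(default_factory=dict)  # WWPN -> N_Port ID


class ToyFabric:
    """Hands out a distinct 24-bit N_Port ID for every WWPN that logs in."""

    def __init__(self, domain=0x01):
        self.domain = domain
        self._next = 0

    def login(self, port, wwpn):
        # A WWPN that is already logged in keeps its existing ID.
        if wwpn in port.logins:
            return port.logins[wwpn]
        # Illustrative ID layout only (assumption); not a real switch policy.
        nport_id = (self.domain << 16) | self._next
        self._next += 1
        port.logins[wwpn] = nport_id
        return nport_id


fabric = ToyFabric()
hba = PhysicalNPort("hba0")
ids = [fabric.login(hba, wwpn) for wwpn in (
    "21:00:00:00:00:00:00:01",  # the physical port's own WWPN (made up)
    "28:00:00:00:00:00:00:0a",  # virtual WWPN for one VM (made up)
    "28:00:00:00:00:00:00:0b",  # virtual WWPN for another VM (made up)
)]
```

After the three logins, `hba.logins` holds three WWPNs with three different N_Port IDs, all behind the same physical port.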

How NPIV-Based LUN Access Works

NPIV enables a single FC HBA port to register several unique WWNs with the fabric, each of which can be assigned to an individual virtual machine.

SAN objects, such as switches, HBAs, storage devices, or virtual machines, can be assigned World Wide Name (WWN) identifiers. WWNs uniquely identify such objects in the Fibre Channel fabric. When virtual machines have WWN assignments, they use them for all raw device mapping (RDM) traffic, so the LUNs pointed to by any of the RDMs on the virtual machine must not be masked against its WWNs. When virtual machines do not have WWN assignments, they access storage LUNs with the WWNs of their host’s physical HBAs. By using NPIV, however, a SAN administrator can monitor and route storage access on a per-virtual-machine basis. The following section describes how this works.

When a virtual machine has a WWN assigned to it, the virtual machine’s configuration file (.vmx) is updated to include a WWN pair, consisting of a World Wide Port Name (WWPN) and a World Wide Node Name (WWNN). As that virtual machine is powered on, the VMkernel instantiates a virtual port (VPORT) on the physical HBA, which is used to access the LUN. The VPORT is a virtual HBA that appears to the FC fabric as a physical HBA; that is, it has its own unique identifier, the WWN pair that was assigned to the virtual machine. Each VPORT is specific to the virtual machine. When the virtual machine is powered off, the VPORT is destroyed on the host and no longer appears to the FC fabric. When a virtual machine is migrated from one ESX/ESXi host to another, the VPORT is closed on the first host and opened on the destination host.
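The VPORT lifecycle described above (created at power-on, destroyed at power-off, moved on migration) can be sketched as a small state model. `ToyHost`, `WwnPair`, and `migrate` are hypothetical names for this illustration and are not part of any VMware API; the WWN values are made up.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class WwnPair:
    """WWNN/WWPN pair stored with the virtual machine (values are made up)."""
    wwnn: str
    wwpn: str


class ToyHost:
    """Hypothetical stand-in for an ESX/ESXi host; not a VMware API."""

    def __init__(self, name):
        self.name = name
        self.vports = {}  # VM name -> WwnPair of its open VPORT

    def power_on(self, vm_name, pair):
        # Powering on instantiates a VPORT that carries the VM's WWN pair.
        self.vports[vm_name] = pair

    def power_off(self, vm_name):
        # Powering off destroys the VPORT, so the fabric no longer sees it.
        self.vports.pop(vm_name, None)


def migrate(vm_name, source, destination):
    """Close the VPORT on the source host and open it on the destination."""
    pair = source.vports.pop(vm_name)
    destination.power_on(vm_name, pair)


esx1, esx2 = ToyHost("esx1"), ToyHost("esx2")
pair = WwnPair(wwnn="28:00:00:00:00:00:00:01", wwpn="28:00:00:00:00:00:00:02")
esx1.power_on("vm-a", pair)
migrate("vm-a", esx1, esx2)
```

The point of the sketch is that the WWN pair belongs to the virtual machine, not to the host: after `migrate`, the same pair is visible on `esx2` and gone from `esx1`.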

If NPIV is enabled, WWN pairs (WWPN and WWNN) are specified for each virtual machine at creation time. When a virtual machine using NPIV is powered on, it uses each of these WWN pairs in sequence to try to discover an access path to the storage. The number of VPORTs that are instantiated equals the number of physical HBAs present on the host. A VPORT is created on each physical HBA on which a physical path is found. Each physical path is used to determine the virtual path that will be used to access the LUN. Note that HBAs that are not NPIV-aware are skipped in this discovery process because VPORTs cannot be instantiated on them.
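As a rough sketch of that discovery pass, the following walks the host's HBAs in order, skips any that are not NPIV-aware or have no physical path to the LUN, and pairs each remaining HBA with the next WWN pair. The `Hba` type, the adapter names, and the one-pair-per-HBA matching rule are assumptions made for illustration; the actual VMkernel logic is not spelled out here.

```python
from dataclasses import dataclass


@dataclass
class Hba:
    """Hypothetical description of one physical HBA on the host."""
    name: str
    npiv_aware: bool
    has_path_to_lun: bool


def instantiate_vports(hbas, wwn_pairs):
    """Return (HBA name, WWN pair) for every VPORT the toy model creates."""
    vports = []
    pairs = iter(wwn_pairs)
    for hba in hbas:
        if not hba.npiv_aware:
            continue  # VPORTs cannot be instantiated on this HBA
        if not hba.has_path_to_lun:
            continue  # no physical path was found through this HBA
        pair = next(pairs, None)
        if pair is None:
            break  # no WWN pairs left to assign (assumed behavior)
        vports.append((hba.name, pair))
    return vports


hbas = [
    Hba("vmhba1", npiv_aware=True, has_path_to_lun=True),
    Hba("vmhba2", npiv_aware=False, has_path_to_lun=True),
    Hba("vmhba3", npiv_aware=True, has_path_to_lun=False),
    Hba("vmhba4", npiv_aware=True, has_path_to_lun=True),
]
vports = instantiate_vports(hbas, ["pair-1", "pair-2"])
```

In this example only `vmhba1` and `vmhba4` end up with a VPORT.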

Requirements

- The Fibre Channel switch must support NPIV.

- The HBA must support NPIV.

- Raw device mappings (RDMs) must be used.

- Use HBAs of the same type, either all QLogic or all Emulex. VMware does not support heterogeneous HBAs on the same host accessing the same LUNs.

- If a host uses multiple physical HBAs as paths to the storage, zone all physical paths to the virtual machine. This is required to support multipathing even though only one path at a time will be active.

- Make sure that physical HBAs on the host have access to all LUNs that are to be accessed by NPIV-enabled virtual machines running on that host.

- When configuring a LUN for NPIV access at the storage level, make sure that the NPIV LUN number and NPIV target ID match the physical LUN and target ID.

- Keep the RDM on the same datastore as the VM configuration file.
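Several of the requirements above lend themselves to a simple pre-flight check. The sketch below is one possible way to encode them; `NpivPlan` and `check_plan` are hypothetical names, and the facts they take as input would have to be gathered by hand or by whatever inventory tooling is available.

```python
from dataclasses import dataclass


@dataclass
class NpivPlan:
    """Hypothetical summary of a planned NPIV setup for one virtual machine."""
    switch_supports_npiv: bool
    hbas_support_npiv: bool
    hba_vendors: list  # one entry per physical HBA, e.g. "QLogic"
    uses_rdm: bool
    rdm_datastore: str
    vmx_datastore: str


def check_plan(plan):
    """Return a list of problems found; an empty list means none were found."""
    problems = []
    if not plan.switch_supports_npiv:
        problems.append("FC switch does not support NPIV")
    if not plan.hbas_support_npiv:
        problems.append("HBA does not support NPIV")
    if not plan.uses_rdm:
        problems.append("virtual machine does not use an RDM")
    if len(set(plan.hba_vendors)) > 1:
        problems.append("HBAs are not all of the same type")
    if plan.rdm_datastore != plan.vmx_datastore:
        problems.append("RDM and VM configuration file are on different datastores")
    return problems


good = NpivPlan(True, True, ["Emulex", "Emulex"], True, "ds1", "ds1")
mixed = NpivPlan(True, True, ["Emulex", "QLogic"], True, "ds1", "ds2")
```

Here `check_plan(good)` returns an empty list, while `check_plan(mixed)` reports the mixed HBA types and the datastore mismatch. Zoning and LUN masking still have to be verified on the switch and the array.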

NPIV Capabilities

- NPIV supports vMotion. When you use vMotion to migrate a virtual machine, it retains the assigned WWN.

- If you migrate an NPIV-enabled virtual machine to a host that does not support NPIV, VMkernel reverts to using a physical HBA to route the I/O.

- If your FC SAN environment supports concurrent I/O on the disks from an active-active array, the concurrent I/O to two different NPIV ports is also supported.

NPIV Limitations

- Because NPIV is an extension to the FC protocol, it requires an FC switch and does not work with direct-attached FC disks.

- When you clone a virtual machine or template with a WWN assigned to it, the clones do not retain the WWN.

- NPIV does not support Storage vMotion.

- Disabling and then re-enabling the NPIV capability on an FC switch while virtual machines are running can cause an FC link to fail and I/O to stop.

Assign WWNs to Virtual Machines

You can create from 1 to 16 WWN pairs, which can be mapped to the first 1 to 16 physical HBAs on the host.

- Open the New Virtual Machine wizard.

- Select Custom, and click Next.

- Follow all steps required to create a custom virtual machine.

- On the Select a Disk page, select Raw Device Mapping, and click Next.

- From a list of SAN disks or LUNs, select a raw LUN you want your virtual machine to access directly.

- Select a datastore for the RDM mapping file.

- You can place the RDM file on the same datastore where your virtual machine files reside, or select a different datastore.

Note: If you want to use vMotion for an NPIV-enabled virtual machine, make sure that the RDM file is located on the same datastore where the virtual machine configuration file resides.

- Follow the steps required to create a virtual machine with the RDM.

- On the Ready to Complete page, select the Edit the virtual machine settings before completion check box and click Continue.

- The Virtual Machine Properties dialog box opens.

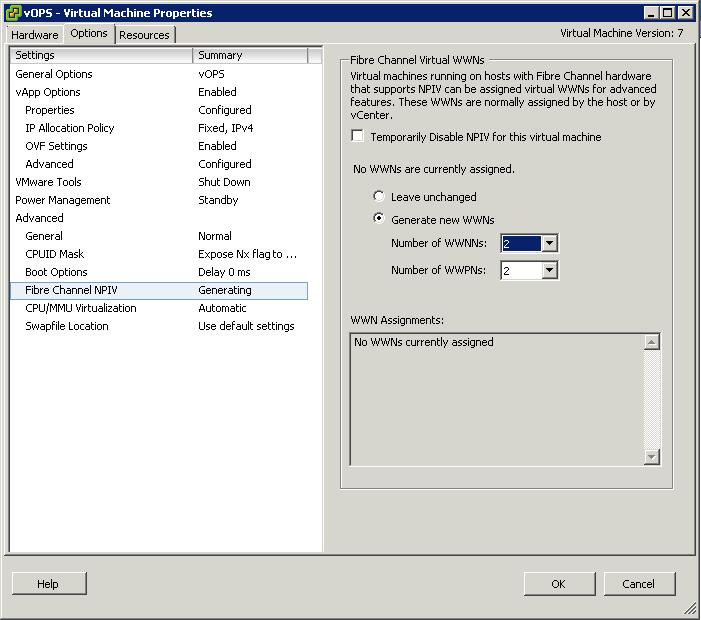

- Click the Options tab, and select Fibre Channel NPIV.

- (Optional) Select the Temporarily Disable NPIV for this virtual machine check box.

- Select Generate new WWNs.

- Specify the number of WWNNs and WWPNs.

- A minimum of 2 WWPNs is needed to support failover with NPIV. Typically only 1 WWNN is created for each virtual machine.

- Click Finish.

- The host creates WWN assignments for the virtual machine.
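The numeric limits mentioned in this procedure can be checked before you open the wizard. The helper below is a hypothetical sketch: `check_wwn_counts` is not a VMware function, and it assumes that the 1 to 16 limit applies to the number of WWPNs, each paired with the virtual machine's node name.

```python
MAX_WWN_PAIRS = 16  # upper limit stated for WWN pairs per virtual machine


def check_wwn_counts(wwnn_count, wwpn_count, need_failover=False):
    """Return a list of problems with the requested WWN counts."""
    problems = []
    if wwnn_count < 1:
        problems.append("at least 1 WWNN is required")
    # Assumption: the 1 to 16 pair limit is applied to the WWPN count.
    if not 1 <= wwpn_count <= MAX_WWN_PAIRS:
        problems.append("WWPN count must be between 1 and 16")
    if need_failover and wwpn_count < 2:
        problems.append("failover needs at least 2 WWPNs")
    return problems
```

For example, `check_wwn_counts(1, 1, need_failover=True)` flags the missing second WWPN, while `check_wwn_counts(1, 2, need_failover=True)` returns an empty list.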

What to do next

Register the newly created WWNs in the fabric so that the virtual machine is able to log in to the switch, and assign storage LUNs to the WWNs.

NPIV Advantages

- Granular security: Access to specific storage LUNs can be restricted to specific VMs using the VM WWN for zoning, in the same way that they can be restricted to specific physical servers.

- Easier monitoring and troubleshooting: The same monitoring and troubleshooting tools used with physical servers can now be used with VMs, since the WWN and the fabric address that these tools rely on to track frames are now uniquely associated with a VM.

- Flexible provisioning and upgrade: Since zoning and other services are no longer tied to the physical WWN “hard-wired” to the HBA, it is easier to replace an HBA. You do not have to reconfigure the SAN storage, because the new server can be pre-provisioned independently of the physical HBA WWN.

- Workload mobility: The virtual WWN associated with each VM follows the VM when it is migrated across physical servers. No SAN reconfiguration is necessary when the workload is relocated to a new server.

- Applications identified in the SAN: Since virtualized applications tend to be run on a dedicated VM, the WWN of the VM now identifies the application to the SAN.

- Quality of Service (QoS): Since each VM can be uniquely identified, QoS settings can be extended from the SAN to VMs.
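The granular-security point can be illustrated with a toy LUN masking table keyed by each VM's WWPN. This is a sketch of the concept only: the WWPN values are made up, `can_access` is a hypothetical helper, and real arrays and switches implement masking and zoning through their own management interfaces.

```python
# Toy masking table: WWPN of each VM -> set of LUN numbers it may reach.
masking = {
    "28:00:00:00:00:00:00:0a": {0, 1},  # VM A (made-up WWPN)
    "28:00:00:00:00:00:00:0b": {2},     # VM B (made-up WWPN)
}


def can_access(table, wwpn, lun):
    """Return True only if the LUN is explicitly presented to this WWPN."""
    return lun in table.get(wwpn, set())
```

With this table, VM A can reach LUN 1 but not LUN 2, and a WWPN that is not listed at all reaches nothing. Without NPIV, both VMs would present the host's physical WWPN and could not be told apart this way.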