What is NFS?

An ESXi host can access a designated NFS volume on a NAS (Network Attached Storage) server, mount the volume, and use it for its storage needs. You can use NFS volumes to store and boot virtual machines in the same way that you use VMFS datastores.

NAS stores virtual machine files on remote file servers that are accessed over a standard TCP/IP network. The NFS client built into ESXi uses NFS version 3 to communicate with NAS/NFS servers; ESXi 6.0 and later also include an NFS 4.1 client. For network connectivity, the host requires a standard network adapter.

Mounting

To use NFS as a shared repository, you create a directory on the NFS server and then mount the directory as a datastore on all hosts. If you use the datastore for ISO images, you can connect the virtual machine's CD-ROM device to an ISO file on the datastore and install a guest operating system from the ISO file.

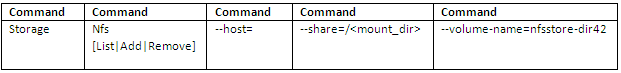

ESXCLI Command Set

Troubleshooting

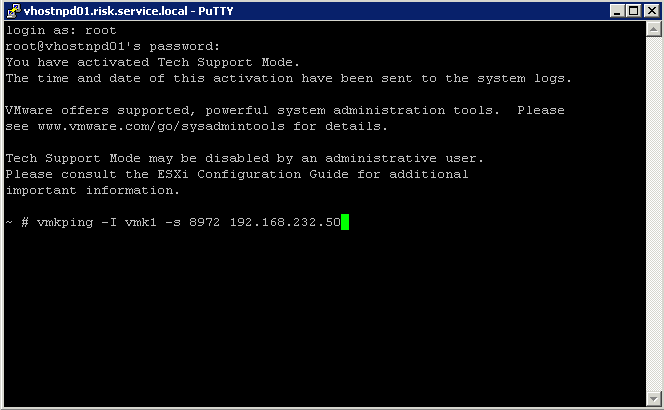

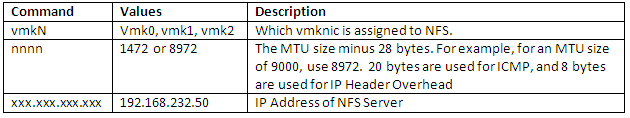

- Check the MTU configured on the port group designated for the NFS VMkernel port. If it is set to anything other than 1500 or 9000, test connectivity with the vmkping command.

- See the table below for an explanation of the command.

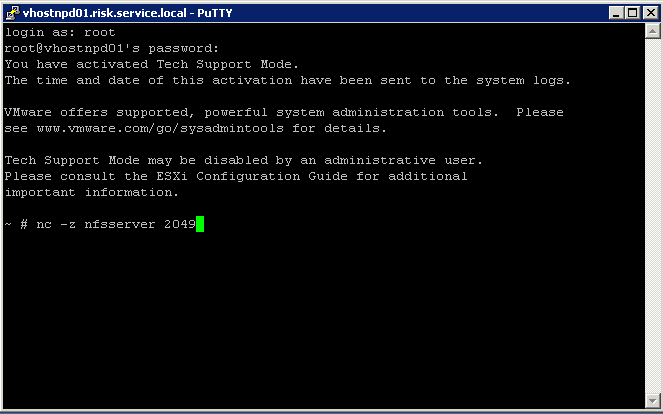
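The payload size given to vmkping has to account for protocol overhead. With IPv4 and no IP options, each ICMP echo request carries a 20-byte IP header and an 8-byte ICMP header, so the largest payload that fits in one unfragmented packet is the MTU minus 28 bytes. The Python sketch below shows that arithmetic and builds a matching command line. It assumes the commonly used vmkping options `-I` (VMkernel interface), `-d` (don't fragment) and `-s` (payload size); the helper names, the interface `vmk1` and the address `192.0.2.10` are hypothetical placeholders.

```python
# Per-packet overhead for an IPv4 ICMP echo request (assumes no IP options).
IPV4_HEADER_BYTES = 20
ICMP_HEADER_BYTES = 8


def vmkping_payload_size(mtu: int) -> int:
    """Largest ICMP payload that fits in one unfragmented IPv4 packet at this MTU."""
    overhead = IPV4_HEADER_BYTES + ICMP_HEADER_BYTES
    if mtu <= overhead:
        raise ValueError(f"MTU {mtu} is too small; it must exceed {overhead} bytes")
    return mtu - overhead


def vmkping_command(vmk: str, mtu: int, target: str) -> str:
    """Build a vmkping command line (hypothetical helper).

    -I selects the VMkernel interface, -d sets the don't-fragment bit,
    and -s sets the ICMP payload size.
    """
    return f"vmkping -I {vmk} -d -s {vmkping_payload_size(mtu)} {target}"


if __name__ == "__main__":
    # Payload sizes for the two standard MTUs.
    for mtu in (1500, 9000):
        print(mtu, vmkping_payload_size(mtu))
    # Placeholder interface and documentation-range IP address.
    print(vmkping_command("vmk1", 9000, "192.0.2.10"))
```

Running the script prints 1472 for MTU 1500 and 8972 for MTU 9000. The don't-fragment flag is what makes the test meaningful: without it, an oversized packet can be fragmented and the ping may succeed even though jumbo frames are not passing end to end.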

- Verify connectivity to the NFS server and ensure that it is reachable through any firewalls.

- Use netcat (nc) on the host to check whether it can reach the nfsd TCP/UDP port (default 2049) on the storage array.

- Verify that the ESXi host can vmkping the NFS server.

- Verify that the virtual switch used for storage is configured correctly.

- Ensure that there are enough available ports on the virtual switch.

- Verify that the storage array is listed in the Hardware Compatibility Guide.

- Verify that the physical hardware functions correctly.

- If the NFS server runs Windows, verify that it is correctly configured for NFS.

- Verify that the NFS server's permissions have not been set to read-only for this ESXi host.

- Verify that the NFS share was not mounted with the read-only option selected.

- Ensure that the NFS server grants this share Anonymous user access, Root Access (no_root_squash), and Read/Write access.

- If you cannot connect to an NFS share, the physical switch port may be misconfigured. In this case, try a different vmnic, or move NICs to Unused/Standby on the NIC Teaming tab of the vSwitch or port group properties.

- If the host cannot resolve the NAS server's name, or the NAS server cannot resolve the host's name, verify the DNS server settings and any host-side name entries.
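Two of the checks above, name resolution and reachability of the nfsd TCP port, can also be scripted. The Python sketch below uses hypothetical helpers, not a VMware tool: it resolves a NAS name forward and then back with the standard `socket` module, and attempts a TCP connection to port 2049, which is roughly what a netcat port probe reports. It checks TCP only, not UDP, and unless it runs on the ESXi host itself it does not exercise the host's VMkernel network path, so it complements rather than replaces the vmkping and nc checks.

```python
import socket

NFS_PORT = 2049  # default nfsd port


def resolve_forward_and_reverse(name: str) -> tuple[str, str]:
    """Resolve a host name to an IPv4 address, then map that address back to a name.

    Raises socket.gaierror or socket.herror if either lookup fails,
    which points at a DNS or hosts-file problem.
    """
    ip = socket.gethostbyname(name)
    reverse_name, _aliases, _addresses = socket.gethostbyaddr(ip)
    return ip, reverse_name


def tcp_port_open(host: str, port: int = NFS_PORT, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within the timeout.

    Resolution failures, refused connections and timeouts are all OSError
    subclasses, so any of them yields False.
    """
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False
```

For example, `tcp_port_open("nas01.example.com")` returns `False` when the name does not resolve or when a firewall rejects or silently drops the connection; `nas01.example.com` is a placeholder name. If `resolve_forward_and_reverse` returns a name that differs from the one the host was configured with, check the DNS and host-side entries described above.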