All file systems that Windows uses to organize the hard disk are based on cluster (allocation unit) size, which represents the smallest amount of disk space that can be allocated to hold a file. The smaller the cluster size, the more efficiently your disk stores information, because less space is left unused in the last, partly filled cluster of each file.
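To make the trade-off concrete, here is a minimal Python sketch (the helper names are hypothetical) that rounds a file up to whole clusters and reports the unused "slack" in its last cluster. It assumes the file's data is stored in ordinary clusters and ignores NTFS details such as very small files being kept inside their MFT record.

```python
def allocated_bytes(file_size: int, cluster_size: int) -> int:
    """Bytes reserved on disk: the file is rounded up to whole clusters."""
    clusters = (file_size + cluster_size - 1) // cluster_size  # ceiling division
    return clusters * cluster_size


def slack_bytes(file_size: int, cluster_size: int) -> int:
    """Unused space left over in the file's last cluster."""
    return allocated_bytes(file_size, cluster_size) - file_size


# Hypothetical 10,000-byte file on the cluster sizes discussed in this article.
for cluster_kb in (4, 32, 64):
    cluster = cluster_kb * 1024
    print(f"{cluster_kb}K clusters: allocates {allocated_bytes(10_000, cluster)} bytes, "
          f"slack {slack_bytes(10_000, cluster)} bytes")
```

For the 10,000-byte example this gives 2,288 bytes of slack with 4K clusters and 55,536 bytes with 64K clusters.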

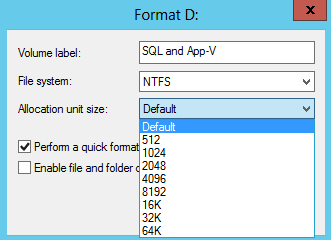

If you do not specify a cluster size for formatting, Windows XP Disk Management bases the cluster size on the size of the volume. Windows XP uses default values if you format a volume as NTFS by either of the following methods:

- By using the format command from the command line without specifying a cluster size.

- By formatting a volume in Disk Management without changing the Allocation Unit Size from Default in the Format dialog box.

The maximum default cluster size under Windows XP is 4 kilobytes (KB) because NTFS file compression is not possible on drives with a larger allocation size. The Format utility never uses clusters that are larger than 4 KB unless you specifically override that default either by using the /A: option for command-line formatting or by specifying a larger cluster size in the Format dialog box in Disk Management.
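The rule above can be captured in a small pre-flight check before overriding the default. This is a minimal sketch with hypothetical helper names, not part of any Windows tool; it assumes NTFS cluster sizes of 512 bytes to 64 KB (the XP-era range, which includes the /a: values listed in the procedure) and the 4 KB compression limit stated here.

```python
# Assumption: XP-era NTFS cluster sizes, 512 bytes up to 64 KB (powers of two).
VALID_CLUSTER_SIZES = [512 * 2**i for i in range(8)]  # 512, 1024, ..., 65536
COMPRESSION_LIMIT = 4096  # NTFS compression needs clusters of 4 KB or smaller


def parse_unit_size(text: str) -> int:
    """Convert a value such as '4096', '4K' or '64KB' into bytes."""
    value = text.strip().upper()
    if value.endswith("KB"):
        return int(value[:-2]) * 1024
    if value.endswith("K"):
        return int(value[:-1]) * 1024
    return int(value)


def check_cluster_size(text: str) -> tuple[int, bool]:
    """Return (size_in_bytes, compression_available) or raise ValueError."""
    size = parse_unit_size(text)
    if size not in VALID_CLUSTER_SIZES:
        raise ValueError(f"{text!r} is not a supported NTFS cluster size")
    return size, size <= COMPRESSION_LIMIT


print(check_cluster_size("4K"))   # (4096, True)
print(check_cluster_size("64k"))  # (65536, False)
```

The check only mirrors the constraints described in this article; `format` itself remains the authority on which values it accepts.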

What’s the difference between doing a Quick Format and a Full Format?

http://support.microsoft.com/kb/302686

Procedure

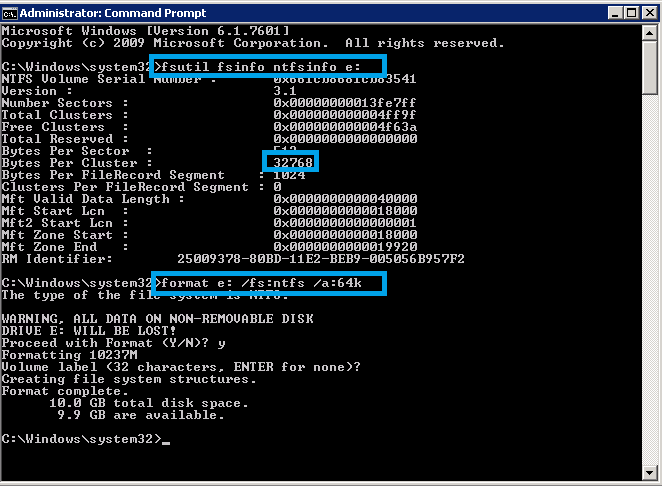

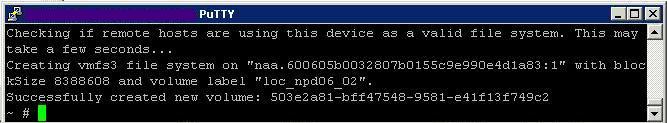

- To check which cluster size a drive already uses, type the following line at a command prompt:

- `fsutil fsinfo ntfsinfo <drive>:`

- You can see that the drive I am using has a cluster size of 32K. Windows drives normally default to 4K.

- Remember that the following procedure reformats the drive and wipes out any data on it.

- Type `format <drive>: /fs:ntfs /a:64k`

- In this command, `<drive>` is the drive you want to format, and `/a:clustersize` is the cluster size you want to assign to the volume: 2K, 4K, 8K, 16K, 32K, or 64K. However, before you override the default cluster size for a volume, be sure to test the proposed change with a benchmarking utility on a non-production machine that closely simulates the intended target.
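If you need to check the cluster size on several machines, the `fsutil` step can be scripted. The sketch below is an assumption-laden example: it expects `fsutil fsinfo ntfsinfo` to print a line of the form `Bytes Per Cluster : 4096`, the sample text is illustrative rather than captured output, and `cluster_size_of` only works on Windows (usually from an elevated prompt).

```python
import re
import subprocess


def parse_bytes_per_cluster(fsutil_output: str) -> int:
    """Extract the 'Bytes Per Cluster' value from fsutil ntfsinfo output."""
    match = re.search(r"Bytes Per Cluster\s*:\s*([\d,]+)", fsutil_output)
    if match is None:
        raise ValueError("no 'Bytes Per Cluster' line found")
    return int(match.group(1).replace(",", ""))


def cluster_size_of(drive: str) -> int:
    """Windows only; drive is something like 'D:'. Raises if fsutil fails."""
    result = subprocess.run(["fsutil", "fsinfo", "ntfsinfo", drive],
                            capture_output=True, text=True, check=True)
    return parse_bytes_per_cluster(result.stdout)


# Illustrative output for a drive like the 32K one described above.
sample = """Version :                         3.1
Bytes Per Sector  :               512
Bytes Per Cluster :               32768
Bytes Per FileRecord Segment    : 1024"""
print(parse_bytes_per_cluster(sample) // 1024, "K")
```

Parsing is kept separate from running the command so the logic can be checked without a Windows box.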

Other Information

- As a general rule, there's no performance dependency between the I/O size and the NTFS cluster size. The NTFS cluster size affects the size of the file system structures that track where files are on the disk, and it also affects the size of the free-space bitmap. But files themselves are normally stored contiguously, so reading a 1MB file from the disk takes the same effort whether the cluster size is 4K or 64K.

- In one case the file header says "the file starts at sector X and takes 256 clusters", and in the other case the header says "the file starts at sector X and takes 16 clusters". For a given I/O size, the system performs the same number of reads in either case. For example, if the I/O size is 16K, it takes 64 reads (1MB ÷ 16K) to get all the data, regardless of the cluster size.

- In a heavily fragmented file system the cluster size may start to affect performance, but in that case you should run a disk defragmenter, such as the one built into Windows or Diskeeper.

- On a drive with a lot of file additions, deletions, or extensions, cluster size can have a performance impact because of the number of I/Os required to update the file system metadata (bigger clusters generally mean fewer I/Os). But that's independent of the I/O size used by the application: the I/Os that update the metadata are performed by NTFS itself, not by the application.

- If your hard drive is formatted NTFS, you can't use NTFS compression if you raise the cluster size above 4,096 bytes (4KB).

- Also keep in mind that increasing the cluster size can waste more disk space, because the last cluster of every file is usually only partly filled.
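The arithmetic behind the 1MB example in the list above can be checked with a few lines of Python. This is a minimal sketch with hypothetical helper names; like the text, it assumes the file is stored contiguously, so the cluster count only changes how long the recorded run is, while the number of reads depends solely on the I/O size.

```python
KB = 1024
MB = 1024 * KB


def ceil_div(a: int, b: int) -> int:
    """Integer ceiling division."""
    return -(-a // b)


def clusters_needed(file_size: int, cluster_size: int) -> int:
    """Length of the single contiguous run recorded for the file."""
    return ceil_div(file_size, cluster_size)


def reads_needed(file_size: int, io_size: int) -> int:
    """Reads needed to fetch a contiguous file; cluster size is not an input."""
    return ceil_div(file_size, io_size)


for cluster in (4 * KB, 64 * KB):
    print(f"{cluster // KB}K clusters: {clusters_needed(MB, cluster)} clusters, "
          f"{reads_needed(MB, 16 * KB)} reads with 16K I/O")
```

Both lines report 64 reads; only the cluster count (256 versus 16) differs.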

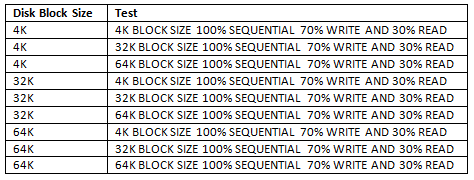

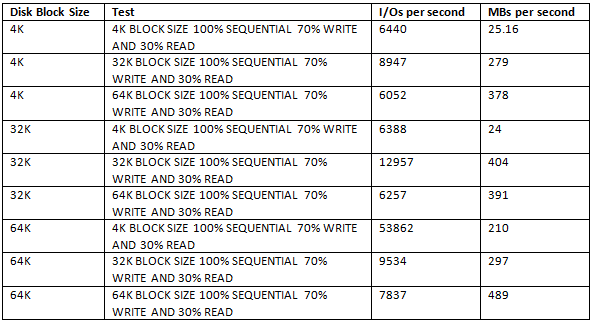

Iometer Testing on different Block Sizes

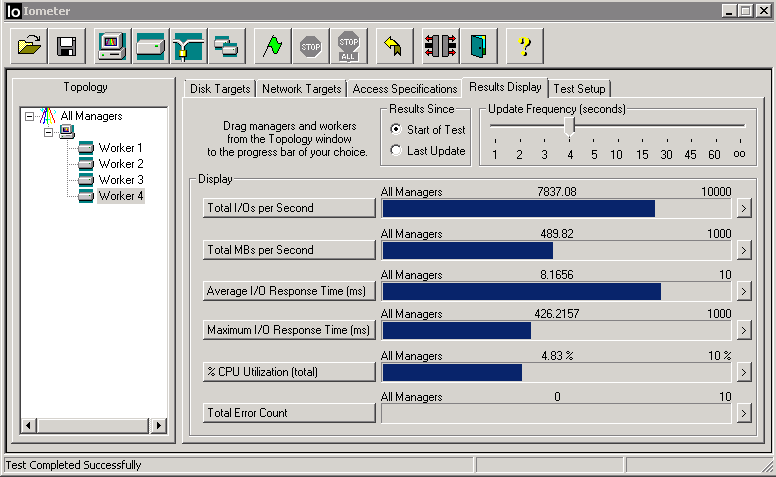

The following 9 tests were carried out on one Windows Server 2008 R2 server (4 vCPUs and 4GB RAM), which is used to page insurance modelling data onto a D: drive located on the local disk of a VMware host server. The disk is an IBM 300GB 10K 6Gbps SAS 2.5” SFF Slim-HS HDD.
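The 9 tests form a simple grid: three NTFS cluster sizes on the D: drive crossed with three Iometer transfer sizes, all using the same access pattern. A minimal Python sketch of that test matrix, assuming the 4K/32K/64K values and access pattern listed in the results below (reformatting the drive between cluster sizes is still a manual step):

```python
from itertools import product

CLUSTER_SIZES_KB = (4, 32, 64)   # NTFS allocation unit the D: drive is formatted with
TRANSFER_SIZES_KB = (4, 32, 64)  # Iometer Transfer Request Size
ACCESS = "100% sequential, 70% write / 30% read"

# One entry per cluster/transfer combination, in the order the tests were run.
tests = [
    f"{cluster}K cluster size on disk, {transfer}K transfer size, {ACCESS}"
    for cluster, transfer in product(CLUSTER_SIZES_KB, TRANSFER_SIZES_KB)
]

for number, description in enumerate(tests, start=1):
    print(f"Test {number}: {description}")
```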

The Tests

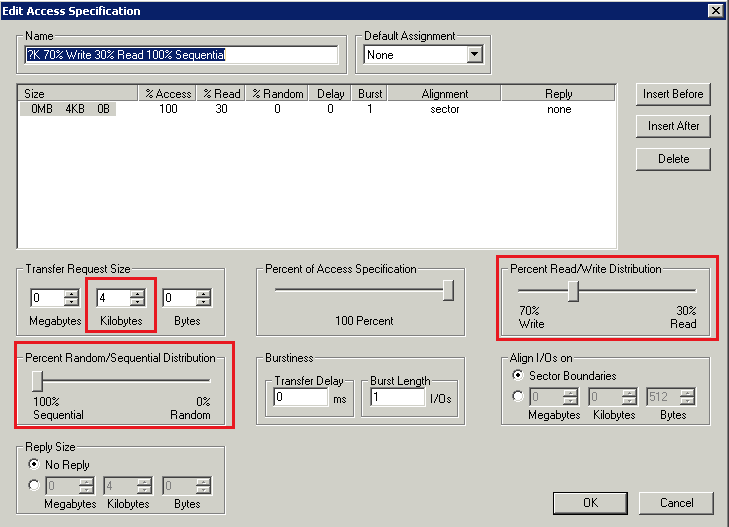

The Testing Spec in Iometer

Only the block size was adjusted between runs; in the spec below it is the Transfer Request Size.

Testing and Results

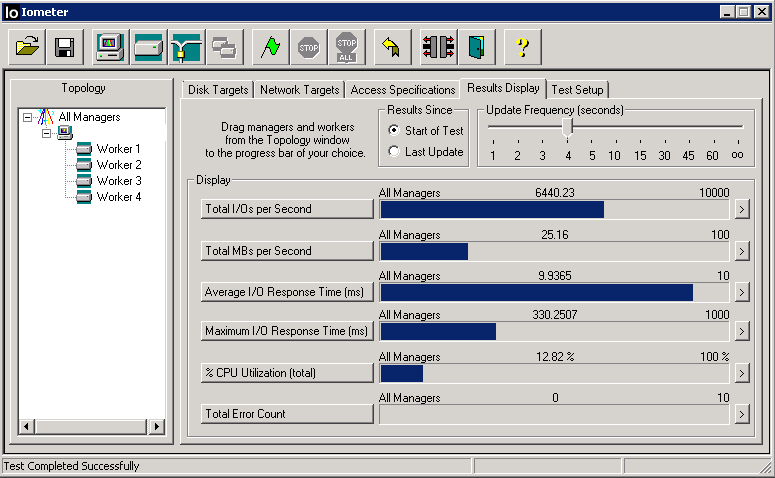

- 4K Block Size on Disk

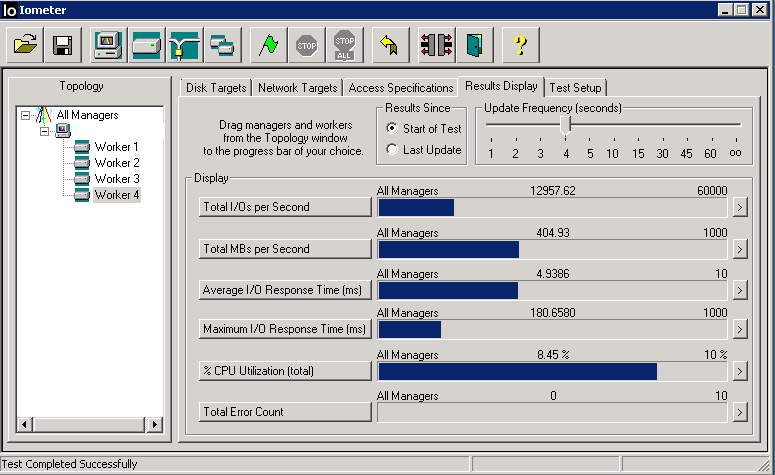

- 4K BLOCK SIZE 100% SEQUENTIAL 70% WRITE AND 30% READ

- 4K Block Size on Disk

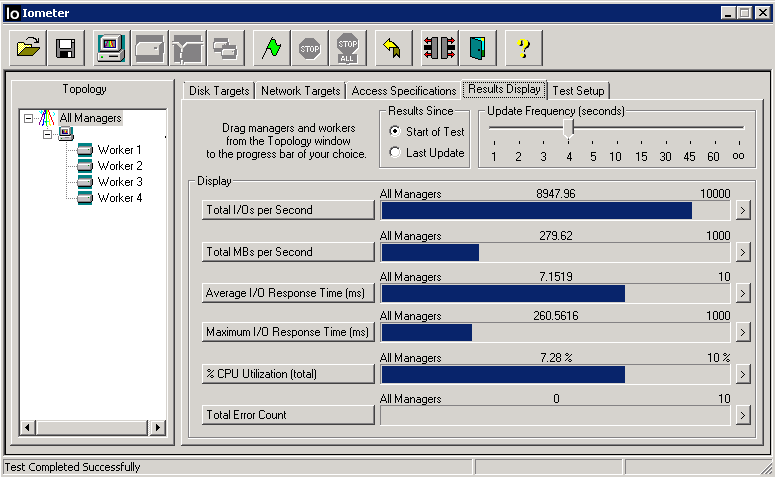

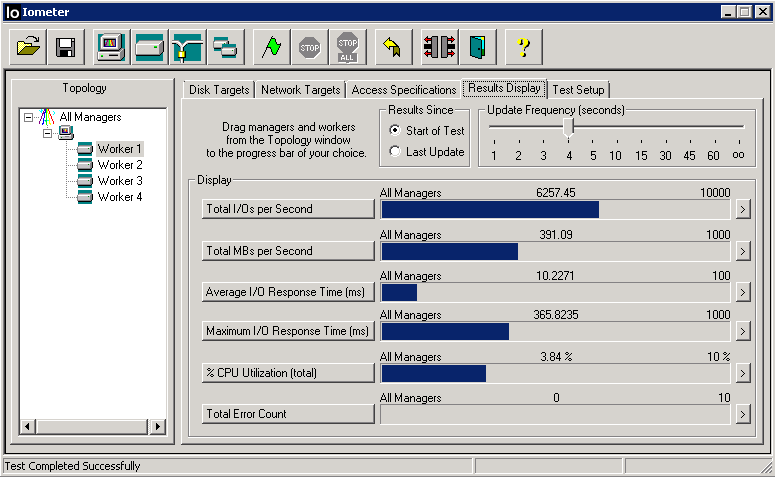

- 32K BLOCK SIZE 100% SEQUENTIAL 70% WRITE AND 30% READ

- 4K Block Size on Disk

- 64K BLOCK SIZE 100% SEQUENTIAL 70% WRITE AND 30% READ

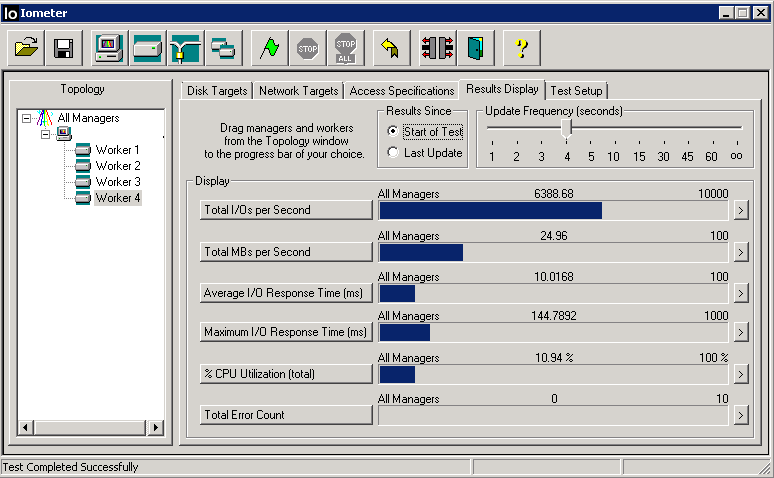

- 32K Block Size on Disk

- 4K BLOCK SIZE 100% SEQUENTIAL 70% WRITE AND 30% READ

- 32K Block Size on Disk

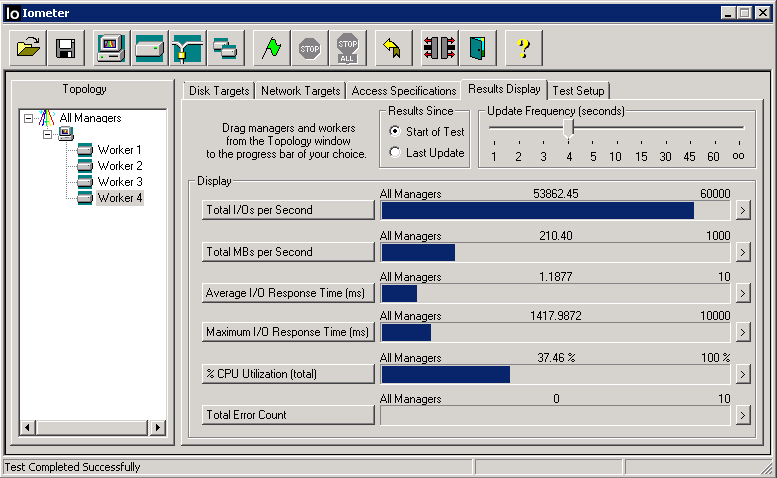

- 32K BLOCK SIZE 100% SEQUENTIAL 70% WRITE AND 30% READ

- 32K Block Size on Disk

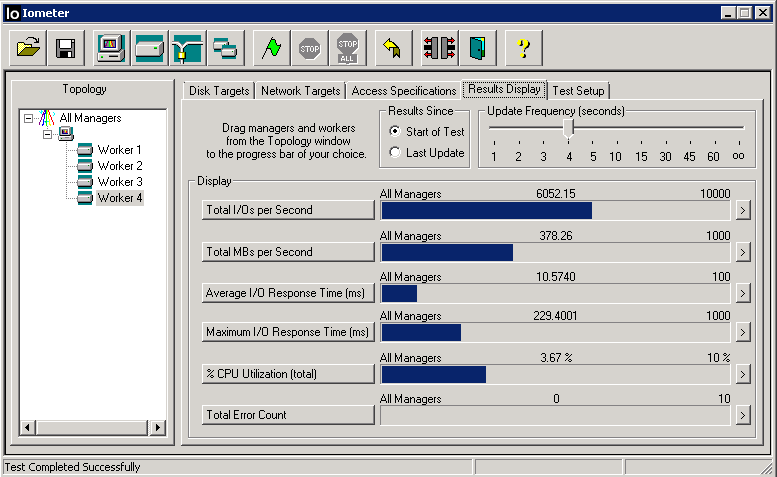

- 64K BLOCK SIZE 100% SEQUENTIAL 70% WRITE AND 30% READ

- 64K Block Size on Disk

- 4K BLOCK SIZE 100% SEQUENTIAL 70% WRITE AND 30% READ

- 64K Block Size on Disk

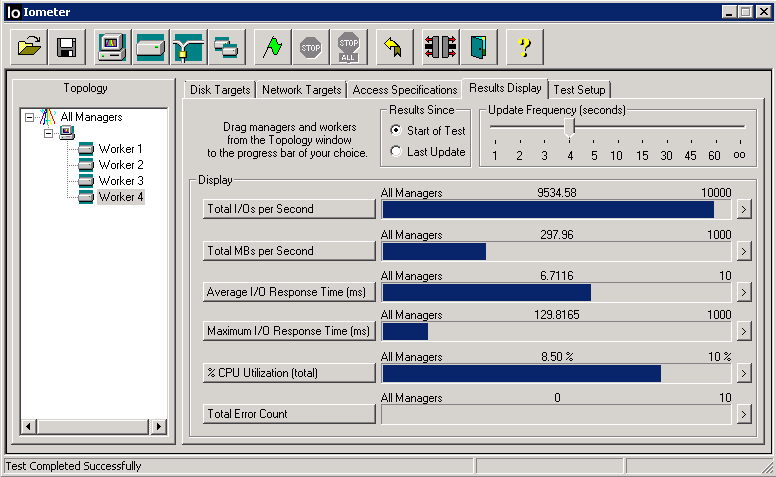

- 32K BLOCK SIZE 100% SEQUENTIAL 70% WRITE AND 30% READ

- 64K Block Size on Disk

- 64K BLOCK SIZE 100% SEQUENTIAL 70% WRITE AND 30% READ

The Results

The best approach seems to be to match the expected I/O size to the disk block (cluster) size in order to get the best results, for example 32K workloads with a 32K block size and 64K workloads with a 64K block size.
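That rule of thumb can be written as a tiny helper. This is a hypothetical sketch of the conclusion from these tests, not general guidance: it picks the largest of the /a: sizes listed in the procedure that does not exceed the workload's expected I/O size.

```python
ALLOWED_CLUSTERS_KB = (2, 4, 8, 16, 32, 64)  # /a: values listed in the procedure


def suggest_cluster_kb(expected_io_kb: int) -> int:
    """Largest allowed cluster size (KB) that does not exceed the expected I/O size."""
    candidates = [c for c in ALLOWED_CLUSTERS_KB if c <= expected_io_kb]
    return max(candidates) if candidates else min(ALLOWED_CLUSTERS_KB)


for io_kb in (4, 32, 48, 64, 128):
    print(f"{io_kb}K workload -> {suggest_cluster_kb(io_kb)}K clusters")
```

Any suggestion above 4K gives up NTFS compression and increases slack space, so it should still be benchmarked on a non-production machine first.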

Fujitsu Paper (Worth a read)

https://sp.ts.fujitsu.com/dmsp/Publications/public/wp-basics-of-disk-io-performance-ww-en.pdf