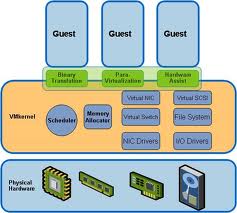

Memory Hardening – The ESX/ESXi kernel, user-mode applications, and executable components such as drivers and libraries are located at random, non-predictable memory addresses. Combined with the nonexecutable memory protections made available by microprocessors, this provides protection that makes it difficult for malicious code to use memory exploits to take advantage of vulnerabilities.

Kernel Module Integrity – Digital signing ensures the integrity and authenticity of modules, drivers and applications as they are loaded by the VMkernel. Module signing allows ESXi to identify the providers of modules, drivers, or applications and whether they are VMware-certified. Trusted Platform Module (ESXi ONLY and BIOS enabled) – This module is a hardware element that represents the core of trust for a hardware platform and enables attestation of the boot process, as well as cryptographic key storage and protection. Each time ESXi boots, TPM measures the VMkernel with which ESXi booted in one of its Platform Configuration Registers (PCRs). TPM measurements are propagated to vCenter Server when the host is added to the vCenter Server system

Archive for VMware

How is the VMKernel secured?

Ingress and Egress Traffic Shaping

The terms Ingress source and Egress source are with respect to the VDS.

For example:

Ingress – When you want to monitor the traffic that is going out of a virtual machine towards the VDS, it is called Ingress Source traffic. The traffic seeks ingress to the VDS and hence the source is called Ingress.

Egress – When you want to monitor the traffic that is going out of the VDS towards the VM, it is called Egress Source traffic.

Traffic Shaping concepts:

Average Bandwidth: Kbits/sec

Target traffic rate cap that the switch tries to enforce. Every time a client uses less than the defined Average Bandwidth, credit builds up.

Peak Bandwidth: Kbits/sec

Extra bandwidth available, above the Average Bandwidth, for a short burst. The availability of the burst depends on credit accumulated so far.

Burst Size: Kbytes

Amount of traffic that can be transmitted or received at Peak speed. Combining Peak Bandwidth and Burst Size, you can calculate the maximum allowed time for the burst.
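As a rough illustration of that calculation, the maximum burst duration can be worked out from the two settings. The sketch below is a minimal Python example, not a VMware tool: the function name is hypothetical, and it assumes 1 Kbyte equals 8 Kbits (the same "kilo" multiplier on both units), which may not match exactly how a given switch rounds these values.

```python
def max_burst_seconds(burst_size_kbytes: float, peak_bandwidth_kbps: float) -> float:
    """Longest time traffic can run at Peak Bandwidth before Burst Size is used up."""
    if peak_bandwidth_kbps <= 0:
        raise ValueError("peak bandwidth must be positive")
    burst_size_kbits = burst_size_kbytes * 8  # assumption: 1 Kbyte = 8 Kbits
    return burst_size_kbits / peak_bandwidth_kbps


# Illustrative values only: 102400 Kbytes burst size, 204800 Kbits/sec peak bandwidth
print(max_burst_seconds(102400, 204800))  # 4.0
```

With these example values, a burst at full Peak Bandwidth could last at most about four seconds, and only if enough credit has been accumulated.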

Traffic Shaping on VSS and VDS

VSS

Traffic Shaping can be applied to a vNetwork Standard Switch port group or to the entire vSwitch, for outbound traffic only.

VDS

Traffic Shaping can be applied to a vNetwork Distributed Switch dvPort or to the entire dvPort Group, for both inbound and outbound traffic.

Network Time Sync for VMware ESXi Hosts

In a virtual infrastructure, network time synchronization is critical to keep servers on the same schedule as the services they rely on. For VMware ESXi hosts, you can implement Network Time Protocol (NTP) synchronization using the vSphere Client.


There are many reasons you should synchronize time for ESXi hosts. If they are integrated with Active Directory, for instance, you need time to be properly synchronized. You also need the time to be consistent when creating and resuming snapshots, because snapshots take point-in-time images of the server state. Luckily, setting up network time synchronization with the vSphere Client is pretty easy.

VMware network time synchronization: A walkthrough

To configure NTP synchronization, select the host, and on the Configuration tab, select Time Configuration under Software. You’ll now see the existing time synchronization status on that host. Next, click Properties. This opens the Time Configuration screen, where you can see the current time on the host. Make sure it’s not too different from the actual time, because a host whose clock is off by more than 1,000 seconds is considered “insane” and won’t synchronize.
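The 1,000-second limit can be expressed as a simple check. This is a minimal Python sketch for illustration only; the function and constant names are hypothetical, and it compares two timestamps you supply rather than querying a real host or NTP server.

```python
from datetime import datetime, timedelta, timezone

# Offset beyond which the host is treated as "insane" (value taken from the text above)
SANITY_LIMIT_SECONDS = 1000


def clock_offset_seconds(host_time: datetime, reference_time: datetime) -> float:
    """Absolute difference between the host clock and a trusted reference clock."""
    return abs((host_time - reference_time).total_seconds())


def can_ntp_sync(host_time: datetime, reference_time: datetime) -> bool:
    """True if the host clock is close enough to the reference for NTP to correct it."""
    return clock_offset_seconds(host_time, reference_time) <= SANITY_LIMIT_SECONDS


# Illustrative timestamps: a host running 20 minutes (1,200 seconds) fast
reference = datetime(2012, 1, 1, 12, 0, 0, tzinfo=timezone.utc)
host = reference + timedelta(minutes=20)
print(can_ntp_sync(host, reference))  # False
```

In that case you would set the host’s time manually first, then enable NTP.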

After you set the local time on the host, select NTP Client Enabled. This activates NTP time synchronization for your host. Reboot the server, then go to Options to make sure NTP has been enabled. This gives you access to the NTP Startup Policy, where you should select “Start and stop with host.”

You’re not done with network time synchronization yet, though. Now, you need to choose NTP servers that your VMware ESXi hosts should synchronize with. Click NTP Settings and you’ll see the current list of NTP servers. By default, it’s empty. Click Add to add the name or address of the NTP server you’d like to use. The interface prompts you for an address, but you can enter a name that can be resolved by DNS as well.

If you’re not sure which NTP server to use for VMware network time synchronization, the Internet NTP servers in pool.ntp.org work well. You only need to choose one server from this group to add to the NTP servers list. If you want to synchronize with an internal or proprietary NTP server, however, you should specify at least two NTP servers.
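The server-count guidance above can be captured in a small helper. This is a hedged Python sketch: the function name and the `internal` flag are hypothetical, and the output uses the `server` directive from standard ntpd configuration syntax purely for illustration. The vSphere Client manages the host’s NTP settings for you, so you would not normally need to write these lines by hand.

```python
def build_ntp_server_lines(servers, internal=False):
    """Return ntpd-style 'server' lines, enforcing the server-count advice above."""
    names = [s.strip() for s in servers if s.strip()]
    if not names:
        raise ValueError("at least one NTP server is required")
    if internal and len(names) < 2:
        # The text recommends at least two servers when using internal sources
        raise ValueError("specify at least two internal NTP servers")
    return "".join(f"server {name}\n" for name in names)


# One public pool entry is enough
print(build_ntp_server_lines(["pool.ntp.org"]), end="")
# Hypothetical internal server names, for illustration only
print(build_ntp_server_lines(["ntp1.example.local", "ntp2.example.local"], internal=True), end="")
```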

At this point, make sure the option to restart the NTP service is selected. Click OK three times to save and apply your changes. From the Configuration screen on your ESXi host, you should now see that the NTP Client is running, along with the list of NTP servers your host is using.

With your ESXi hosts synchronized to the correct time, all the services and events that depend on time will function properly. More importantly, you won’t waste any more time because of misconfigured network time.

Mastering VMware vSphere 5.0

This book has proved invaluable to my understanding of VMware. Highly recommended.

Virtual vCenter – Pros and Cons

Over the years there has been some controversy over this topic. Should vCenter Server be a physical or a virtual machine?

The most important aspect is that both solutions are supported by VMware.

http://www.vmware.com/pdf/vi3_vc_in_vm.pdf

Physical Solution Pros

- More scalable

- Hardware upgrades can be carried out

- It is not susceptible to a potential VI outage

Physical Solution Cons

- A dedicated physical server is required

- Extra Power usage

- Extra cooling considerations

- UPS considerations

- Backup must be done using traditional tools

- DR may be more difficult

Virtual Solution Pros

- You do not need a dedicated physical server (a way to reach a greater consolidation)

- Server Consolidation: instead of dedicating an entire physical server to VirtualCenter, you can run it in a virtual machine along with others on the same ESX Server host.

- Mobility: by encapsulating the VirtualCenter server in a virtual machine, you can transfer it from one host to another, enabling maintenance and other activities.

- Any backup solution that works for a VM also works in this case

- Snapshots: A snapshot of the VirtualCenter virtual machine can be used for backup, archiving, and other similar purposes.

- Availability: using VMware HA, you can provide high availability for the VirtualCenter server

- You can use DRS rules to keep vCenter on certain hosts so you know where it is.

Virtual Solution Cons

- It is susceptible to a potential VI outage

- No cold migration

- No cloning

- It must contend for resources along with other VMs

- If you wish to modify the hardware properties for the VirtualCenter virtual machine, you will need to schedule downtime for VirtualCenter. Then, you will need to connect to the ESX Server host directly with the VI Client, shut down the VirtualCenter virtual machine, and make the modifications.

- Careful consideration and design thinking need to be built into a vSphere environment where a vDS will be used – See below

Virtual vCenter and vDS

VMware has commented specifically on running vCenter within a distributed switch, saying point blank, “it is not supported”. They said, “Because vCenter governs the distributed switch environment, you can’t have vCenter within the distributed switch.”

“If you lose your Virtual Center, you will have no way of moving virtual machines between different port groups on the vNetwork Distributed Switch. In addition, you will not be able to move a virtual machine from the traditional virtual switch to a port group on the vNetwork Distributed Switch. On top of that, you can’t move a VM to another vNetwork Distributed Switch. That means if you are using vSphere vNetwork Distributed Switches and you lose Virtual Center, you are almost disabled on the networking side. If you lose connectivity on the classic virtual switch and your adapters on the distributed switch are OK, you still can’t move your virtual machines to that distributed switch until Virtual Center is back.”

Does this mean a virtual infrastructure design should keep a vSS around? I would say “yes!”. Perhaps it’s now more important to dedicate 2 of the ESX host’s pNICs to the ESX Service Console / ESXi Management VMkernel on an isolated vSS. The 2 pNICs are no longer only for redundancy, but also support one or more standby VM portgroups in case they’re needed as a recovery network for VMs normally using the vDS. Of course, that means creating the appropriate trunking and VLANs ahead of time. Have everything ready for a quick and easy change of critical VMs when needed.

Therefore, a hybrid design using both a vSS and a vDS is a smart “safety net” to have, especially when an admin has to point the vSphere Client directly at an ESX/ESXi host. The “safety net” vSS portgroups will be available from each host, and the VMs can be easily switched via the vSphere Client GUI.

See this useful article by Duncan Epping

http://www.yellow-bricks.com/2012/02/08/distributed-vswitches-and-vcenter-outage-whats-the-deal/

In the event that the worst happens and you lose connectivity

VMware has provided KB article 1010555, which describes how an admin can create a vSS and move the vCenter VM onto this switch.