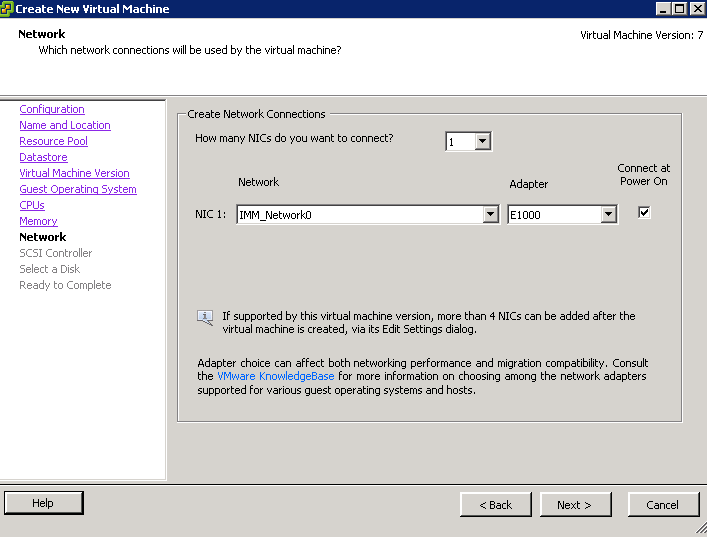

When you create a virtual machine, VMware normally offers several choices of network adapter, depending on the guest operating system you select.

Network Adapter Types

- Vlance – An emulated version of the AMD 79C970 PCnet32-LANCE NIC, an older 10Mbps NIC with drivers available in most 32-bit guest operating systems except Windows Vista and later. A virtual machine configured with this network adapter can use its network immediately.

- VMXNET – The VMXNET virtual network adapter has no physical counterpart. VMXNET is optimized for performance in a virtual machine. Because operating system vendors do not provide built-in drivers for this card, you must install VMware Tools to have a driver for the VMXNET network adapter available.

- Flexible – The Flexible network adapter identifies itself as a Vlance adapter when a virtual machine boots, but initializes itself and functions as either a Vlance or a VMXNET adapter, depending on which driver initializes it. With VMware Tools installed, the VMXNET driver changes the Vlance adapter to the higher performance VMXNET adapter.

- E1000 – An emulated version of the Intel 82545EM Gigabit Ethernet NIC. A driver for this NIC is not included with all guest operating systems. Typically Linux versions 2.4.19 and later, Windows XP Professional x64 Edition and later, and Windows Server 2003 (32-bit) and later include the E1000 driver. Note: E1000 does not support jumbo frames prior to ESX/ESXi 4.1.

- E1000e – Emulates a newer model of Intel Gigabit NIC (the 82574) in the virtual hardware. This is known as the “e1000e” vNIC. e1000e is available only on hardware version 8 (and newer) VMs in vSphere 5. It is the default vNIC for Windows 8 and newer Windows guest OSes. For Linux guests, e1000e is not available from the UI (e1000, flexible vmxnet, enhanced vmxnet, and vmxnet3 are available for Linux).

- VMXNET 2 (Enhanced) – The VMXNET 2 adapter is based on the VMXNET adapter but provides some high-performance features commonly used on modern networks, such as jumbo frames and hardware offloads. This virtual network adapter is available only for some guest operating systems on ESX/ESXi 3.5 and later.

- VMXNET 3 – The VMXNET 3 adapter is the next generation of a paravirtualized NIC designed for performance, and is not related to VMXNET or VMXNET 2. It offers all the features available in VMXNET 2, and adds several new features like multiqueue support (also known as Receive Side Scaling in Windows), IPv6 offloads, and MSI/MSI-X interrupt delivery. VMXNET 3 is supported only for virtual machines version 7 and later, with a limited set of guest operating systems:

- 32- and 64-bit versions of Microsoft Windows XP, 7, 2003, 2003 R2, 2008, and 2008 R2

- 32- and 64-bit versions of Red Hat Enterprise Linux 5.0 and later

- 32- and 64-bit versions of SUSE Linux Enterprise Server 10 and later

- 32- and 64-bit versions of Asianux 3 and later

- 32- and 64-bit versions of Debian 4

- 32- and 64-bit versions of Ubuntu 7.04 and later

- 32- and 64-bit versions of Sun Solaris 10 U4 and later

New Features

- TSO, Jumbo Frames, TCP/IP Checksum Offload

You can enable jumbo frames on a vSphere Distributed Switch or a standard switch by raising the maximum MTU. TSO (TCP Segmentation Offload) is enabled on the VMkernel interface by default but must also be enabled at the VM level. Changing the NIC to VMXNET 3 lets the VM take advantage of this feature.
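As a rough illustration of why a larger MTU helps, the sketch below counts how many frames are needed to carry a fixed amount of TCP data at a standard and a jumbo MTU. The function name `frames_needed` is hypothetical, and the 40-byte header figure assumes IPv4 and TCP headers without options.

```python
import math

# Assumption: 20-byte IPv4 header + 20-byte TCP header, no options.
IP_TCP_HEADER_BYTES = 40


def frames_needed(payload_bytes: int, mtu: int) -> int:
    """Return how many frames carry payload_bytes of TCP data at the given MTU."""
    mss = mtu - IP_TCP_HEADER_BYTES  # TCP payload that fits in one frame
    if mss <= 0:
        raise ValueError("MTU is too small to carry any TCP payload")
    return math.ceil(payload_bytes / mss)


payload = 1_000_000  # one million bytes of application data
print("MTU 1500:", frames_needed(payload, 1500), "frames")
print("MTU 9000:", frames_needed(payload, 9000), "frames")
```

Fewer frames means fewer per-packet operations for the guest and the host, which is where most of the benefit comes from.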

- MSI/MSI-X support (subject to guest operating system kernel support)

A Message Signaled Interrupt (MSI) is a write from the device to a special address, which causes an interrupt to be received by the CPU. The MSI capability was first specified in PCI 2.2 and was later enhanced in PCI 3.0 to allow each interrupt to be masked individually. The MSI-X capability was also introduced with PCI 3.0. It supports more interrupts per device than MSI and allows interrupts to be independently configured.

MSI uses an in-band PCI memory-space message to raise an interrupt, instead of the conventional out-of-band PCI INTx pin. MSI-X is an extension to MSI that supports more vectors: MSI supports at most 32 vectors, while MSI-X supports up to 2048. Using MSI can lower interrupt latency by giving every kind of interrupt its own vector and handler. When the kernel sees the message, it vectors directly to the interrupt service routine associated with the address/data pair. The address/data pair (the vector) is allocated by the system, while the driver must register a handler for the vector.

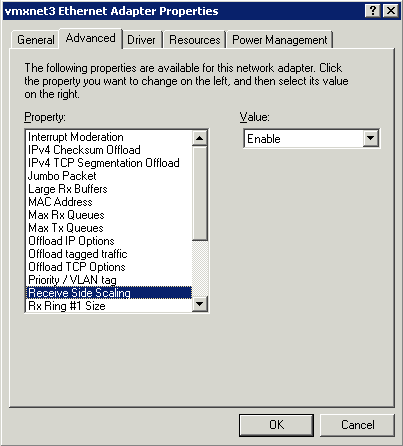

- Receive Side Scaling (RSS, supported in Windows 2008 when explicitly enabled)

When Receive Side Scaling (RSS) is enabled, receive data processing for TCP connections is spread across multiple processors or processor cores, with the packets of a given connection steered to the same core. Without RSS, all of the processing is performed by a single processor, resulting in inefficient system cache utilization.

RSS is enabled on the Advanced tab of the adapter property sheet. If your adapter does not support RSS, or if your operating system does not support it, the RSS setting will not be displayed.
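The idea behind RSS can be sketched as hashing each connection's addresses and ports to pick a receive queue. This is a minimal illustration only: `rss_queue` is a hypothetical helper, and `zlib.crc32` stands in for the hash a real adapter would use (typically a Toeplitz hash with a configured key).

```python
import zlib
from collections import Counter


def rss_queue(src_ip: str, src_port: int, dst_ip: str, dst_port: int,
              num_queues: int) -> int:
    """Map a TCP flow 4-tuple to a receive queue index."""
    key = f"{src_ip}:{src_port}->{dst_ip}:{dst_port}".encode()
    # Stand-in hash (assumption): real NICs do not use CRC32 for RSS.
    return zlib.crc32(key) % num_queues


# Every packet of one connection lands on the same queue...
q1 = rss_queue("10.0.0.5", 40000, "10.0.0.9", 443, num_queues=4)
q2 = rss_queue("10.0.0.5", 40000, "10.0.0.9", 443, num_queues=4)
print("same flow, same queue:", q1 == q2)

# ...while many connections spread over the available queues.
spread = Counter(
    rss_queue("10.0.0.5", port, "10.0.0.9", 443, num_queues=4)
    for port in range(40000, 40100)
)
print("connections per queue:", dict(spread))
```

Keeping a connection on one queue preserves packet ordering and cache locality, while the spread across queues lets several cores share the load.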

- IPv6 TCP Segmentation Offloading (TSO over IPv6)

IPv6 TCP Segmentation Offloading significantly helps to reduce transmit processing performed by the vCPUs and improves both transmit efficiency and throughput. If the uplink NIC supports TSO6, the segmentation work is offloaded to the network hardware; otherwise, software segmentation is performed inside the VMkernel before the packets are passed to the uplink. Therefore, TSO6 can be enabled for VMXNET3 whether or not the hardware NIC supports it.
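To make the segmentation step concrete, here is a minimal sketch of the work that TSO moves out of the guest: cutting one large send buffer into MSS-sized pieces. The `segment` function is hypothetical, and the MSS of 1440 bytes assumes a 1500-byte MTU with a 40-byte IPv6 header and a 20-byte TCP header (no extension headers or options). Real segmentation also rewrites headers, sequence numbers, and checksums, which is omitted here.

```python
from typing import List


def segment(payload: bytes, mss: int) -> List[bytes]:
    """Split one large TCP payload into chunks of at most mss bytes."""
    if mss <= 0:
        raise ValueError("mss must be positive")
    return [payload[i:i + mss] for i in range(0, len(payload), mss)]


# Assumption: 1500-byte MTU - 40-byte IPv6 header - 20-byte TCP header.
MSS_IPV6 = 1440

big_send = bytes(64000)  # one large buffer handed down by the guest
segments = segment(big_send, MSS_IPV6)
print(len(segments), "segments, last one", len(segments[-1]), "bytes")
```

With TSO6 enabled, the guest hands down the single large buffer and this loop runs in the uplink NIC or the VMkernel instead of on the vCPU.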

- NAPI (supported in Linux)

The VMXNET3 driver is NAPI-compliant on Linux guests. NAPI is an interrupt mitigation mechanism that improves high-speed networking performance on Linux by switching back and forth between interrupt mode and polling mode during packet receive. It is a proven technique to improve CPU efficiency and allows the guest to process higher packet loads.

New API (also referred to as NAPI) is an interface to use interrupt mitigation techniques for networking devices in the Linux kernel. Such an approach is intended to reduce the overhead of packet receiving. The idea is to defer incoming message handling until there is a sufficient amount of them so that it is worth handling them all at once.

A straightforward method of implementing a network driver is to interrupt the kernel by issuing an interrupt request (IRQ) for each and every incoming packet. However, servicing IRQs is costly in terms of processor resources and time. The straightforward implementation can therefore be very inefficient in high-speed networks, constantly interrupting the kernel with thousands of packets per second. Overall system performance as well as network throughput can suffer as a result.

Polling is an alternative to interrupt-based processing. The kernel can periodically check for the arrival of incoming network packets without being interrupted, which eliminates the overhead of interrupt processing. Establishing an optimal polling frequency is important, however. Too frequent polling wastes CPU resources by repeatedly checking for incoming packets that have not yet arrived. On the other hand, polling too infrequently introduces latency by reducing system reactivity to incoming packets, and it may result in the loss of packets if the incoming packet buffer fills up before being processed.

As a compromise, the Linux kernel uses the interrupt-driven mode by default and only switches to polling mode when the flow of incoming packets exceeds a certain threshold, known as the “weight” of the network interface.
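The switch between interrupt mode and polling mode can be sketched with a small simulation. This is a simplified model, not the kernel's implementation: the `NapiSim` class and its fields are hypothetical, and `weight` is treated here as the maximum number of packets handled in one poll pass.

```python
from collections import deque


class NapiSim:
    """Toy model of interrupt mitigation: one IRQ per burst, then polling."""

    def __init__(self, weight: int = 64):
        self.weight = weight        # assumed per-poll packet budget
        self.rx_ring = deque()      # packets waiting to be processed
        self.irq_enabled = True     # True = interrupt mode, False = polling
        self.interrupts = 0
        self.processed = 0

    def packet_arrives(self, pkt) -> None:
        self.rx_ring.append(pkt)
        if self.irq_enabled:
            # The first packet of a burst raises an interrupt; the handler
            # masks further interrupts and switches to polling.
            self.interrupts += 1
            self.irq_enabled = False

    def poll(self) -> int:
        """Process up to `weight` packets; re-enable IRQs once the ring drains."""
        done = 0
        while self.rx_ring and done < self.weight:
            self.rx_ring.popleft()
            done += 1
        self.processed += done
        if done < self.weight:
            self.irq_enabled = True  # ring is empty: back to interrupt mode
        return done


sim = NapiSim(weight=64)
for n in range(200):             # a burst of 200 packets
    sim.packet_arrives(n)
polls = 0
while not sim.irq_enabled:       # keep polling until the ring is drained
    sim.poll()
    polls += 1
print(f"{sim.processed} packets, {sim.interrupts} interrupt(s), {polls} polls")
```

In this model a 200-packet burst costs one interrupt rather than 200, which is the saving the paragraphs above describe.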

- LRO (supported in Linux, VM‐VM only)

VMXNET3 also supports Large Receive Offload (LRO) on Linux guests. However, in ESX 4.0 the VMkernel backend supports large receive packets only if the packets originate from another virtual machine running on the same host.
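Conceptually, LRO is the receive-side mirror of TSO: consecutive in-order segments of the same connection are merged into one larger packet before being handed to the guest's network stack. The sketch below assumes a simplified packet representation of `(flow_id, seq, payload)` tuples; `lro_coalesce` and its `max_size` limit are hypothetical names for illustration.

```python
from typing import List, Tuple

Segment = Tuple[str, int, bytes]  # (flow id, starting sequence number, payload)


def lro_coalesce(segments: List[Segment], max_size: int = 65535) -> List[Segment]:
    """Merge back-to-back, in-order segments of the same flow."""
    merged: List[Segment] = []
    for flow, seq, data in segments:
        if merged:
            last_flow, last_seq, last_data = merged[-1]
            contiguous = flow == last_flow and seq == last_seq + len(last_data)
            if contiguous and len(last_data) + len(data) <= max_size:
                # Extend the previous packet instead of delivering a new one.
                merged[-1] = (last_flow, last_seq, last_data + data)
                continue
        merged.append((flow, seq, data))
    return merged


arrivals = [
    ("A", 0, b"a" * 1000),
    ("A", 1000, b"b" * 1000),   # continues flow A: merged with the first
    ("B", 0, b"c" * 500),       # different flow: delivered separately
    ("A", 2000, b"d" * 1000),   # flow A again, but not adjacent in arrival order
]
for flow, seq, data in lro_coalesce(arrivals):
    print(flow, seq, len(data))
```

The guest then processes fewer, larger packets, which reduces per-packet overhead on receive.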