VMware ESXi 5.0 installs and runs only on servers with 64-bit x86 CPUs. It also requires that the CPU support the LAHF and SAHF instructions in 64-bit mode. These are known 64-bit processors:

- All AMD Opteron processors

- All Intel Xeon 3000/3200, 3100/3300, 5100/5300, 5200/5400, 5500/5600, 7100/7300, 7200/7400, and 7500 processors

Early AMD64 and Intel 64 CPUs lacked LAHF and SAHF instructions. AMD introduced the instructions with their Athlon 64, Opteron and Turion 64 revision D processors in March 2005 while Intel introduced the instructions with the Pentium 4 G1 stepping in December 2005.
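One way to find out whether a given server falls into the early group is to boot it into a Linux environment and inspect `/proc/cpuinfo`, where the kernel lists the `lahf_lm` flag for CPUs that support LAHF and SAHF in 64-bit mode. The sketch below assumes such a Linux environment is available on the host; the function names are illustrative and are not part of any VMware tool.

```python
def has_lahf_sahf_long_mode(cpuinfo_text):
    """Return True if any 'flags' line in the given cpuinfo text lists lahf_lm."""
    for line in cpuinfo_text.splitlines():
        key, sep, value = line.partition(":")
        if sep and key.strip() == "flags":
            if "lahf_lm" in value.split():
                return True
    return False


def check_local_cpu(path="/proc/cpuinfo"):
    """Read the cpuinfo file (assumption: Linux host) and test for lahf_lm."""
    with open(path) as f:
        return has_lahf_sahf_long_mode(f.read())
```

Calling `check_local_cpu()` on the server under test returns `True` when the flag is present. Keeping the parsing in a separate function makes it possible to check saved cpuinfo output from another machine.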

LAHF and SAHF are load and store instructions, respectively, for certain status flags. These instructions are used for virtualization and floating-point condition handling.

1. Flag Control Instructions

The flag control instructions provide a method for directly changing the state of bits in the flag register.

2. Carry and Direction Flag Control Instructions

The carry flag instructions are useful in conjunction with rotate-with-carry instructions RCL and RCR. They can initialize the carry flag, CF, to a known state before execution of a rotate that moves the carry bit into one end of the rotated operand.

The direction flag control instructions are specifically included to set or clear the direction flag, DF, which controls the left-to-right or right-to-left direction of string processing. If DF=0, the processor automatically increments the string index registers, ESI and EDI, after each execution of a string primitive. If DF=1, the processor decrements these index registers. Programmers should use one of these instructions before any procedure that uses string instructions to ensure that DF is set properly.

- STC (Set Carry Flag): CF <- 1

- CLC (Clear Carry Flag): CF <- 0

- CMC (Complement Carry Flag): CF <- NOT (CF)

- CLD (Clear Direction Flag): DF <- 0

- STD (Set Direction Flag): DF <- 1
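To illustrate why the carry flag should be in a known state before a rotate-with-carry, here is a minimal Python model of an 8-bit RCL. It is a sketch for illustration only: `rcl8` is a hypothetical helper, and the `cf` argument stands in for the state left behind by CLC (0) or STC (1).

```python
def rcl8(value, cf, count=1):
    """Rotate an 8-bit value left through the carry flag, one bit per step.

    Returns the rotated value and the resulting carry flag.
    """
    for _ in range(count):
        new_cf = (value >> 7) & 1            # bit 7 moves out into CF
        value = ((value << 1) & 0xFF) | cf   # the old CF moves into bit 0
        cf = new_cf
    return value, cf


# Same operand, different initial carry: the bit rotated in differs.
after_clc = rcl8(0x81, 0)   # carry cleared first, as CLC would do
after_stc = rcl8(0x81, 1)   # carry set first, as STC would do
```

With the carry cleared the result is `(0x02, 1)`, and with the carry set it is `(0x03, 1)`, so an uninitialized CF changes the low bit of the rotated operand.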

3. Flag Transfer Instructions

Though specific instructions exist to alter CF and DF, there is no direct method of altering the other applications-oriented flags. The flag transfer instructions allow a program to alter the other flag bits with the bit manipulation instructions after transferring these flags to the stack or the AH register.

The instructions LAHF and SAHF deal with five of the status flags, which are used primarily by the arithmetic and logical instructions.

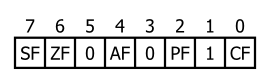

LAHF (Load AH from Flags) copies SF, ZF, AF, PF, and CF to AH bits 7, 6, 4, 2, and 0, respectively (see Figure below). The contents of the remaining bits (5, 3, and 1) are undefined. The flags remain unaffected.

SAHF (Store AH into Flags) transfers bits 7, 6, 4, 2, and 0 from AH into SF, ZF, AF, PF, and CF, respectively (see Figure below).
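The bit mapping described for LAHF and SAHF can be sketched as a small Python model. This is a simplified illustration, not processor-accurate behavior: the names are hypothetical, and the model clears AH bits 5, 3, and 1, which the text above describes as undefined.

```python
# Bit masks for the five status flags in the low byte of the flags register.
CF, PF, AF, ZF, SF = 0x01, 0x04, 0x10, 0x40, 0x80
STATUS_MASK = CF | PF | AF | ZF | SF


def lahf(flags):
    """Return the AH value LAHF would produce (bits 5, 3, 1 cleared in this model)."""
    return flags & STATUS_MASK


def sahf(flags, ah):
    """Return the flags register after SAHF: only SF, ZF, AF, PF, CF change."""
    return (flags & ~STATUS_MASK) | (ah & STATUS_MASK)


def toggle_zf(flags):
    """Alter ZF, which has no dedicated set/clear instruction, via AH."""
    ah = lahf(flags)
    ah ^= ZF                 # ordinary bit manipulation on the copy in AH
    return sahf(flags, ah)
```

`toggle_zf` follows the pattern described earlier: transfer the flags to AH, change a bit with a normal logical operation, then store AH back. Flags outside the low byte, such as DF, pass through unchanged.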

The PUSHF and POPF instructions are not only useful for storing the flags in memory where they can be examined and modified but are also useful for preserving the state of the flags register while executing a procedure.

PUSHF (Push Flags) decrements ESP by two and then transfers the low-order word of the flags register to the word at the top of stack pointed to by ESP (see Figure below). The variant PUSHFD decrements ESP by four, then transfers both words of the extended flags register to the top of the stack pointed to by ESP (the VM and RF flags are not moved, however).

POPF (Pop Flags) transfers specific bits from the word at the top of stack into the low-order word of the flag register (see Figure below), then increments ESP by two. The variant POPFD transfers specific bits from the double word at the top of the stack into the extended flags register (the RF and VM flags are not changed, however), then increments ESP by four.
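The stack movement of the 16-bit PUSHF and POPF forms can be modeled in a few lines of Python. This is a sketch under simplifying assumptions: the stack is a plain `bytearray`, the function names are hypothetical, and the model copies all 16 bits on POPF, whereas the processor transfers only specific bits.

```python
def pushf(mem, esp, flags):
    """Decrement ESP by two, then store the low-order word of flags at the new top."""
    esp -= 2
    mem[esp:esp + 2] = (flags & 0xFFFF).to_bytes(2, "little")
    return esp


def popf(mem, esp, flags):
    """Load the word at the top of stack into the low word of flags, then add two to ESP."""
    word = int.from_bytes(mem[esp:esp + 2], "little")
    flags = (flags & ~0xFFFF) | word
    return esp + 2, flags


# Save the flags, modify the saved copy in memory, then restore it.
stack = bytearray(16)
esp = pushf(stack, 16, 0x0001)     # flags with only CF set
stack[esp + 1] |= 0x08             # set bit 11 (OF) in the saved word
esp, flags = popf(stack, esp, 0x0001)
```

After the sequence, `esp` is back at 16 and `flags` holds `0x0801`, showing how a flag with no dedicated set or clear instruction can be changed through the copy on the stack.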

4. LAHF and SAHF

LAHF loads five flags from the flag register into register AH. SAHF stores these same five flags from AH into the flag register. The bit position of each flag is the same in AH as it is in the flag register. The remaining bits (marked 0) are reserved; do not define them.