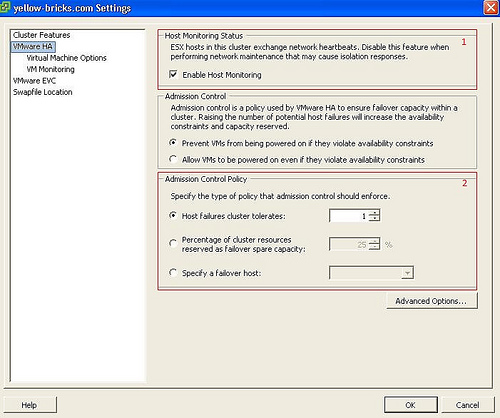

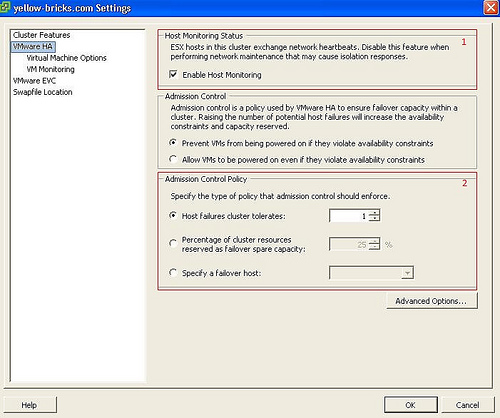

VMware HA clusters enable a collection of ESX/ESXi hosts to work together so that, as a group, they provide higher levels of availability for virtual machines than each ESX/ESXi host could provide individually. When you plan the creation and usage of a new VMware HA cluster, the options you select affect the way that cluster responds to failures of hosts or virtual machines.

Before creating a VMware HA cluster, you should be aware of how VMware HA identifies host failures andisolation and responds to these situations. You also should know how admission control works so that you can choose the policy that best fits your failover needs. After a cluster has been established, you can customize its behavior with advanced attributes and optimize its performance by following recommended best practices.

How VMware HA works

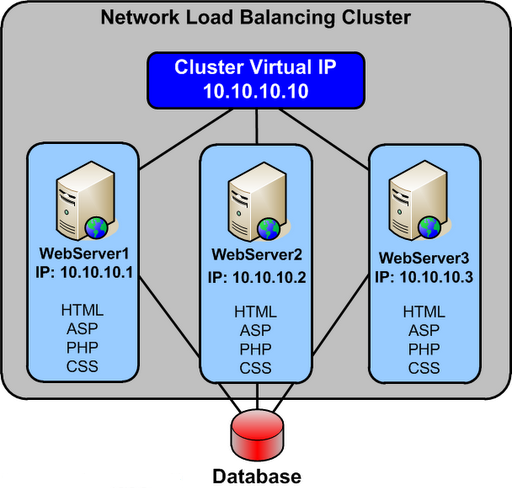

VMware HA provides high availability for virtual machines by pooling them and the hosts they reside on into a cluster. Hosts in the cluster are monitored and in the event of a failure, the virtual machines on a failed host are restarted on alternate hosts.

Primary and Secondary Hosts in a VMware HA Cluster

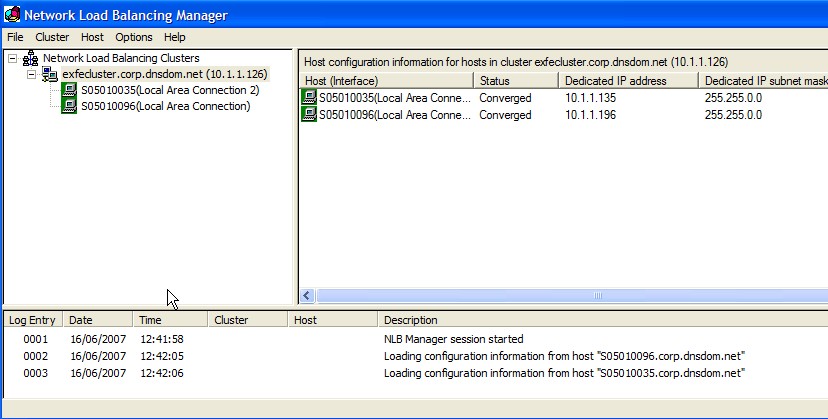

When you add a host to a VMware HA cluster, an agent is uploaded to the host and configured to communicate with other agents in the cluster. The first five hosts added to the cluster are designated as primary hosts, and all subsequent hosts are designated as secondary hosts. The primary hosts maintain and replicate all cluster state and are used to initiate failover actions. If a primary host is removed from the cluster, VMware HA promotes another host to primary status.

Any host that joins the cluster must communicate with an existing primary host to complete its configuration (except when you are adding the first host to the cluster). At least one primary host must be functional for VMware HA to operate correctly. If all primary hosts are unavailable (not responding), no hosts can be successfully configured for VMware HA.

Failure Detection and Host Network Isolation

Agents communicate with each other and monitor the liveness of the hosts in the cluster. This is done through the exchange of heartbeats, by default, every second. If a 15-second period elapses without the receipt of heartbeats from a host, and the host cannot be pinged, it is declared as failed. In the event of a host failure, the virtual machines running on that host are failed over, that is, restarted on the alternate hosts with the most available unreserved capacity (CPU and memory.)

Note: In the event of a host failure, VMware HA does not fail over any virtual machines to a host that is in maintenance mode, because such a host is not considered when VMware HA computes the current failover level. When a host exits maintenance mode, the VMware HA service is re-enabled on that host, so it becomes available for failover again.

Host network isolation occurs when a host is still running, but it can no longer communicate with other hosts in the cluster. With default settings, if a host stops receiving heartbeats from all other hosts in the cluster for more than 12 seconds, it attempts to ping its isolation addresses. If this also fails, the host declares itself as isolated from the network.

When the isolated host’s network connection is not restored for 15 seconds or longer, the other hosts in the cluster treat it as failed and attempt to fail over its virtual machines. However, when an isolated host retains access to the shared storage it also retains the disk lock on virtual machine files. To avoid potential data corruption, VMFS disk locking prevents simultaneous write operations to the virtual machine disk files and attempts to fail over the isolated host’s virtual machines fail. By default, the isolated host shuts down its virtual

machines, but you can change the host isolation response to Leave powered on or Power off

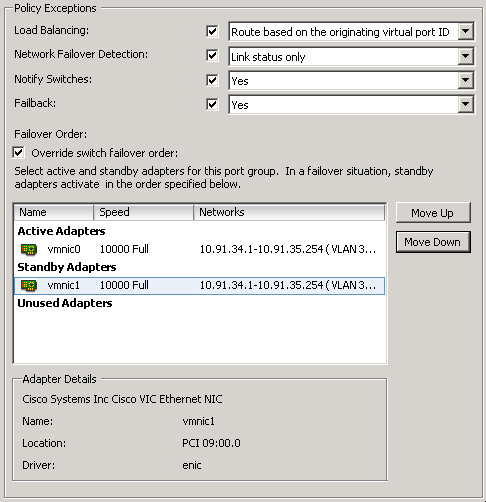

Redundancy and Reducing Isolation

If you ensure that your network infrastructure is sufficiently redundant and that at least one network path is available at all times, host network isolation should be a rare occurrence.

Which setting should I use?

It depends. Some people prefer “Shut down” because they do not want to use a deprecated host and it will shut down your VMs in a clean manner.

The isolation response is a setting that needs to be taken into account when you create your design. For instance when using an iSCSI array or NFS choosing “leave powered on” as your default isolation response might lead to a split-brain situation depending on the version of ESX used. The reason for this being that the disk lock times out if the iSCSI network is also unavailable. In this case the VM is being restarted on a different host while it is not being powered off on the original host. In a normal situation this should not lead to problems as the VM is restarted and the host on which it runs owns the lock on the VMDK, but for some weird reason when disaster strikes you will not end up in a normal situation but you might end up in an exceptional situation

Many people prefer to use “Leave powered on” because it reduces the chances of a false positive. A false positive in this case is an isolated heartbeat network but a non-isolated VM network and a non-isolated iSCSI / NFS network.

How does HA knows if the host is isolated or completely unavailable when you have selected “leave powered on”?

HA actually does not know the difference. HA will try to restart the affected VMs in both cases. When the host has failed a restart will take place, but if a host is merely isolated the non-isolated hosts will not be able to restart the affected VMs. This is because of the VMDK file lock; no other host will be able to boot a VM when the files are locked. When a host fails this lock starves and a restart can occur.

Isolation Response Considerations

The default value for isolation/failure detection is 15 seconds. In other words the failed or isolated host will be declared dead by the other hosts in the HA cluster on the fifteenth second and a restart will be initiated by one of the primary hosts.

For now let’s assume the isolation response is “power off”. The “power off”(isolation response) will be initiated by the isolated host 1 second before the das.failuredetectiontime. A “power off” will be initiated on the fourteenth second and a restart will be initiated on the fifteenth second.

Does this mean that you can end up with your VMs being down and HA not restarting them?

Yes, when the heartbeat returns between the 14th and 15th second the “power off” could already have been initiated. The restart however will not be initiated because the heartbeat indicates that the host is not isolated anymore.

How can you avoid this?

Pick “Leave VM powered on” as an isolation response. Increasing the das.failuredetectiontime will also decrease the chances of running in to issues like these.

Basic design principle: Increase “das.failuredetectiontime” to 30 seconds (30000) to decrease the likely-hood of a false positive

Please see the below link for further information on this

http://rickardnobel.se/vmware-ha-das-failuredetectiontime/