Useful to have for any drawings or diagrams

Archive for VMware

What is an ODBC Connection?

ODBC stands for Open Database Connectivity. An ODBC connection defines how a computer reaches a database stored on another system: it contains the information needed to let a user access data in a database that is not local to that computer. First you define the type of database application, such as Microsoft SQL Server, Oracle, FoxPro or MySQL. Then you select or supply the appropriate driver for the connection (Windows already contains many of these), and finally you supply the name of the database and the credentials needed to access it.

Once the ODBC connection is created, you can tell specific programs to use that ODBC connection to access information in that database.

ODBC was developed by the SQL Access Group in 1992 to standardize the use of a DBMS by an application. ODBC provides a universal middleware layer between the application and the DBMS, allowing the application developer to use a single interface. If changes are made to the DBMS specification, only the driver needs updating. An ODBC driver can be thought of as analogous to a printer driver or any other driver: it provides a standard set of functions for the application to use and implements the DBMS-specific functionality.

An application that can use ODBC is referred to as “ODBC-compliant”. Any ODBC-compliant application can access any DBMS for which a driver is installed. Drivers exist for all major DBMSs and even for text or CSV files.
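To make the pieces of an ODBC connection concrete, here is a minimal Python sketch that assembles the two common forms of ODBC connection string: one that refers to a preconfigured data source (DSN) by name, and one that names the driver, server and database directly. The helper functions and every value passed to them (the DSN name, host, database and login) are hypothetical placeholders. The resulting string would normally be handed to an ODBC library; none is imported here.

```python
def dsn_connection_string(dsn, user, password):
    """Build a connection string that refers to a preconfigured DSN by name."""
    return f"DSN={dsn};UID={user};PWD={password}"


def driver_connection_string(driver, server, database, user, password):
    """Build a DSN-less connection string that names the driver explicitly."""
    # The driver name is wrapped in literal braces, e.g. DRIVER={SQL Native Client}
    return (
        f"DRIVER={{{driver}}};SERVER={server};DATABASE={database};"
        f"UID={user};PWD={password}"
    )


if __name__ == "__main__":
    # All values below are made-up placeholders.
    print(dsn_connection_string("vCenterDB", "vcuser", "secret"))
    print(driver_connection_string(
        "SQL Native Client", "sqlhost01", "VCDB", "vcuser", "secret"))
```

The DSN form is what an ODBC-compliant application effectively uses once a data source has been defined on the machine: the driver, server and default database are looked up from the DSN, so the application only needs its name and the credentials.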

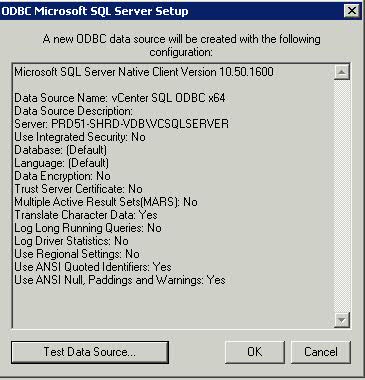

VMware ODBC Connection Example

The database used for vCenter can be installed on a dedicated host or on the same host, depending on the size of your environment. For scalability, I recommend installing vCenter and SQL Server on separate hosts unless you’re running a very small environment and know that it will never grow.

Instructions

- Note: SQL Server must be installed and a DB created for vCenter

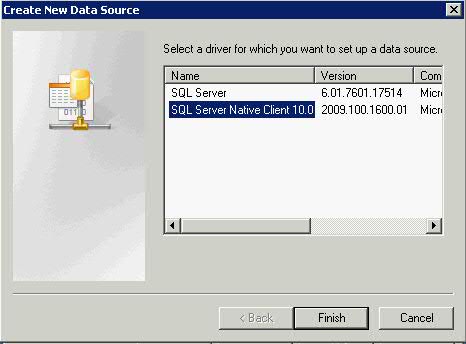

- Note: The ODBC source vCenter uses must be created with the SQL Native Client driver (the SQL driver provided with Windows will not work). The SQL Native Client driver can be found on the SQL Server 2005 installation media. Install this first.

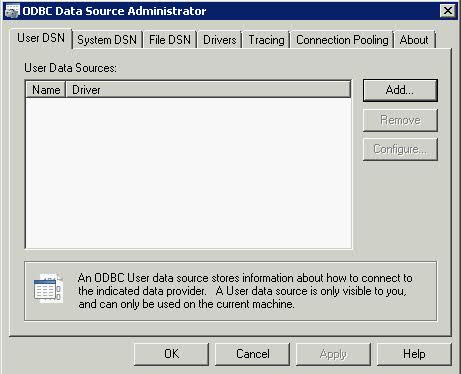

- Open Control Panel on your vCenter Server followed by Administrative Tools

- Open the ODBC Connection icon

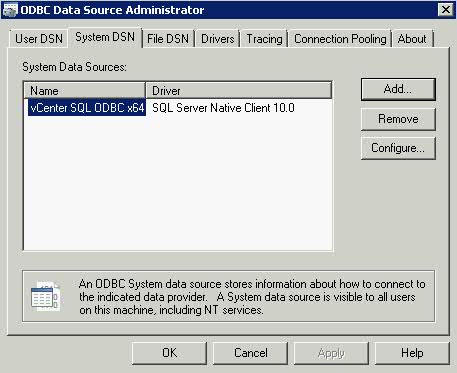

- Click the System DSN tab and click the “Add” button

- Choose SQL Native Client

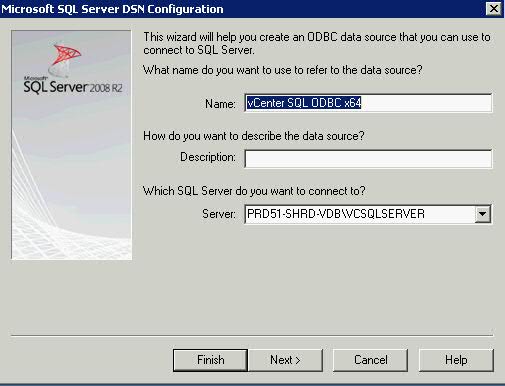

- Provide a name for the data source (you will reference this name during the vCenter install) and provide the hostname/IP of the SQL Server instance you created when you installed SQL Server

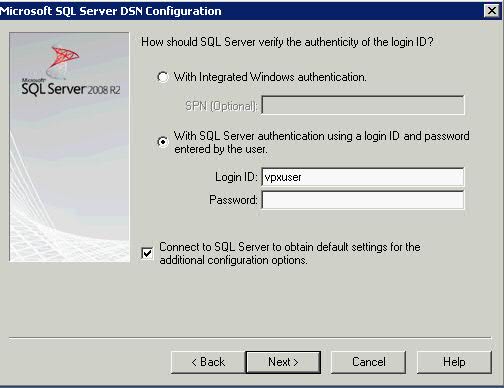

- Click Next, select the “SQL Server authentication” type, and provide the login and password of the SQL Server user you created earlier in SQL Management Studio (a new user with SQL Server Authentication)

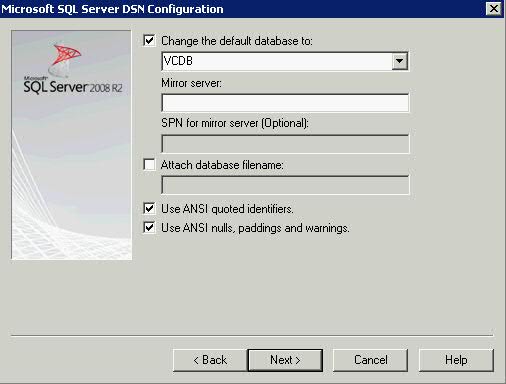

- Click Next and Change the default database to the name of your previously created vCenter database

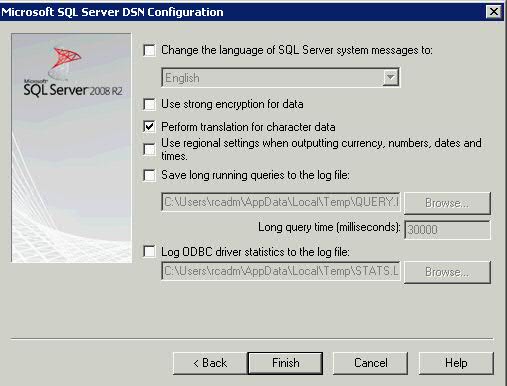

- Click Next and keep the following settings

- Click Finish

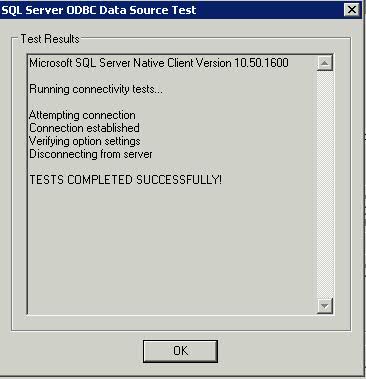

- Test Data Source. It should show the below

Deleting a VM with Raw Disk Mappings

How do you delete a VM with Raw Disk Mappings?

To the best of my knowledge, if you delete the VM it will only delete the pointer file and you would have to delete the RDM on the SAN.

Before deleting the VM, you could always click on Edit Settings and select the RDM and Remove Disk – Delete from disk. This should delete both the pointer file and the RDM.

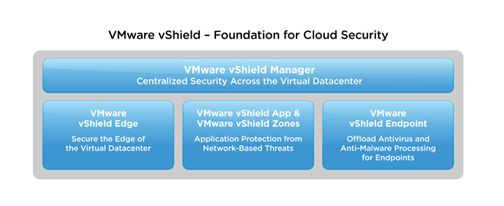

Should you put Antivirus on your VMware Hosts?

Installing antivirus software in the service console means choosing between security and performance for an ESX(i) host. It will impact your performance dramatically, since it uses RAM and CPU and can cause performance degradation. ESX(i) is a very secure platform, and if you can lock down your service console (SC) then you’re pretty safe. I wouldn’t recommend deploying any antivirus to the ESX(i) service console at all. To maximize security on ESX(i)/SC, you can apply the Tripwire ConfigCheck tool, the CIS security guide, or even the DoD UNIX SRR scripts, which scan and remediate in depth.

VMKernel Security

The security features below are built into VMware ESXi. They show that VMware has already thought the security aspect through very well, which is why adding antivirus to these systems may not be absolutely necessary:

- Memory Hardening

The ESX/ESXi kernel, user-mode applications, and executable components such as drivers and libraries are located at random, non-predictable memory addresses. Combined with the nonexecutable memory protections made available by microprocessors, this provides protection that makes it difficult for malicious code to use memory exploits to take advantage of vulnerabilities.

- Kernel Module Integrity

Digital signing ensures the integrity and authenticity of modules, drivers and applications as they are loaded by the VMkernel. Module signing allows ESXi to identify the providers of modules, drivers, or applications and whether they are VMware-certified.

- Trusted Platform Module (ESXi only, and enabled in the BIOS)

This module is a hardware element that represents the core of trust for a hardware platform and enables attestation of the boot process, as well as cryptographic key storage and protection. Each time ESXi boots, TPM measures the VMkernel with which ESXi booted in one of its Platform Configuration Registers (PCRs). TPM measurements are propagated to vCenter Server when the host is added to the vCenter Server system.

Recommendations

- Lock down the service console to only those that require direct access to it.

- Don’t allow root logins. Use sudo instead, and integrate the logins into an LDAP or AD infrastructure.

- There are very few viruses that will affect a Linux system, and they require specialized privileges to infect and then run on a *nix system.

- Minimize the agents running on an ESX(i) host. Even though the service console is Linux, any additional software may interfere with the running of the VMkernel.

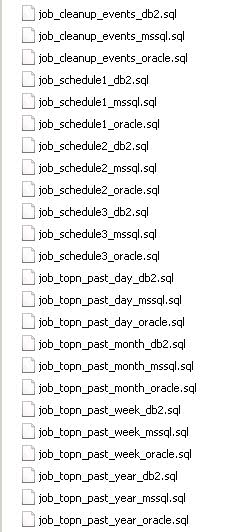

VMware DB Scripts for Performance Stats

We completely reinstalled our SQL Server 2008 database and restored the Virtual Center DB and the Virtual Update Manager DB, mainly because someone had installed the SQL Server 2008 software in Evaluation Mode, which then expired and caused us no end of problems!

What you need to remember if you rebuild and restore the databases is to add the performance scripts back into SQL Server Management Studio for Weekly, Monthly and Yearly

The Issue

Following a SQL DB re-installation and restore we were doing the following

- Click on Host

- Click on Performance Tab

- Click Advanced

- Click on Chart Options

- Choose Week, or Past Month

It comes up with “Performance Data is currently not available for this entity”

Instructions

- The exact steps depend on how you are set up. (We had a separate VM for our DB and vCenter Server)

- Log into SQL Management Studio on your Database Server

- On the DB Server, create a shortcut to the following path on your vCenter Server. (This contains the scripts you need and may be on the C or D Drive)

- D:\Program Files\VMware\Infrastructure\VirtualCenter Server

- Make sure you have the Virtual Center DB selected in SQL Management Studio. Then just double click the file; it should open against the VCDB.

- Check this link for the script names

- If you have problems with that for any reason, you can create a new query against the VCDB and then open up the SQL scripts in notepad and copy across.

- The scheduled jobs trigger the stored procedures which are already part of the DB. Amongst other things, they clear out the raw data after it’s been processed and is no longer needed, and they also remove old tasks and events from the DB.

Scripts

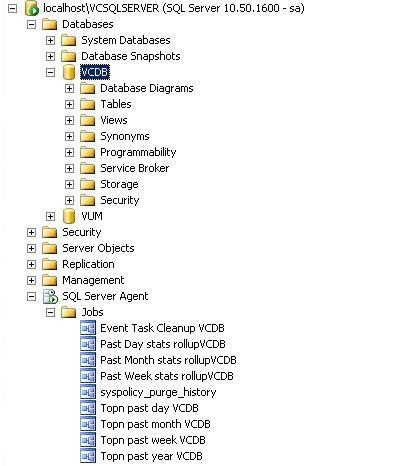

This is what you should see in your SQL Server Management Studio Application

SQL Database Tables getting too big in VMware

Checking vCenter DB Table sizes

Sometimes you can experience issues with the vCenter Database getting too large. If you want to check the table sizes to confirm this, please do the following.

- Open SQL Management Studio

- Select vCenter DB name

- Execute the following query to get Table sizes

Create Table #Temp(Name sysname, rows int, reserved varchar(100), data varchar(100), index_size varchar(100), unused varchar(100))

exec sp_msforeachtable 'Insert Into #Temp Exec sp_spaceused ''?'', ''true'''

Select * From #Temp

Drop Table #Temp

Purge Scripts

There is a set of purge scripts that lets you set how much historical data to keep and removes everything else from the DB.

Take a look at this KB, it runs through the process and has a link to the scripts:

http://kb.vmware.com/kb/1025914

One thing I will say is that the scripts can take a long time to run. If your DB is large, I would kick them off and let them run over the weekend if they haven’t completed by the end of the day. The scripts are perfectly safe, but I would still take a backup of the DB before doing anything, just for peace of mind.

DB Maintenance

You should run a shrink on the DB to remove empty space once data has been purged. A regular backup is also a good idea: under the full recovery model, SQL Server only truncates the transaction log after a log backup, so without backups the log file keeps growing. The Microsoft documentation for your version of MS SQL has details and recommendations for setting this up.

Useful Links

Defragmenting VirtualCenter performance data indexes on a Microsoft SQL database:

http://kb.vmware.com/selfservice/microsites/search.do?language=en_US&cmd=displayKC&externalId=1003990

Unable to get an exclusive access to the vCenter Server repository:

http://kb.vmware.com/selfservice/microsites/search.do?language=en_US&cmd=displayKC&externalId=1006369

ESXi 4.1 Active Directory Integration

Although day-to-day vSphere management operations are usually done on vCenter Server logged in through the vSphere Client, there are instances when users must work with ESXi directly, such as with configuration backup and log file access. Then there are monitoring solutions, which sometimes require direct access to the ESXi host; these would typically be configured to use service accounts. Prior to ESXi 4.1, you could only create local users, which each had separate locally-stored passwords per host. Since this is cumbersome and doesn’t scale, VMware decided to address this in the vSphere 4.1 release.

With ESXi 4.1, you can configure the host to join an Active Directory domain, and any user trying to access the host will automatically be authenticated against the centralized user directory. Any time you are asked to provide credentials (e.g., logging in directly to the ESXi host using vSphere Client, or running a vCLI command or script), you can enter the username and password of a user in the domain to which the host is joined. The big advantage of this is that you can now continue to manage user accounts using Active Directory, which is significantly easier and more secure than trying to manage accounts independently on a per-host basis.

You can still have local users defined and managed on a host-by-host basis and configured using the vSphere client, vCLI or PowerCLI. This can be used in place of, or in addition to, the Active Directory integration. I’m not sure if there is really a good reason to do this if the host is already joined to an AD domain, but this capability is available.

If the host is integrated with Active Directory, local roles can also be granted to Active Directory users and groups. For example, an Active Directory group can be created to include users who should have an administrator role on a subset of ESXi servers. On those servers, the administrator role can be granted to that Active Directory group; for all other servers, those users would not have an administrator role. ESXi 4.1 also automatically grants administrator access to the Active Directory group named “ESX Admins,” which allows the creation of a global administrators group. (If you want to override this behavior, simply configure the roles on the ESXi host to give the “No Access” role to the “ESX Admins” group — this will override the default behavior).

Instructions

- Select a Host > Click Configuration > Click Authentication Services

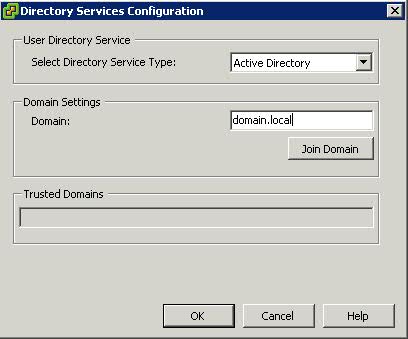

- Click Properties, choose Active Directory from the drop-down option, and then type in the domain. In this example, it is domain.local

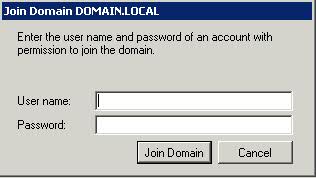

- Click Join Domain and Enter a username and password which has authority to join machines to the domain

- You have now joined the host to the AD Domain

- Log into Active Directory and you now need to create a new Global Security Group called ESX Admins. This is the default security group that the ESX host is going to be looking for

- Right Click Group and Add Members

- Now you can test this by opening up the vSphere client and logging in directly to the host as a member of the ESX Admins group

- If you then click on the host and click on Permissions, you will see the ESX Admins group listed

- You can then add other Permissions by right clicking in the screen and selecting Add Permission

- Choose your AD user and then select the VMware Role you want this AD user to have

Memory Over Allocation for VMs – What Happens?

ESX employs a share-based allocation algorithm to achieve efficient memory utilization for all virtual machines and to guarantee memory to those virtual machines which need it most

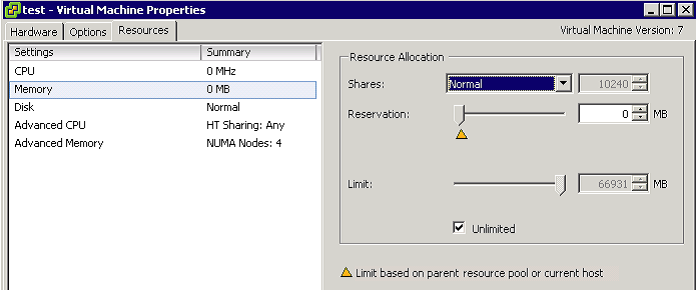

ESX provides three configurable parameters to control the host memory allocation for a virtual machine

- Shares

- Reservation

- Limit

Limit is the upper bound of the amount of host physical memory allocated for a virtual machine. By default, limit is set to unlimited, which means a virtual machine’s maximum allocated host physical memory is its specified virtual machine memory size

Reservation is a guaranteed lower bound on the amount of host physical memory the host reserves for a virtual machine even when host memory is overcommitted.

Memory Shares entitle a virtual machine to a fraction of available host physical memory, based on a proportional-share allocation policy. For example, a virtual machine with twice as many shares as another is generally entitled to consume twice as much memory, subject to its limit and reservation constraints.

Periodically, ESX computes a memory allocation target for each virtual machine based on its share-based entitlement, its estimated working set size, and its limit and reservation. Here, a virtual machine’s working set size is defined as the amount of guest physical memory that is actively being used. When host memory is undercommitted, a virtual machine’s memory allocation target is the virtual machine’s consumed host physical memory size with headroom
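The interaction of shares, reservation and limit can be illustrated with a small Python sketch. It is a deliberately simplified model, not ESX's actual algorithm: it ignores the working set estimate entirely, gives every virtual machine its reservation first, and then hands out the remaining host memory in proportion to shares until a virtual machine hits its limit. The function name and the example figures are hypothetical.

```python
def allocate_memory(host_mb, vms):
    """Split host memory among VMs in proportion to their shares.

    vms maps a VM name to (shares, reservation_mb, limit_mb).
    Shares are assumed to be positive. Simplified model only.
    """
    # Every VM is guaranteed its reservation before anything else is handed out.
    alloc = {name: float(res) for name, (_, res, _) in vms.items()}
    remaining = float(host_mb) - sum(alloc.values())
    if remaining < 0:
        raise ValueError("reservations exceed host memory")

    # VMs that can still receive memory (not yet at their limit).
    active = {name for name, (_, res, limit) in vms.items() if limit > res}
    while remaining > 1e-9 and active:
        total_shares = sum(vms[name][0] for name in active)
        granted = 0.0
        for name in sorted(active):
            shares, _, limit = vms[name]
            grant = remaining * shares / total_shares
            room = limit - alloc[name]
            if grant >= room:
                # The VM reaches its limit; its unused part is redistributed
                # among the other VMs on the next pass.
                grant = room
                active.discard(name)
            alloc[name] += grant
            granted += grant
        remaining -= granted
    return alloc


if __name__ == "__main__":
    # VM "A" has twice the shares of VM "B"; both have no reservation.
    print(allocate_memory(6000, {"A": (2000, 0, 4096), "B": (1000, 0, 4096)}))
    # Lowering A's limit caps it, and B picks up the remainder.
    print(allocate_memory(6000, {"A": (2000, 0, 3000), "B": (1000, 0, 4096)}))
```

In the first call, A ends up with twice as much memory as B, matching the "twice as many shares" example above. In the second call, A's limit stops it at 3000 MB and the leftover goes to B.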

VMware Resource Management (Memory)

VMware® ESX(i)™ is a hypervisor designed to efficiently manage hardware resources including CPU, memory, storage, and network among multiple, concurrent virtual machines.

Memory Overcommitment

The concept of memory overcommitment is fairly simple: host memory is overcommitted when the total amount of guest physical memory of the running virtual machines is larger than the amount of actual host memory. ESX has supported memory overcommitment since the very first version, due to two important benefits it provides:

- Higher memory utilization: With memory overcommitment, ESX ensures that host memory is consumed by active guest memory as much as possible. Typically, some virtual machines may be lightly loaded compared to others. Their memory may be used infrequently, so for much of the time their memory will sit idle. Memory overcommitment allows the hypervisor to use memory reclamation techniques to take the inactive or unused host physical memory away from the idle virtual machines and give it to other virtual machines that will actively use it.

- Higher consolidation ratio: With memory overcommitment, each virtual machine has a smaller footprint in host memory usage, making it possible to fit more virtual machines on the host while still achieving good performance for all virtual machines. For example, as shown in Figure 3, you can enable a host with 4G host physical memory to run three virtual machines with 2G guest physical memory each. Without memory overcommitment, only one virtual machine can be run because the hypervisor cannot reserve host memory for more than one virtual machine, considering that each virtual machine has overhead memory.
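The arithmetic behind the example above (a 4G host running three virtual machines with 2G each) can be written out as a short Python sketch. The function names are hypothetical, and the calculation ignores per-VM overhead memory.

```python
def overcommit_ratio(host_gb, vm_sizes_gb):
    """Total configured guest memory divided by physical host memory."""
    return sum(vm_sizes_gb) / host_gb


def is_overcommitted(host_gb, vm_sizes_gb):
    """Host memory is overcommitted when guests are configured with more than the host has."""
    return sum(vm_sizes_gb) > host_gb


if __name__ == "__main__":
    print(overcommit_ratio(4, [2, 2, 2]))   # 6G of guest memory on a 4G host
    print(is_overcommitted(4, [2, 2, 2]))
```

A ratio above 1 means the host relies on the reclamation techniques described next to keep all virtual machines running.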

ESX uses several innovative techniques to reclaim virtual machine memory, which are:

- Transparent page sharing (TPS): Reclaims memory by removing redundant pages with identical content

- Ballooning: Reclaims memory by artificially increasing the memory pressure inside the guest

- Hypervisor/Host swapping: Reclaims memory by having ESX directly swap out the virtual machine’s memory

- Memory compression: Reclaims memory by compressing the pages that need to be swapped out

Transparent Page Sharing

When multiple virtual machines are running, some of them may have identical sets of memory content. This presents opportunities for sharing memory across virtual machines (as well as sharing within a single virtual machine). For example, several virtual machines may be running the same guest operating system, have the same applications, or contain the same user data. With page sharing, the hypervisor can reclaim the redundant copies and keep only one copy, which is shared by multiple virtual machines in the host physical memory. As a result, the total virtual machine host memory consumption is reduced and a higher level of memory overcommitment is possible.

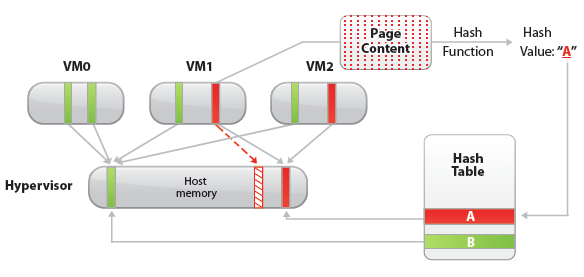

In ESX, the redundant page copies are identified by their contents. This means that pages with identical content can be shared regardless of when, where, and how those contents are generated. ESX scans the content of guest physical memory for sharing opportunities. Instead of comparing each byte of a candidate guest physical page to other pages, an action that is prohibitively expensive, ESX uses hashing to identify potentially identical pages.

A hash value is generated based on the candidate guest physical page’s content. The hash value is then used as a key to look up a global hash table, in which each entry records a hash value and the physical page number of a shared page. If the hash value of the candidate guest physical page matches an existing entry, a full comparison of the page contents is performed to exclude a false match. Once the candidate guest physical page’s content is confirmed to match the content of an existing shared host physical page, the guest physical to host physical mapping of the candidate guest physical page is changed to the shared host physical page, and the redundant host memory copy (the page pointed to by the dashed arrow in the Figure above) is reclaimed. This remapping is invisible to the virtual machine and inaccessible to the guest operating system. Because of this invisibility, sensitive information cannot be leaked from one virtual machine to another.

A standard copy-on-write (CoW) technique is used to handle writes to the shared host physical pages. Any attempt to write to the shared pages will generate a minor page fault. In the page fault handler, the hypervisor will transparently create a private copy of the page for the virtual machine and remap the affected guest physical page to this private copy. In this way, virtual machines can safely modify the shared pages without disrupting other virtual machines sharing that memory. Note that writing to a shared page does incur overhead compared to writing to non-shared pages due to the extra work performed in the page fault handler.
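The hash lookup, full comparison, remapping and copy-on-write steps described above can be sketched as a toy Python model. This is only an illustration under stated assumptions: the class and method names are hypothetical, SHA-1 is an arbitrary choice of hash (the text does not say which hash ESX uses), and pages are plain byte strings rather than real memory.

```python
import hashlib


class Host:
    """Toy model of content-based page sharing with copy-on-write."""

    def __init__(self):
        self.frames = {}     # host frame number -> page content
        self.refcount = {}   # host frame number -> number of guest pages mapped to it
        self.by_hash = {}    # content hash -> host frame number (global hash table)
        self.mapping = {}    # (vm, guest page number) -> host frame number
        self._next = 0

    def _new_frame(self, content):
        frame = self._next
        self._next += 1
        self.frames[frame] = content
        self.refcount[frame] = 1
        return frame

    def _forget_hash(self, frame):
        # Drop the hash table entry if it points at this frame.
        h = hashlib.sha1(self.frames[frame]).digest()
        if self.by_hash.get(h) == frame:
            del self.by_hash[h]

    def map_page(self, vm, gpn, content):
        """Back a guest physical page with a fresh private host frame."""
        self.mapping[(vm, gpn)] = self._new_frame(content)

    def scan(self, vm, gpn):
        """Try to share one guest page; return True if a redundant copy was reclaimed."""
        frame = self.mapping[(vm, gpn)]
        content = self.frames[frame]
        h = hashlib.sha1(content).digest()
        shared = self.by_hash.get(h)
        if shared is None:
            self.by_hash[h] = frame          # record this page as a sharing candidate
            return False
        if shared == frame or self.frames[shared] != content:
            return False                     # already shared, or a false hash match
        # Remap the guest page to the shared frame and reclaim the redundant copy.
        self.mapping[(vm, gpn)] = shared
        self.refcount[shared] += 1
        self.refcount[frame] -= 1
        if self.refcount[frame] == 0:
            self._forget_hash(frame)
            del self.frames[frame]
            del self.refcount[frame]
        return True

    def write(self, vm, gpn, content):
        """Write to a guest page, breaking sharing with a private copy if needed."""
        frame = self.mapping[(vm, gpn)]
        if self.refcount[frame] > 1:
            self.refcount[frame] -= 1        # copy-on-write: leave the shared frame alone
            self.mapping[(vm, gpn)] = self._new_frame(content)
        else:
            self._forget_hash(frame)         # content changes, so the old hash is stale
            self.frames[frame] = content
```

A short usage example: two virtual machines each hold a zero-filled page, the scan collapses them into one host frame, and a later write by one virtual machine gets a private copy without disturbing the other.

```python
host = Host()
zero = bytes(4096)
host.map_page("vm1", 0, zero)
host.map_page("vm2", 0, zero)
host.scan("vm1", 0)                    # registers the candidate
host.scan("vm2", 0)                    # shares it, reclaiming one frame
host.write("vm2", 0, b"x" * 4096)      # copy-on-write gives vm2 a private frame
```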

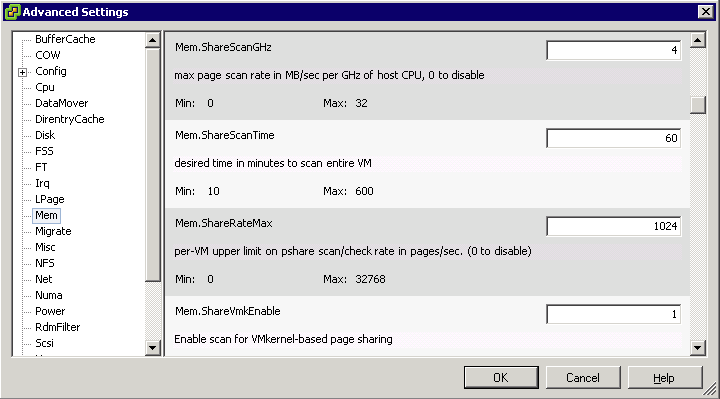

In VMware ESX, the hypervisor scans the guest physical pages randomly with a base scan rate specified by Mem.ShareScanTime, which specifies the desired time to scan the virtual machine’s entire guest memory. The maximum number of scanned pages per second in the host and the maximum number of per-virtual machine scanned pages (that is, Mem.ShareScanGHz and Mem.ShareRateMax respectively) can also be specified in ESX advanced settings. An example is shown in the Figure below.

The default values of these three parameters are carefully chosen to provide sufficient sharing opportunities while keeping the CPU overhead negligible. In fact, ESX intelligently adjusts the page scan rate based on the amount of current shared pages. If the virtual machine’s page sharing opportunity seems to be low, the page scan rate will be reduced accordingly and vice versa. This optimization further mitigates the overhead of page sharing.

In hardware-assisted memory virtualization systems (for example, Intel EPT Hardware Assist and AMD RVI Hardware Assist), ESX will automatically back guest physical pages with large host physical pages (2MB contiguous memory region instead of 4KB for regular pages) for better performance due to fewer TLB misses. In such systems, ESX will not share those large pages because: 1) the probability of finding two large pages having identical contents is low, and 2) the overhead of doing a bit-by-bit comparison for a 2MB page is much larger than for a 4KB page. However, ESX still generates hashes for the 4KB pages within each large page. Since ESX will not swap out large pages, during host swapping the large page will be broken into small pages so that these pre-generated hashes can be used to share the small pages before they are swapped out. In short, we may not observe any page sharing for hardware-assisted memory virtualization systems until host memory is overcommitted.

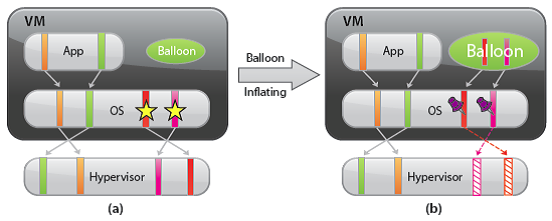

Ballooning

Ballooning is a completely different memory reclamation technique compared to transparent page sharing. Before describing the technique, it is helpful to review why the hypervisor needs to reclaim memory from virtual machines. Due to the virtual machine’s isolation, the guest operating system is not aware that it is running inside a virtual machine and is not aware of the states of other virtual machines on the same host. When the hypervisor runs multiple virtual machines and the total amount of the free host memory becomes low, none of the virtual machines will free guest physical memory because the guest operating system cannot detect the host’s memory shortage. Ballooning makes the guest operating system aware of the low memory status of the host.

In ESX, a balloon driver is loaded into the guest operating system as a pseudo-device driver. (VMware Tools must be installed in order to enable ballooning. This is recommended for all workloads.) The balloon driver has no external interfaces to the guest operating system and communicates with the hypervisor through a private channel. It polls the hypervisor to obtain a target balloon size. If the hypervisor needs to reclaim virtual machine memory, it sets a proper target balloon size for the balloon driver, making it “inflate” by allocating guest physical pages within the virtual machine. The figure below illustrates the process of the balloon inflating.

In the figure below, four guest physical pages are mapped in the host physical memory. Two of the pages are used by the guest application and the other two pages (marked by stars) are in the guest operating system free list. Note that since the hypervisor cannot identify the two pages in the guest free list, it cannot reclaim the host physical pages that are backing them. Assuming the hypervisor needs to reclaim two pages from the virtual machine, it will set the target balloon size to two pages. After obtaining the target balloon size, the balloon driver allocates two guest physical pages inside the virtual machine and pins them, as shown in Figure b. Here, “pinning” is achieved through the guest operating system interface, which ensures that the pinned pages cannot be paged out to disk under any circumstances. Once the memory is allocated, the balloon driver notifies the hypervisor about the page numbers of the pinned guest physical memory so that the hypervisor can reclaim the host physical pages that are backing them. In Figure b, dashed arrows point at these pages. The hypervisor can safely reclaim this host physical memory because neither the balloon driver nor the guest operating system relies on the contents of these pages. This means that no processes in the virtual machine will intentionally access those pages to read/write any values. Thus, the hypervisor does not need to allocate host physical memory to store the page contents. If any of these pages are re-accessed by the virtual machine for some reason, the hypervisor will treat it as a normal virtual machine memory allocation and allocate a new host physical page for the virtual machine. When the hypervisor decides to deflate the balloon—by setting a smaller target balloon size—the balloon driver deallocates the pinned guest physical memory, which releases it for the guest’s applications.

Typically, the hypervisor inflates the virtual machine balloon when it is under memory pressure. By inflating the balloon, a virtual machine consumes less physical memory on the host, but more physical memory inside the guest. As a result, the hypervisor offloads some of its memory overload to the guest operating system while slightly loading the virtual machine. That is, the hypervisor transfers the memory pressure from the host to the virtual machine. Ballooning induces guest memory pressure. In response, the balloon driver allocates and pins guest physical memory. The guest operating system determines if it needs to page out guest physical memory to satisfy the balloon driver’s allocation requests. If the virtual machine has plenty of free guest physical memory, inflating the balloon will induce no paging and will not impact guest performance. In this case, as illustrated in the figure, the balloon driver allocates the free guest physical memory from the guest free list. Hence, guest-level paging is not necessary. However, if the guest is already under memory pressure, the guest operating system decides which guest physical pages to page out to the virtual swap device in order to satisfy the balloon driver’s allocation requests. The genius of ballooning is that it allows the guest operating system to intelligently make the hard decision about which pages to page out without the hypervisor’s involvement.
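The effect of inflating the balloon on the guest can be sketched with a toy Python model that only counts pages. It is an illustration, not how the real balloon driver is implemented: the class and attribute names are hypothetical, and the guest's choice of which pages to page out is reduced to a simple counter.

```python
class Guest:
    """Toy page-count model of a guest OS with a balloon driver."""

    def __init__(self, total_pages, used_pages):
        self.total = total_pages
        self.used = used_pages    # pages holding application or OS data
        self.balloon = 0          # pages allocated and pinned by the balloon driver
        self.paged_out = 0        # pages the guest OS moved to its own swap device

    @property
    def free(self):
        return self.total - self.used - self.balloon

    def set_balloon_target(self, target):
        """Inflate or deflate to `target` pages.

        Returns the number of pages the guest OS had to page out to satisfy
        the balloon driver's allocation (0 if the free list was enough).
        """
        if not 0 <= target <= self.total:
            raise ValueError("target must be between 0 and the guest memory size")
        needed = target - self.balloon
        shortfall = max(0, needed - self.free)
        # Under guest memory pressure, the guest OS itself picks pages to page out.
        self.used -= shortfall
        self.paged_out += shortfall
        self.balloon = target
        return shortfall


if __name__ == "__main__":
    # The example from the text: four pages, two in use, two on the free list.
    relaxed = Guest(total_pages=4, used_pages=2)
    print(relaxed.set_balloon_target(2))   # free list covers it, no guest paging
    # A guest already under pressure: only one free page for a two-page balloon.
    busy = Guest(total_pages=4, used_pages=3)
    print(busy.set_balloon_target(2))      # one page must be paged out by the guest
```

The pages counted in `balloon` are the ones whose backing host physical memory the hypervisor can reclaim. Deflating the balloon (a smaller target) returns them to the guest free list.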

Hypervisor/Host Swapping

In the cases where ballooning and transparent page sharing are not sufficient to reclaim memory, ESX employs hypervisor swapping to reclaim memory. At virtual machine startup, the hypervisor creates a separate swap file for the virtual machine. Then, if necessary, the hypervisor can directly swap out guest physical memory to the swap file, which frees host physical memory for other virtual machines.

Besides the limitation on the reclaimed memory size, both page sharing and ballooning take time to reclaim memory. The page-sharing speed depends on the page scan rate and the sharing opportunity. Ballooning speed relies on the guest operating system’s response time for memory allocation.

In contrast, hypervisor swapping is a guaranteed technique to reclaim a specific amount of memory within a specific amount of time. However, hypervisor swapping is used as a last resort to reclaim memory from the virtual machine due to the following limitations on performance:

- Page selection problems: Under certain circumstances, hypervisor swapping may severely penalize guest performance. This occurs when the hypervisor has no knowledge about which guest physical pages should be swapped out, and the swapping may cause unintended interactions with the native memory management policies in the guest operating system.

- Double paging problems: Another known issue is the double paging problem. Assuming the hypervisor swaps out a guest physical page, it is possible that the guest operating system pages out the same physical page, if the guest is also under memory pressure. This causes the page to be swapped in from the hypervisor swap device and immediately to be paged out to the virtual machine’s virtual swap device.

Page selection and double-paging problems exist because the information needed to avoid them is not available to the hypervisor.

- High swap-in latency: Swapping in pages is expensive for a VM. If the hypervisor swaps out a guest page and the guest subsequently accesses that page, the VM will get blocked until the page is swapped in from disk. High swap-in latency, which can be tens of milliseconds, can severely degrade guest performance.

ESX mitigates the impact of interacting with guest operating system memory management by randomly selecting the swapped guest physical pages.

Memory Compression

The idea of memory compression is very straightforward: if the swapped out pages can be compressed and stored in a compression cache located in main memory, the next access to the page only causes a page decompression, which can be an order of magnitude faster than a disk access. With memory compression, only a few uncompressible pages need to be swapped out if the compression cache is not full. This means the number of future synchronous swap-in operations will be reduced. Hence, it may improve application performance significantly when the host is under heavy memory pressure. In ESX 4.1, only the swap candidate pages will be compressed. This means ESX will not proactively compress guest pages when host swapping is not necessary. In other words, memory compression does not affect workload performance when host memory is undercommitted.

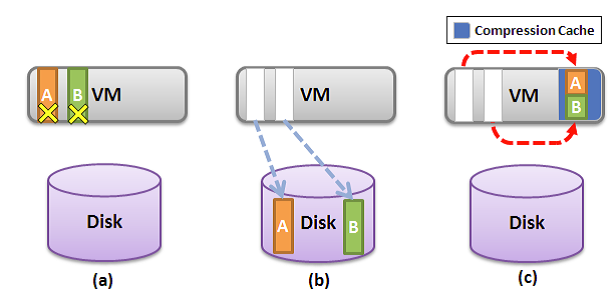

Reclaiming Memory through Compression

Figures a, b and c illustrate how memory compression reclaims host memory compared to host swapping. Assume ESX needs to reclaim two 4KB physical pages from a VM through host swapping, and pages A and B are the selected pages (Figures a, b and c). With host swapping only, these two pages will be directly swapped to disk and two physical pages are reclaimed (Figure b). However, with memory compression, each swap candidate page will be compressed and stored using 2KB of space in a per-VM compression cache. Note that page compression is much faster than the normal page swap-out operation, which involves disk I/O. Page compression will fail if the compression ratio is less than 50%, and the uncompressible pages will be swapped out. As a result, every successful page compression is counted as reclaiming 2KB of physical memory. As illustrated in Figure c, pages A and B are compressed and stored as half-pages in the compression cache. Although both pages are removed from VM guest memory, the actual reclaimed memory size is one page.

If a subsequent memory access misses in the VM guest memory, the compression cache will be checked first using the host physical page number. If the page is found in the compression cache, it will be decompressed and pushed back to the guest memory. This page is then removed from the compression cache. Otherwise, the memory request is sent to the host swap device and the VM is blocked.
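The compress-or-swap decision and the lookup order on a later access can be sketched in Python. This is a minimal illustration under stated assumptions: zlib stands in for whatever compressor ESX actually uses (the text does not say), the compression cache and swap file are plain dictionaries, and the function names are hypothetical.

```python
import os
import zlib

PAGE_SIZE = 4096
HALF_PAGE = PAGE_SIZE // 2   # a compressed page must fit in 2KB


def reclaim_page(page_number, page, compression_cache, swap_file):
    """Compress a swap-candidate page if it fits in half a page, else swap it out."""
    compressed = zlib.compress(page)
    if len(compressed) <= HALF_PAGE:
        compression_cache[page_number] = compressed
        return "compressed"
    swap_file[page_number] = page    # uncompressible page goes to disk
    return "swapped"


def access_page(page_number, compression_cache, swap_file):
    """Bring a reclaimed page back, checking the compression cache first."""
    if page_number in compression_cache:
        # Fast path: decompress and remove the entry from the cache.
        return zlib.decompress(compression_cache.pop(page_number))
    # Slow path: in the real system this is a disk read and the VM is blocked.
    return swap_file.pop(page_number)


if __name__ == "__main__":
    cache, swap = {}, {}
    print(reclaim_page(1, b"A" * PAGE_SIZE, cache, swap))        # highly compressible
    print(reclaim_page(2, os.urandom(PAGE_SIZE), cache, swap))   # random data rarely compresses
    restored = access_page(1, cache, swap)
    print(len(restored), 1 in cache)
```

Random bytes are used for the second page simply because they are effectively uncompressible, which forces the swap path.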

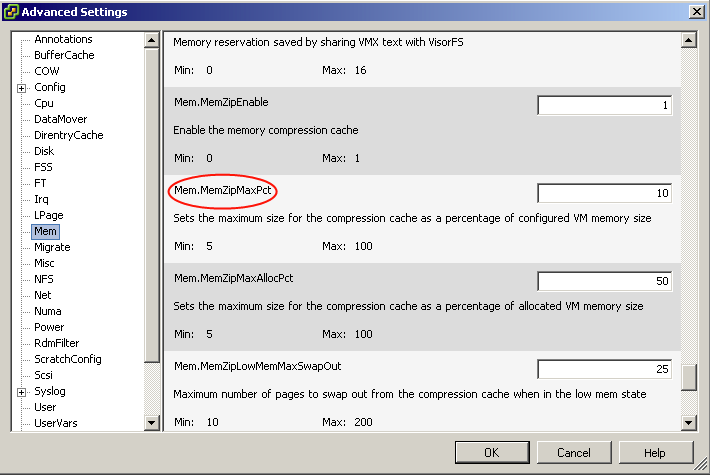

Managing Per-VM Compression Cache

The per-VM compression cache is accounted for by the VM’s guest memory usage, which means ESX will not allocate additional host physical memory to store the compressed pages. The compression cache is transparent to the guest OS. Its size starts with zero when host memory is undercommitted and grows when virtual machine memory starts to be swapped out.

If the compression cache is full, one compressed page must be replaced in order to make room for a new compressed page. An age-based replacement policy is used to choose the target page. The target page will be decompressed and swapped out. ESX will not swap out compressed pages.

If pages belonging to the compression cache need to be swapped out under severe memory pressure, the compression cache size is reduced and the affected compressed pages are decompressed and swapped out.

The maximum compression cache size is important for maintaining good VM performance. If the upper bound is too small, many replaced compressed pages must be decompressed and swapped out, and any subsequent swap-ins of those pages will hurt VM performance. However, since the compression cache is accounted for by the VM’s guest memory usage, a very large compression cache may waste VM memory and unnecessarily create VM memory pressure, especially when most compressed pages will not be touched in the future. In ESX 4.1, the default maximum compression cache size is conservatively set to 10% of configured VM memory size. This value can be changed through the vSphere Client in Advanced Settings by changing the value for Mem.MemZipMaxPct.
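To get a feel for what the 10% default means for a given VM, the upper bound can be worked out directly. This is a rough sketch: the function names are made up, and the assumption that every compressed page occupies exactly one 2KB slot comes from the half-page description earlier in this post.

```python
HALF_PAGE = 2048  # bytes used by one compressed page


def max_cache_bytes(configured_vm_memory_mb, mem_zip_max_pct=10):
    """Upper bound of the compression cache in bytes.

    mem_zip_max_pct mirrors the Mem.MemZipMaxPct advanced setting (default 10).
    """
    return configured_vm_memory_mb * 1024 * 1024 * mem_zip_max_pct // 100


def max_compressed_pages(configured_vm_memory_mb, mem_zip_max_pct=10):
    """How many 2KB compressed pages fit in the cache at its maximum size."""
    return max_cache_bytes(configured_vm_memory_mb, mem_zip_max_pct) // HALF_PAGE


# A VM configured with 4096 MB of memory.
print(max_cache_bytes(4096))
print(max_compressed_pages(4096))
```

For a 4GB VM this gives a cache of roughly 410MB at most, enough for a little over 200,000 compressed pages.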

When to Reclaim Memory

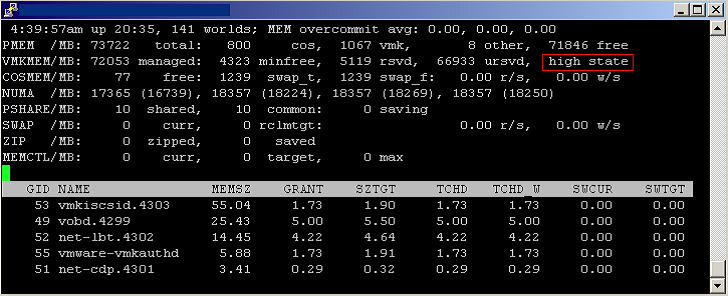

ESX maintains four host free memory states: high, soft, hard, and low, which are reflected by four thresholds: 6%, 4%, 2%, and 1% of host memory respectively. Figure 8 shows how the host free memory state is reported in esxtop.

By default, ESX enables page sharing since it opportunistically “frees” host memory with little overhead. When to use ballooning or swapping (which activates memory compression) to reclaim host memory is largely determined by the current host free memory state.

In the high state, the aggregate virtual machine guest memory usage is smaller than the host memory size. Whether or not host memory is overcommitted, the hypervisor will not reclaim memory through ballooning or swapping. (This is true only when the virtual machine memory limit is not set.)

If host free memory drops towards the soft threshold, the hypervisor starts to reclaim memory using ballooning. Ballooning happens before free memory actually reaches the soft threshold because it takes time for the balloon driver to allocate and pin guest physical memory. Usually, the balloon driver is able to reclaim memory in a timely fashion so that the host free memory stays above the soft threshold.

If ballooning is not sufficient to reclaim memory or the host free memory drops towards the hard threshold, the hypervisor starts to use swapping in addition to using ballooning. During swapping, memory compression is activated as well. With host swapping and memory compression, the hypervisor should be able to quickly reclaim memory and bring the host memory state back to the soft state.

In a rare case where host free memory drops below the low threshold, the hypervisor continues to reclaim memory through swapping and memory compression, and additionally blocks the execution of all virtual machines that consume more memory than their target memory allocations.
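The threshold logic above can be summarised in a short sketch. It is deliberately simplified and makes assumptions: each threshold is treated as a hard cut-off (in reality ballooning starts a little before the soft threshold is reached), page sharing is left out because it is always on, and the function names are hypothetical.

```python
# Thresholds as percentages of host memory, taken from the text above.
THRESHOLDS = [("high", 6.0), ("soft", 4.0), ("hard", 2.0), ("low", 1.0)]


def thresholds_crossed(free_pct):
    """Names of the thresholds that free host memory has fallen below."""
    return [name for name, pct in THRESHOLDS if free_pct < pct]


def reclamation_techniques(free_pct):
    """Simplified mapping from free host memory to reclamation techniques."""
    crossed = thresholds_crossed(free_pct)
    techniques = []
    if "soft" in crossed:
        techniques.append("ballooning")
    if "hard" in crossed:
        # Swapping also activates memory compression.
        techniques += ["swapping", "memory compression"]
    if "low" in crossed:
        techniques.append("block VMs above their target allocation")
    return techniques


for pct in (7.0, 3.0, 1.5, 0.5):
    print(pct, reclamation_techniques(pct))
```

This ignores the per-VM memory limit case, where reclamation happens regardless of the host free memory state.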

In certain scenarios, host memory reclamation happens regardless of the current host free memory state. For example, even if host free memory is in the high state, memory reclamation is still mandatory when a virtual machine’s memory usage exceeds its specified memory limit. If this happens, the hypervisor will employ ballooning and, if necessary, swapping and memory compression to reclaim memory from the virtual machine until the virtual machine’s host memory usage falls back to its specified limit.

VMware vSphere 5 Memory Management and Monitoring diagram

The diagram from this KB is fantastic for showing the interoperability between Memory Management Techniques

SMP (Symmetric Multi Processing)

VMware® Virtual Symmetric Multi-Processing (SMP) enhances virtual machine performance by enabling a single virtual machine to use multiple physical processors simultaneously. A unique VMware feature, Virtual SMP™ enables virtualization of the most processor- and resource-intensive enterprise applications such as databases, ERP and CRM.

How Is VMware Virtual SMP Used in the Enterprise?

- Create development and testing environments that are more realistic and can be quickly and easily deployed

- Run resource intensive applications in virtualized environments.

- Run enterprise applications such as databases, ERP and CRM in virtual machines.

- Scale computing environments without adding new hardware. Allow multiple processors to work together on a workload and increase utilization of existing resources.

- Improve software development and deployment.

How Does VMware Virtual SMP Work?

VMware Virtual SMP makes it possible for a single virtual machine to span up to four physical processors, or CPUs. These processors share the same memory, and work on any task regardless of the location of the task in memory. Virtual SMP co-schedules non-idle virtual processors synchronously while allowing over-commitment of the processors. Idle virtual processors can be de-scheduled with the guest operating system running inside the virtual machine and then re-used for other tasks. Virtual SMP periodically moves processing tasks between the available processors to re-balance the workload. Virtual SMP has built-in controls to minimize overhead on the system.

General Information

- Poor performance of servers (if any) can be attributed to too many CPUs, as the wait time for CPU can increase if there are too many vCPUs (for example, a single-threaded app on a multi-vCPU VM).

- As long as the operating system is designed to support SMP, it is responsible for balancing the processes among all the available CPUs as evenly as it can. In most cases, adding more CPUs to an SMP system does not increase throughput in direct proportion to the new resources, because workloads cannot always take efficient advantage of multiple CPUs. There is also some overhead involved in sharing resources and scheduling processes. For example, a four-CPU SMP system is not four times as productive as a single-CPU system. The efficiency of a multiple-CPU SMP system, versus a single-CPU system, is defined by the workload’s ability to scale, or the workload’s scaling ratio. This scaling ratio varies for different workloads.

- SMP systems are also affected by the need for locking and synchronization of resources. Before a task can modify a shared data item, it must ensure that no other task will change the data item. This is usually done by means of a lock. While a process or thread is waiting to obtain a lock, it is not productive. Further, while a thread is waiting for the lock, some of its cache lines may be replaced. Thus, when the thread is scheduled again, it may experience higher memory latency. The operating system’s kernel contains many shared data items, so it must perform synchronization internally. Synchronization delays can occur even in an application program that does not share data with other programs because the kernel services have to serialize shared kernel data. In addition to lock contention, path length also increases because more code is being executed.

- The main advantage of deploying an SMP system is the ability to use multiple processors simultaneously to execute different tasks constituting a program, thereby increasing the throughput (for example, the number of transactions per second) compared to a single-CPU system. Only workloads that support parallelisation (including multiple processes or multiple threads that can run in parallel) can benefit from SMP. Single-threaded workloads can be scheduled to only one CPU at a time and thus cannot take advantage of additional CPUs being available. On the other hand, some modern applications with significant computational components have built-in multi-threaded structures and a high scalability ratio. Good examples of the latter are Microsoft® SQL Server and Microsoft Exchange. Microsoft recommends deploying these applications on SMP systems with at least two CPUs.
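The points above about scaling can be made concrete with Amdahl's law. Note that this is a standard textbook model brought in here for illustration, not something taken from the VMware material; it also ignores the locking and scheduling overhead mentioned above, so real results will be worse. The function names are made up.

```python
def amdahl_speedup(parallel_fraction, cpus):
    """Ideal speedup on `cpus` CPUs when only part of the work can run in parallel."""
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    if cpus < 1:
        raise ValueError("cpus must be at least 1")
    serial_fraction = 1.0 - parallel_fraction
    return 1.0 / (serial_fraction + parallel_fraction / cpus)


def scaling_efficiency(parallel_fraction, cpus):
    """Speedup per CPU: 1.0 would mean perfect linear scaling."""
    return amdahl_speedup(parallel_fraction, cpus) / cpus


# A single-threaded workload gains nothing from four CPUs.
print(amdahl_speedup(0.0, 4))
# A workload that is 90% parallel still falls well short of 4x on four CPUs.
print(round(amdahl_speedup(0.9, 4), 2))
```

The single-threaded case stays at a speedup of 1.0 however many vCPUs are added, which is why giving such a VM extra vCPUs only adds scheduling overhead.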