What is Storage I/O Control?

*VMware Enterprise Plus License Feature

Set an equal baseline and then define priority access to storage resources according to established business rules. Storage I/O Control (SIOC) triggers a pre-programmed response when access to a storage resource becomes contended.

With VMware Storage I/O Control, you can configure rules and policies to specify the business priority of each VM. When I/O congestion is detected, Storage I/O Control dynamically allocates the available I/O resources to VMs according to your rules, enabling you to:

- Improve service levels for critical applications

- Virtualize more types of workloads, including I/O-intensive business-critical applications

- Ensure that each cloud tenant gets their fair share of I/O resources

- Increase administrator productivity by reducing the amount of active performance management required

- Increase flexibility and agility of your infrastructure by reducing your need for storage volumes dedicated to a single application

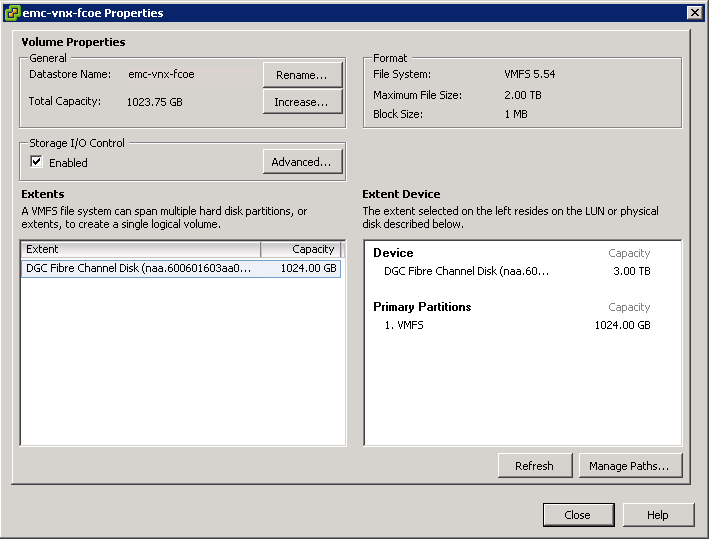

How is it configured?

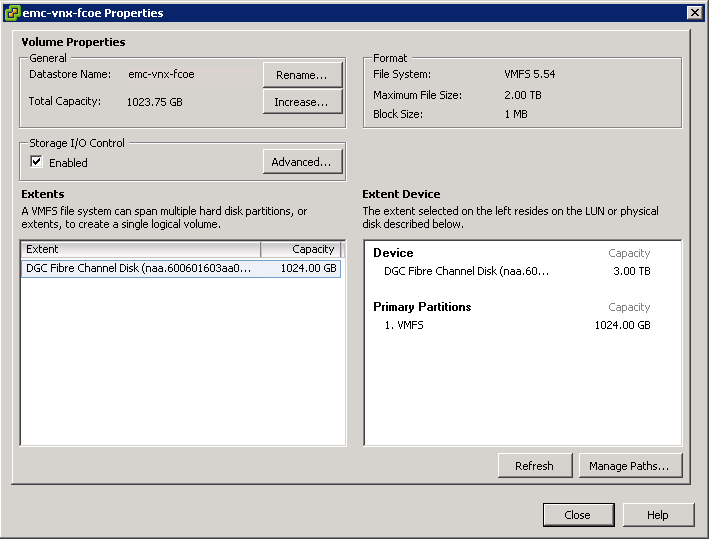

It’s quite straightforward to do. First you have to enable it on the datastores. You only need additional configuration steps, such as setting shares on a per-VM basis, if you want to prioritize a certain VM’s I/Os. Yes, this can be a bit tedious if you have many VMs that you want to change from the default shares value. But this only needs to be done once, and after that SIOC is up and running without any additional tweaking needed.

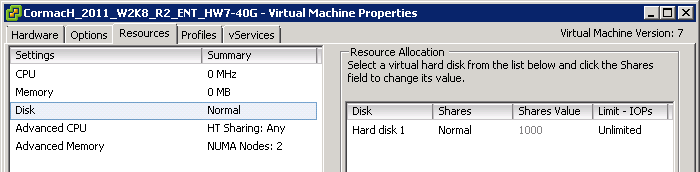

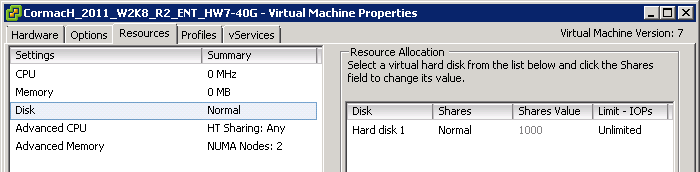

The shares mechanism is triggered when the latency to a particular datastore rises above the pre-defined latency threshold seen earlier. Note that the latency is calculated cluster-wide. Storage I/O Control also lets you cap the number of IOPS that a particular VM can generate to a shared datastore. The Shares and IOPS values are configured on a per-VM basis. Edit the Settings of the VM, select the Resources tab, and the Disk setting will allow you to set the Shares value used when contention arises (set to Normal/1000 by default) and to limit the IOPS that the VM can generate on the datastore (set to Unlimited by default).
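To make the shares and limit settings a bit more concrete, here is a minimal Python sketch of how they could interact. It is a simplified model, not VMware's actual algorithm: the `VmDisk` class, the `allocate_iops` function and the `capacity_iops` figure are all hypothetical names and numbers made up for illustration. The only values taken from the text are the default of 1000 shares, the Unlimited default for the IOPS limit, and the 30ms threshold.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class VmDisk:
    name: str
    shares: int = 1000                # "Normal" default mentioned in the text
    iops_limit: Optional[int] = None  # None models the "Unlimited" default


def allocate_iops(
    vms: List[VmDisk],
    capacity_iops: float,             # hypothetical datastore throughput (assumption)
    latency_ms: float,
    threshold_ms: float = 30.0,
) -> Dict[str, Optional[float]]:
    """Return a per-VM IOPS ceiling; None means the VM is not throttled."""
    if latency_ms <= threshold_ms:
        # No congestion: shares are ignored, only explicit limits apply.
        return {
            vm.name: (float(vm.iops_limit) if vm.iops_limit is not None else None)
            for vm in vms
        }

    # Congestion: split capacity in proportion to shares, honouring limits.
    result: Dict[str, Optional[float]] = {}
    remaining = float(capacity_iops)
    active = list(vms)
    while active:
        total_shares = sum(vm.shares for vm in active)
        # VMs whose limit is below their proportional slice are pinned to the limit.
        capped = [
            vm for vm in active
            if vm.iops_limit is not None
            and vm.iops_limit < remaining * vm.shares / total_shares
        ]
        if not capped:
            for vm in active:
                result[vm.name] = remaining * vm.shares / total_shares
            break
        capped_names = {vm.name for vm in capped}
        for vm in capped:
            result[vm.name] = float(vm.iops_limit)
            remaining -= vm.iops_limit
        # Capacity freed by capped VMs is re-split among the rest.
        active = [vm for vm in active if vm.name not in capped_names]
    return result


if __name__ == "__main__":
    vms = [
        VmDisk("db", shares=2000),
        VmDisk("web"),
        VmDisk("batch", iops_limit=500),
    ]
    print(allocate_iops(vms, capacity_iops=4000, latency_ms=45))
    print(allocate_iops(vms, capacity_iops=4000, latency_ms=10))
```

In the first call the latency is above the threshold, so `batch` is held at its 500 IOPS limit and `db` and `web` split the remaining 3500 IOPS 2:1. In the second call the latency is below the threshold, so shares play no part and only the explicit limit on `batch` applies.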

Why enable it?

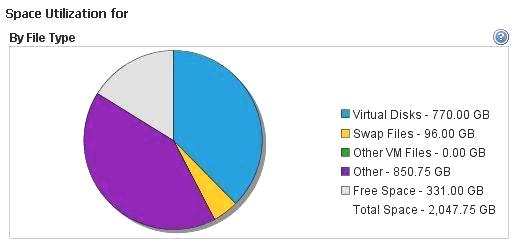

The thing is, without SIOC you could definitely hit the noisy neighbour problem, where one VM uses more than its fair share of resources and impacts other VMs residing on the same datastore. By simply enabling SIOC on that datastore, the algorithms will ensure fairness across all VMs sharing it, as they all have the same number of shares by default. This is a great reason for admins to use this feature when it is available to them. Another cool feature is that once SIOC is enabled, additional performance counters become available which you typically don’t have.
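The noisy neighbour effect can be shown with a toy comparison. The sketch below is only an illustration under stated assumptions: it assumes that without SIOC a saturated datastore serves VMs roughly in proportion to how much I/O they issue, and that with equal default shares each VM gets an equal slice, with unused capacity handed back to the others. The function names, demand figures and capacity are hypothetical.

```python
from typing import Dict


def proportional_to_demand(demands: Dict[str, float], capacity: float) -> Dict[str, float]:
    """Assumed 'no SIOC' model: whoever issues the most I/O gets the most."""
    total = sum(demands.values())
    if total <= capacity:
        return {name: float(d) for name, d in demands.items()}
    return {name: d * capacity / total for name, d in demands.items()}


def equal_shares(demands: Dict[str, float], capacity: float) -> Dict[str, float]:
    """Assumed 'SIOC with default shares' model: equal slices, leftovers re-split."""
    alloc: Dict[str, float] = {}
    remaining = float(capacity)
    pending = dict(demands)
    while pending:
        fair_slice = remaining / len(pending)
        # VMs that want no more than the fair slice are fully served.
        satisfied = {n: d for n, d in pending.items() if d <= fair_slice}
        if not satisfied:
            for name in pending:
                alloc[name] = fair_slice
            break
        for name, d in satisfied.items():
            alloc[name] = float(d)
            remaining -= d
            del pending[name]
    return alloc


if __name__ == "__main__":
    demands = {"noisy": 5000, "app1": 800, "app2": 800}  # requested IOPS (made up)
    print(proportional_to_demand(demands, capacity=3000))
    print(equal_shares(demands, capacity=3000))
```

In this made-up scenario the two well-behaved VMs are squeezed to less than half of what they ask for in the first model, while in the equal-shares model they are fully served and the noisy VM gets only what is left over.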

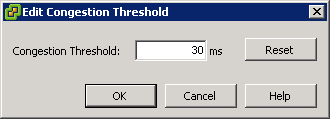

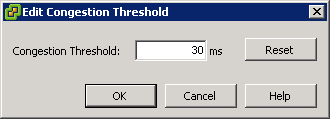

What threshold should you set?

30ms is an appropriate threshold for most applications. However, you may want to have a discussion with your storage array vendor, as they often make recommendations around latency threshold values for SIOC.

Problems

You may find that SIOC reports an external I/O workload on a shared datastore. One reason that this can occur is when the back-end disks/spindles have other LUNs built on them, and these LUNs are presented to non-ESXi hosts. Check out KB 1020651 for details on how to address this, as well as previous posts and this write-up:

http://www.electricmonk.org.uk/2012/04/20/external-io-workload-detected-on-shared-datastore-running-storage-io-control-sioc/