Intro

For the past decade, processor clock speeds have skyrocketed at rates exceeding even the predictions of Moore's Law. A multi-gigahertz CPU, however, needs to be supplied with an enormous amount of memory bandwidth in order to do its processing effectively.

Even a single CPU running a memory-intensive workload, such as a scientific computing application, can find itself constrained by memory bandwidth.

These problems are amplified many times over on symmetric multiprocessing (SMP) systems, where many processors must compete for bandwidth on the same system bus.

What is NUMA?

NUMA stands for Non-Uniform Memory Access.

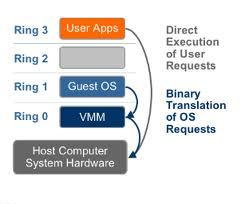

NUMA is an alternative approach that links several small, cost-effective nodes via a high-performance interconnect. Each node contains both processors and memory, much like a small SMP system. However, an advanced memory controller allows a node to use memory on all other nodes, creating a single system image. When a processor accesses memory that does not lie within its own node (remote memory), the data must be transferred over the NUMA interconnect, which is slower than accessing local memory. Thus, memory access times are “non-uniform,” depending on the location of the memory, as the technology’s name implies.

So what does Non-Uniform Memory Access really mean?

Non-Uniform Memory Access means that it takes longer to access some regions of memory than others, because some regions of memory are on physically different buses from other regions.

Imagine that you are baking a cake. You have a group of ingredients (= memory pages) that you need to complete the recipe (= process). Some of the ingredients you may have in your cabinet (= local memory), but others you might not have and must ask a neighbor for (= remote memory). The general idea is to keep as many of the ingredients in your own cabinet as possible, since this reduces the time and effort of making the cake.

You also have to remember that your cabinets can only hold a fixed amount of ingredients (= physical nodal memory). If you buy more but have no room to store it, you may have to ask your neighbor to keep it in their cabinet until you need it (= local memory full, so allocate pages remotely).
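
The "local cabinet first, neighbor's cabinet when full" idea can be sketched as a toy allocator. This is only an illustration under simplifying assumptions: the `Node` class, the `allocate_page` function, and the two-page capacities are hypothetical names and numbers, not how any real operating system implements its allocation policy.

```python
from dataclasses import dataclass


@dataclass
class Node:
    """A hypothetical NUMA node with a fixed number of page frames."""
    node_id: int
    capacity: int   # total pages the node can hold (the cabinet size)
    used: int = 0   # pages currently allocated

    def has_room(self) -> bool:
        return self.used < self.capacity


def allocate_page(nodes: list[Node], local_id: int) -> int:
    """Allocate one page, preferring the local node.

    Returns the id of the node that received the page and raises
    MemoryError when every node is full.
    """
    # Try the local node first, then the remaining nodes in list order.
    order = [n for n in nodes if n.node_id == local_id]
    order += [n for n in nodes if n.node_id != local_id]
    for node in order:
        if node.has_room():
            node.used += 1
            return node.node_id
    raise MemoryError("no free pages on any node")


# Two nodes with room for two pages each; the process runs on node 0.
nodes = [Node(0, capacity=2), Node(1, capacity=2)]
placements = [allocate_page(nodes, local_id=0) for _ in range(3)]
print(placements)  # [0, 0, 1]
```

The first two pages land on the local node; the third spills over to the remote node because the local node is full.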

What is meant by Local and Remote Memory?

The terms local memory and remote memory are typically used in reference to a currently running process. Local memory is the memory that is on the same node as the CPU currently running the process. Any memory that does not belong to the node on which the process is currently running is then, by that definition, remote.

Local and remote memory can also be used in reference to things other than the currently running process. In interrupt context there is technically no currently executing process, but memory on the node containing the CPU handling the interrupt is still called local memory. The terms can also be applied to a device such as a disk. For example, if a disk attached to node 1 is doing a DMA transfer, the memory it is reading or writing is called remote if it is located on another node (e.g., node 0).
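
As a rough illustration of these definitions, the sketch below classifies an access as local or remote by comparing the node of the accessing agent (a CPU or a device) with the node holding the memory. The function names and the two-node layout are assumptions made for this example. The CPU-list strings follow the range format ("0-3,8-11") that Linux uses in files such as `/sys/devices/system/node/node0/cpulist`, but the example uses hard-coded strings rather than reading the system.

```python
def parse_cpulist(text: str) -> set[int]:
    """Parse a CPU list such as "0-3,8-11" into a set of CPU ids."""
    cpus: set[int] = set()
    for part in text.strip().split(","):
        if not part:
            continue
        if "-" in part:
            lo, hi = part.split("-")
            cpus.update(range(int(lo), int(hi) + 1))
        else:
            cpus.add(int(part))
    return cpus


def node_of_cpu(cpu: int, cpulists: dict[int, str]) -> int:
    """Return the node whose CPU list contains the given CPU."""
    for node, text in cpulists.items():
        if cpu in parse_cpulist(text):
            return node
    raise ValueError(f"CPU {cpu} is not listed on any node")


def classify(agent_node: int, memory_node: int) -> str:
    """Memory is local when it sits on the same node as the agent using it."""
    return "local" if agent_node == memory_node else "remote"


# Hypothetical layout: CPUs 0-3 on node 0, CPUs 4-7 on node 1.
layout = {0: "0-3", 1: "4-7"}

# A process running on CPU 2 touching memory on node 0 and on node 1.
print(classify(node_of_cpu(2, layout), memory_node=0))  # local
print(classify(node_of_cpu(2, layout), memory_node=1))  # remote

# The disk example: a device attached to node 1 doing DMA to node 0 memory.
print(classify(agent_node=1, memory_node=0))  # remote
```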

What is the difference between NUMA and SMP?

The NUMA architecture was designed to surpass the scalability limits of the SMP architecture. With SMP, which stands for Symmetric Multi-Processing, all memory accesses are posted to the same shared memory bus. This works fine for a relatively small number of CPUs, but the shared bus becomes a problem when dozens, or even hundreds, of CPUs compete for access to it. NUMA alleviates these bottlenecks by limiting the number of CPUs on any one memory bus and connecting the various nodes by means of a high-speed interconnect.

Why should I use NUMA? What are the benefits of NUMA?

The main benefit of NUMA is, as mentioned above, scalability. It is extremely difficult to scale SMP past 8-12 CPUs. At that number of CPUs, the memory bus is under heavy contention. NUMA is one way of reducing the number of CPUs competing for access to a shared memory bus. This is accomplished by having several memory buses and only a small number of CPUs on each of those buses. There are other ways of building massively multiprocessor machines.
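
A back-of-the-envelope calculation shows why fewer CPUs per bus helps. The sketch below assumes the worst case in which every CPU on a bus saturates it at the same time and the bandwidth is shared evenly; the 8 GB/s figure and the `per_cpu_share` helper are made up for illustration, and the NUMA case only holds while accesses stay on the local node.

```python
def per_cpu_share(bus_bandwidth: float, cpus_on_bus: int) -> float:
    """Bandwidth available to each CPU if all CPUs share one bus evenly."""
    if cpus_on_bus <= 0:
        raise ValueError("a bus needs at least one CPU")
    return bus_bandwidth / cpus_on_bus


BUS_GB_PER_S = 8.0  # assumed bandwidth of a single memory bus

# SMP: 16 CPUs on one shared bus.
smp_share = per_cpu_share(BUS_GB_PER_S, cpus_on_bus=16)

# NUMA: the same 16 CPUs split into 4 nodes, each with its own bus.
numa_share = per_cpu_share(BUS_GB_PER_S, cpus_on_bus=4)

print(f"SMP:  {smp_share} GB/s per CPU")   # SMP:  0.5 GB/s per CPU
print(f"NUMA: {numa_share} GB/s per CPU")  # NUMA: 2.0 GB/s per CPU
```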

Issues

The high latency of remote memory accesses can leave the processors under-utilized, constantly waiting for data to be transferred to the local node. The NUMA interconnect can also become a bottleneck for applications with high memory bandwidth demands.

Furthermore, performance on such a system may be highly variable. For example, an application may have its memory located locally on one benchmarking run, while a subsequent run happens to place all that memory on a remote node. This phenomenon can make capacity planning much more difficult. Finally, processor clocks may not be synchronized between nodes, so applications that read these clocks directly may behave incorrectly.
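
To see how placement alone can change the outcome of a benchmark, consider a simple weighted-average model of memory access time. The latencies below (100 ns local, 300 ns remote) are assumed values chosen for the example, not measurements, and `mean_access_ns` is a hypothetical helper.

```python
def mean_access_ns(local_fraction: float, local_ns: float, remote_ns: float) -> float:
    """Average access time when a fraction of accesses hit local memory."""
    if not 0.0 <= local_fraction <= 1.0:
        raise ValueError("local_fraction must be between 0 and 1")
    return local_fraction * local_ns + (1.0 - local_fraction) * remote_ns


LOCAL_NS = 100.0   # assumed local access latency
REMOTE_NS = 300.0  # assumed remote access latency

# Run A: all of the application's memory happens to be local.
run_local = mean_access_ns(1.0, LOCAL_NS, REMOTE_NS)

# Run B: the same application, but all memory was placed on a remote node.
run_remote = mean_access_ns(0.0, LOCAL_NS, REMOTE_NS)

print(run_local, run_remote)  # 100.0 300.0
```

Under these assumptions the same program sees a threefold difference in average memory latency between the two runs, with no change to the code.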

Typical Four-Processor NUMA Node Architecture

High-end servers are designed to support more than one system bus. One design approach is to create a number of nodes, where each node contains some processors, some memory, and, in some cases, an I/O subsystem, as shown in the figure below.

Two Four-Processor NUMA Nodes Connected as an Eight-Processor NUMA System

To increase system capacity, additional nodes are connected using the high-speed, cache-coherent system interconnect, as shown in the diagram.

In the diagram, all eight processors can access memory in both nodes coherently. For example:

- A processor in Node 1 can access memory within Node 1 (that is, local or “near” memory) using a direct path through the memory controller in Node 1.

- For the same processor to access memory in Node 2 (that is, “remote” or “far” memory), the path taken is through the memory controller in Node 1, out through the system interconnect, and then through the memory controller in Node 2.

It takes more time to access memory in another node than it takes to access local memory. This difference in memory access times is the origin of the name for these systems: non-uniform memory access (NUMA).

The ratio of the time taken to access far memory to the time taken to access near memory is referred to as the NUMA ratio. The higher the NUMA ratio (that is, the greater the disparity between the time it takes to access far memory and the time it takes to access near memory), the greater the effect that NUMA characteristics may have on software performance.

A ratio of 3:1 is regarded as optimal.
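
As a small worked example, the NUMA ratio can be computed from a pair of access times. The sketch takes the ratio as far-memory time divided by near-memory time, so that larger values mean a greater disparity. The latencies are assumed figures and the helper names are hypothetical.

```python
def numa_ratio(near_ns: float, far_ns: float) -> float:
    """Far-memory access time divided by near-memory access time."""
    if near_ns <= 0 or far_ns <= 0:
        raise ValueError("access times must be positive")
    return far_ns / near_ns


def format_ratio(ratio: float) -> str:
    """Render a ratio in the N:1 form used in the text."""
    return f"{ratio:g}:1"


# Assumed latencies: 100 ns for near memory, 300 ns for far memory.
print(format_ratio(numa_ratio(near_ns=100.0, far_ns=300.0)))  # 3:1
```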