What is Software-Defined Storage?

VMware’s explanation is: “Software Defined Storage is the automation and pooling of storage through a software control plane, and the ability to provide storage from industry standard servers. This offers a significant simplification to the way storage is provisioned and managed, and also paves the way for storage on industry standard servers at a fraction of the cost.”

(Source: http://cto.vmware.com/vmwares-strategy-for-software-defined-storage/)

SAN Solutions

There are currently two types of software-based SAN solutions:

- Hyper-converged appliances (Nutanix, Scale Computing, SimpliVity and Pivot3)

- Software-only solutions, deployed as a VM on top of a hypervisor (VMware vSphere Storage Appliance, Maxta, HP StoreVirtual VSA and EMC ScaleIO)

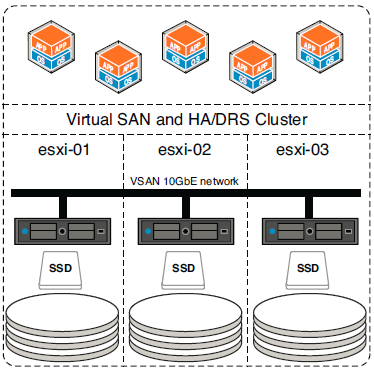

VSAN 5.5

VSAN is also a software-only solution, but it differs significantly from the VSAs listed above. Rather than running as a virtual storage appliance on top of the hypervisor, VSAN sits in a different layer: it is built into the ESXi kernel itself.

VSAN Features

- Provides scale-out functionality

- Provides resilience

- Storage policies per VM or per virtual disk (QoS)

- Kernel-based solution built directly into the hypervisor

- Performance-critical components, such as the data path and clustering, run in the kernel

- Other components are implemented in the control plane as native user-space agents

- Uses industry-standard hardware

- Simple to use

- Use cases include VDI, test and dev environments, management or DMZ infrastructure, and disaster recovery targets

- Up to 32 hosts per VSAN cluster

- Up to 3,200 VMs in a 32-host VSAN cluster, of which up to 2,048 can be protected by vSphere HA
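The maximums above can be turned into a quick sanity check for a proposed design. This is a minimal sketch: `check_cluster_size` is a hypothetical helper, not part of any VMware tool, and the limits are simply the VSAN 5.5 figures quoted in the list. Note that 3,200 VMs over 32 hosts works out to an average of 100 VMs per host.

```python
# Hypothetical sanity check against the VSAN 5.5 cluster maximums listed above.
from __future__ import annotations

MAX_HOSTS = 32               # hosts per VSAN cluster
MAX_VMS = 3200               # VMs per 32-host VSAN cluster
MAX_HA_PROTECTED_VMS = 2048  # VMs that vSphere HA can protect


def check_cluster_size(hosts: int, vms: int, ha_protected_vms: int) -> list[str]:
    """Return a list of limit violations; an empty list means the design fits."""
    problems = []
    if hosts > MAX_HOSTS:
        problems.append(f"{hosts} hosts exceeds the {MAX_HOSTS}-host limit")
    if vms > MAX_VMS:
        problems.append(f"{vms} VMs exceeds the {MAX_VMS}-VM limit")
    if ha_protected_vms > vms:
        problems.append("cannot protect more VMs than the cluster runs")
    elif ha_protected_vms > MAX_HA_PROTECTED_VMS:
        problems.append(
            f"{ha_protected_vms} HA-protected VMs exceeds the {MAX_HA_PROTECTED_VMS} limit"
        )
    return problems


print(check_cluster_size(32, 3200, 2048))  # []
print(check_cluster_size(33, 3300, 2100))  # three violations
```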

VSAN Requirements

- Local host storage

- All hosts must run vSphere 5.5 Update 1

- Auto Deploy (stateless booting) is not supported by VSAN

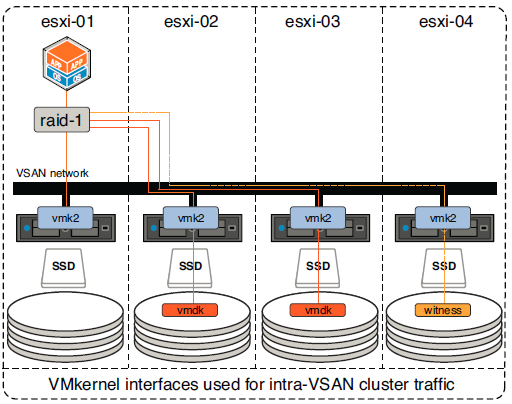

- A VMkernel interface is required (1GbE minimum, 10GbE recommended). This port is used for intra-cluster node communication. It is also used for reads and writes when one of the ESXi hosts in the cluster owns a particular VM but the actual data blocks making up the VM files are located on a different ESXi host in the cluster

- Multicast must be enabled on the VSAN network (Layer 2)

- Supported on vSphere Standard Switches and vSphere Distributed Switches

- Flash disks provide read caching and write buffering for performance; magnetic disks provide capacity

- Each host that contributes storage must have at least 1 flash disk and 1 magnetic disk

- A minimum of 3 hosts per cluster is required to create a VSAN

- Other hosts can use the VSAN datastore without contributing any storage themselves; however, a uniformly contributed cluster, in which every host provides storage, is better for utilization, performance and availability

- Hosts must have a minimum of 6GB RAM; if you are using the maximum number of disk groups (five per host), 32GB is recommended

- VSAN must use a disk controller capable of running in what is commonly referred to as pass-through mode, HBA mode or JBOD mode. In other words, the disk controller should pass the underlying magnetic disks and solid-state disks (SSDs) up to ESXi as individual drives, without a layer of RAID sitting on top. This lets ESXi perform operations directly on the disks without those operations being intercepted and interpreted by the controller

- For disk controllers that do not support pass-through/HBA/JBOD mode, VSAN supports disk drives presented via RAID-0. A volume can be used by VSAN only if its RAID-0 configuration contains a single drive, and this needs to be done for both the magnetic disks and the SSDs
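To make the requirements above concrete, here is a sketch of a pre-flight check over a hand-built host inventory. Everything in it is hypothetical: the `Host` fields, the `(major, minor, update)` version tuple and the controller mode labels are illustrative names, not a VMware API. It treats a host as contributing storage only if it has at least one flash disk and one magnetic disk (hosts with no local disks are allowed as compute-only consumers), and it does not check networking or the HCL.

```python
from __future__ import annotations

from dataclasses import dataclass

# Hypothetical labels for the two supported controller configurations.
ALLOWED_CONTROLLER_MODES = {"passthrough", "raid0-single-drive"}


@dataclass
class Host:
    name: str
    version: tuple[int, int, int]  # (major, minor, update), e.g. (5, 5, 1)
    ram_gb: int
    flash_disks: int
    magnetic_disks: int
    stateless: bool = False               # True if booted with Auto Deploy
    controller_mode: str = "passthrough"  # how local disks are presented


def contributes_storage(h: Host) -> bool:
    # A host adds capacity only with a flash device plus at least one magnetic disk.
    return h.flash_disks >= 1 and h.magnetic_disks >= 1


def validate_cluster(hosts: list[Host]) -> list[str]:
    """Return human-readable problems; an empty list means all checks passed."""
    problems = []
    for h in hosts:
        if h.version < (5, 5, 1):
            problems.append(f"{h.name}: requires vSphere 5.5 Update 1 or later")
        if h.stateless:
            problems.append(f"{h.name}: Auto Deploy (stateless) hosts are not supported")
        if h.ram_gb < 6:
            problems.append(f"{h.name}: at least 6GB RAM is required")
        has_local_disks = h.flash_disks > 0 or h.magnetic_disks > 0
        if has_local_disks and not contributes_storage(h):
            problems.append(f"{h.name}: needs at least 1 flash and 1 magnetic disk")
        if has_local_disks and h.controller_mode not in ALLOWED_CONTROLLER_MODES:
            problems.append(f"{h.name}: controller must use pass-through or single-drive RAID-0")
    # Hosts without local disks may still consume the datastore (compute-only).
    contributing = sum(contributes_storage(h) for h in hosts)
    if contributing < 3:
        problems.append(f"only {contributing} host(s) contribute storage; at least 3 are required")
    return problems


cluster = [Host(f"esx0{i}", (5, 5, 1), 64, 1, 4) for i in range(1, 4)]
print(validate_cluster(cluster))      # []
print(validate_cluster(cluster[:2]))  # ['only 2 host(s) contribute storage; at least 3 are required']
```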

VMware VSAN Compatibility Guide

VSAN has strict, and sometimes complex, requirements for disks, flash devices and disk controllers. Use the HCL link below to confirm that all of your hardware is supported:

http://www.vmware.com/resources/compatibility/search.php?deviceCategory=vsan

The flash device classes designated in the VMware Compatibility Guide are:

- Class A: 2,500–5,000 writes per second

- Class B: 5,000–10,000 writes per second

- Class C: 10,000–20,000 writes per second

- Class D: 20,000–30,000 writes per second

- Class E: 30,000+ writes per second
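The class boundaries can be expressed as a simple lookup. This sketch assumes that a device sitting exactly on a boundary (for example 5,000 writes per second) belongs to the higher class, since the published ranges share their endpoints; anything under 2,500 writes per second returns `None`. `flash_class` is a hypothetical name used only for illustration.

```python
from __future__ import annotations

# Lower bound (writes per second) for each class, highest class first.
# Assumption: a value exactly on a shared boundary goes to the higher class.
FLASH_CLASSES = [
    ("E", 30_000),
    ("D", 20_000),
    ("C", 10_000),
    ("B", 5_000),
    ("A", 2_500),
]


def flash_class(writes_per_second: int) -> str | None:
    """Return the compatibility-guide class letter, or None below Class A."""
    for name, lower_bound in FLASH_CLASSES:
        if writes_per_second >= lower_bound:
            return name
    return None


print(flash_class(7_500))   # B
print(flash_class(30_000))  # E
print(flash_class(1_000))   # None
```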

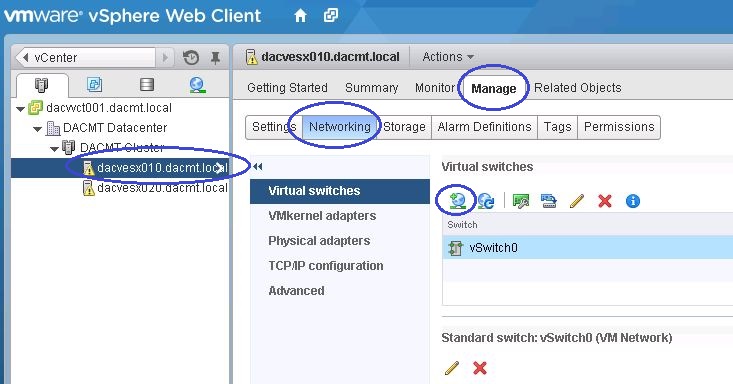

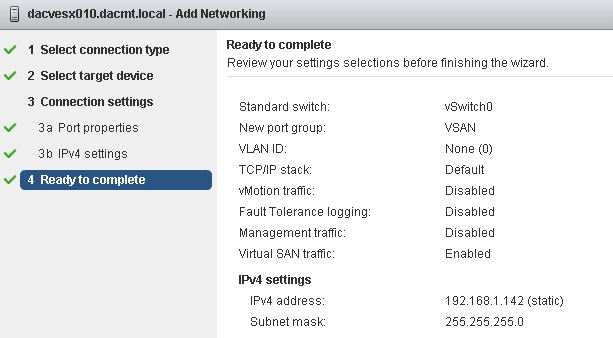

Setting up a VSAN

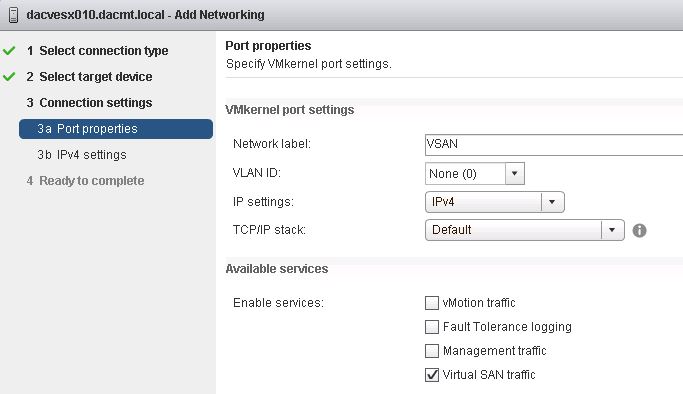

- First, every host needs a VMkernel network adapter with Virtual SAN traffic enabled

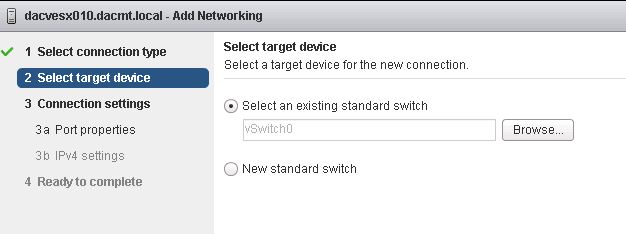

- You can add this port to an existing VSS or VDS, or create a new switch altogether

- Log in to the vSphere Web Client and select the first host

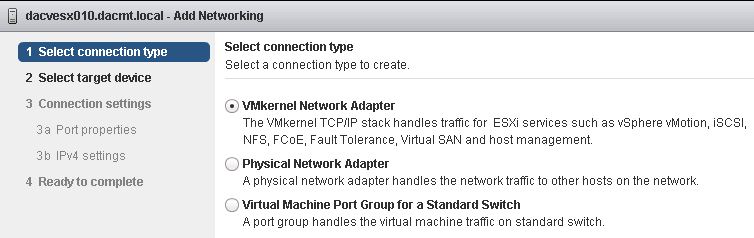

- Click Manage > Networking, then click the Add Networking button

- Keep VMkernel Network Adapter selected

- In my lab I only have two options, but you will usually also be able to select an existing distributed port group

- Check the settings, enter a network label and tick Virtual SAN traffic

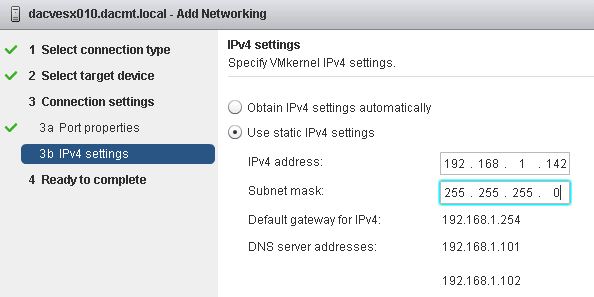

- Enter your network settings

- Review the settings and click Finish

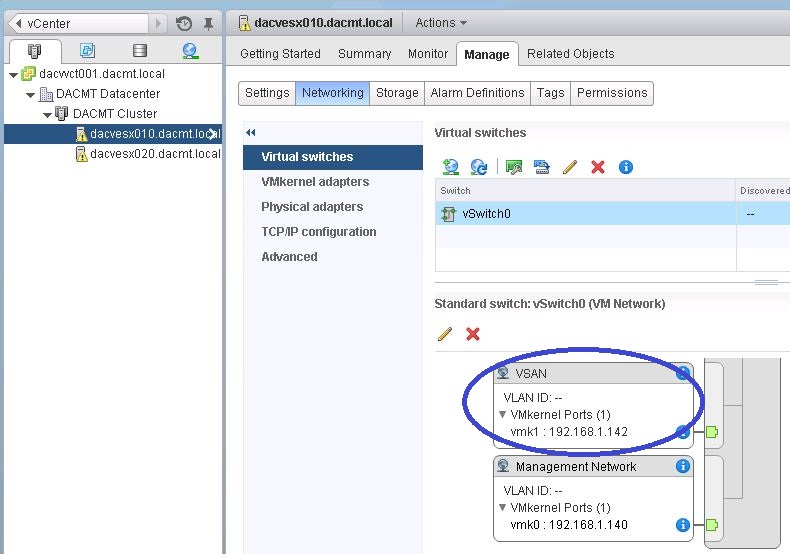

- You should now see your VMkernel port on your switch

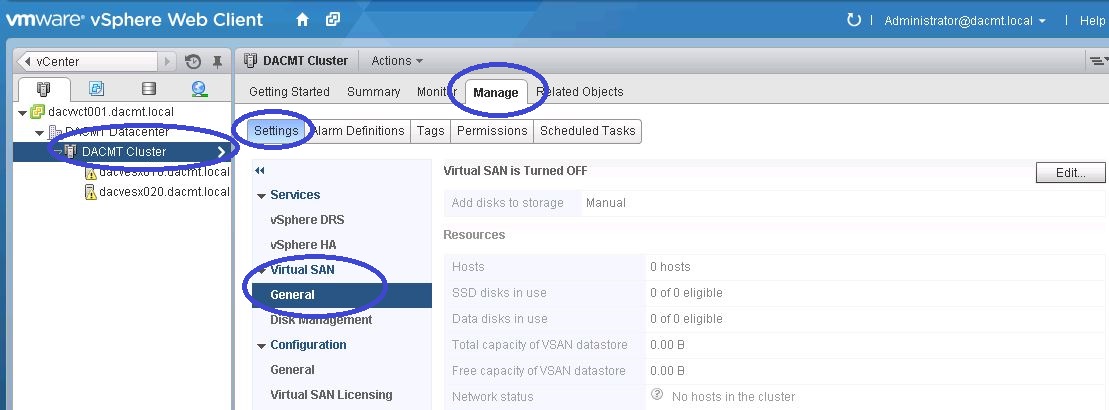
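Before moving on, it is worth confirming that every host really has a VMkernel port tagged for Virtual SAN traffic. The sketch below works on a hand-entered inventory; the `VmkAdapter` fields and `check_vsan_networking` are hypothetical, not a vSphere API. It applies the 1GbE minimum and 10GbE recommendation from the requirements, but it cannot verify that multicast is enabled on the Layer 2 network.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class VmkAdapter:
    device: str           # e.g. "vmk1"
    vsan_traffic: bool    # whether "Virtual SAN traffic" is ticked
    link_speed_mbps: int  # speed of the uplink backing this port


def check_vsan_networking(inventory: dict[str, list[VmkAdapter]]) -> dict[str, str]:
    """Map each host name to 'ok', a 'warn: ...' or an 'error: ...' status."""
    status = {}
    for host, adapters in inventory.items():
        tagged = [a for a in adapters if a.vsan_traffic]
        if not tagged:
            status[host] = "error: no VMkernel adapter has Virtual SAN traffic enabled"
            continue
        fastest = max(a.link_speed_mbps for a in tagged)
        if fastest < 1_000:
            status[host] = "error: VSAN network is slower than the 1GbE minimum"
        elif fastest < 10_000:
            status[host] = "warn: 1GbE is supported, but 10GbE is recommended"
        else:
            status[host] = "ok"
    return status


print(check_vsan_networking({
    "esx01": [VmkAdapter("vmk0", False, 1_000), VmkAdapter("vmk1", True, 10_000)],
    "esx02": [VmkAdapter("vmk1", True, 1_000)],
}))
```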

- Next, select the cluster on which you want to build the VSAN

- Go to Manage > Settings > Virtual SAN > General > Edit

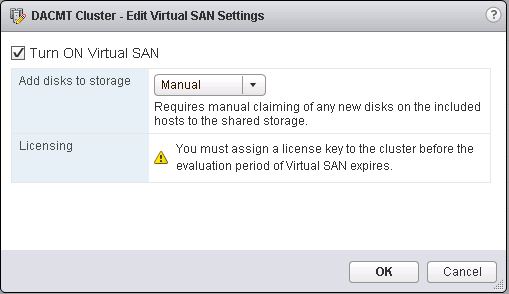

- Next, turn on Virtual SAN. Automatic mode claims all empty local disks on the hosts; alternatively, choose Manual mode to select the disks yourself

- You will need to turn off vSphere HA before you can turn VSAN on or off

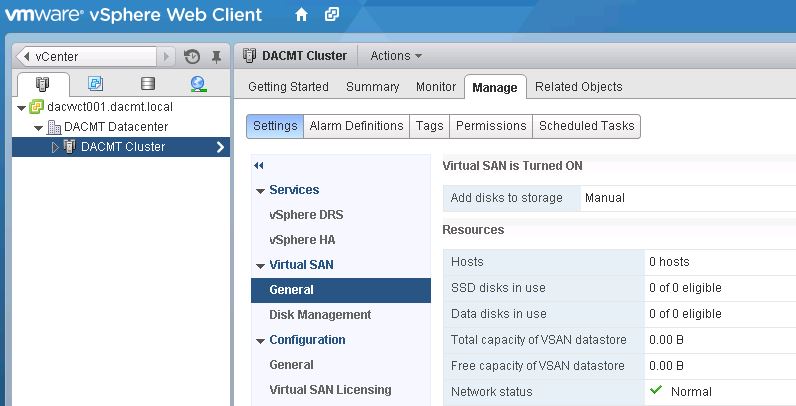
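Because vSphere HA has to be off while VSAN is toggled, the order of operations matters. This hypothetical sketch (`ClusterState` and `set_vsan` are illustrative names, not part of the Web Client or any API) only models the procedure: it switches HA off when needed, changes the VSAN setting, then turns HA back on if it was on to begin with, recording each step.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class ClusterState:
    ha_enabled: bool
    vsan_enabled: bool = False


def set_vsan(cluster: ClusterState, enabled: bool) -> list[str]:
    """Change the VSAN setting and return the ordered steps that were taken."""
    steps = []
    ha_was_on = cluster.ha_enabled
    if ha_was_on:
        # vSphere HA must be off before VSAN can be turned on or off.
        cluster.ha_enabled = False
        steps.append("Turn off vSphere HA")
    cluster.vsan_enabled = enabled
    steps.append(f"Turn {'on' if enabled else 'off'} Virtual SAN")
    if ha_was_on:
        # Restore HA only if it was running to begin with.
        cluster.ha_enabled = True
        steps.append("Turn vSphere HA back on")
    return steps


cluster = ClusterState(ha_enabled=True)
for step in set_vsan(cluster, enabled=True):
    print(step)
```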

- Check that Virtual SAN is turned on

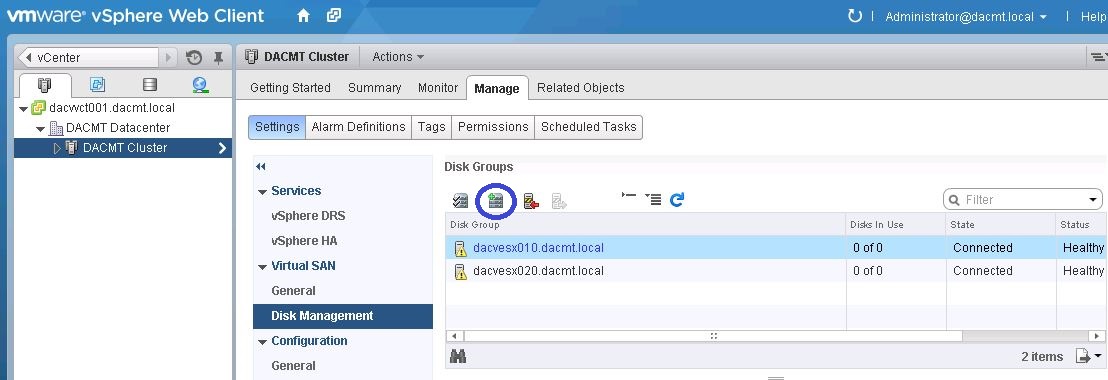

- Next, click Disk Management to create disk groups

- Then click on the Create Disk Group icon (circled in blue)

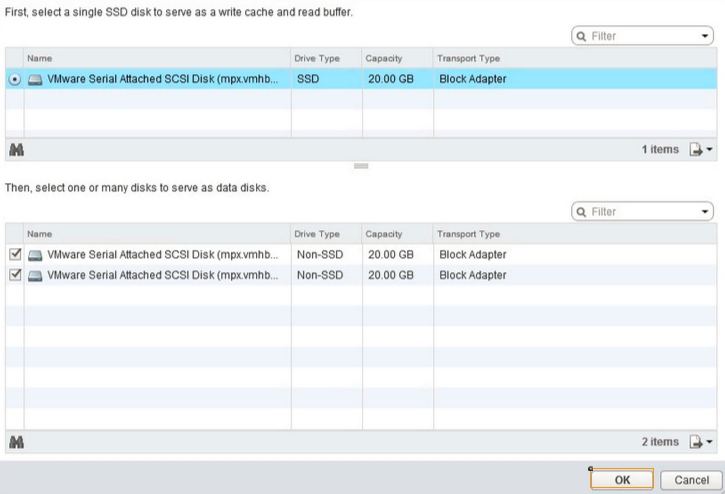

- Each disk group must contain exactly one SSD and up to 7 magnetic disks

- Repeat this for at least 3 hosts in the cluster
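The disk group rules can be checked per host before you start clicking. This sketch assumes the vSphere 5.5 configuration maximums of exactly one SSD and up to seven magnetic disks per disk group, and up to five disk groups per host; `DiskGroup` and `validate_host_disk_groups` are hypothetical names. Adjust the constants if your release differs.

```python
from __future__ import annotations

from dataclasses import dataclass

# Assumed vSphere 5.5 configuration maximums; adjust for other releases.
SSDS_PER_GROUP = 1
MAX_MAGNETIC_PER_GROUP = 7
MAX_GROUPS_PER_HOST = 5


@dataclass
class DiskGroup:
    ssds: int
    magnetic: int


def validate_host_disk_groups(groups: list[DiskGroup]) -> list[str]:
    """Return problems with one host's planned disk groups; empty means valid."""
    problems = []
    if len(groups) > MAX_GROUPS_PER_HOST:
        problems.append(f"{len(groups)} disk groups exceeds the limit of {MAX_GROUPS_PER_HOST}")
    for i, g in enumerate(groups, start=1):
        if g.ssds != SSDS_PER_GROUP:
            problems.append(f"group {i}: needs exactly one SSD, found {g.ssds}")
        if not 1 <= g.magnetic <= MAX_MAGNETIC_PER_GROUP:
            problems.append(
                f"group {i}: needs 1 to {MAX_MAGNETIC_PER_GROUP} magnetic disks, found {g.magnetic}"
            )
    return problems


print(validate_host_disk_groups([DiskGroup(1, 4), DiskGroup(1, 7)]))  # []
```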

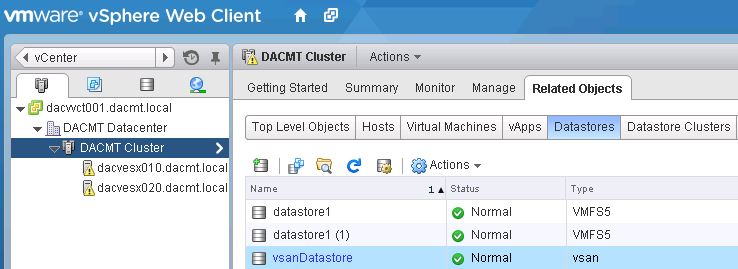

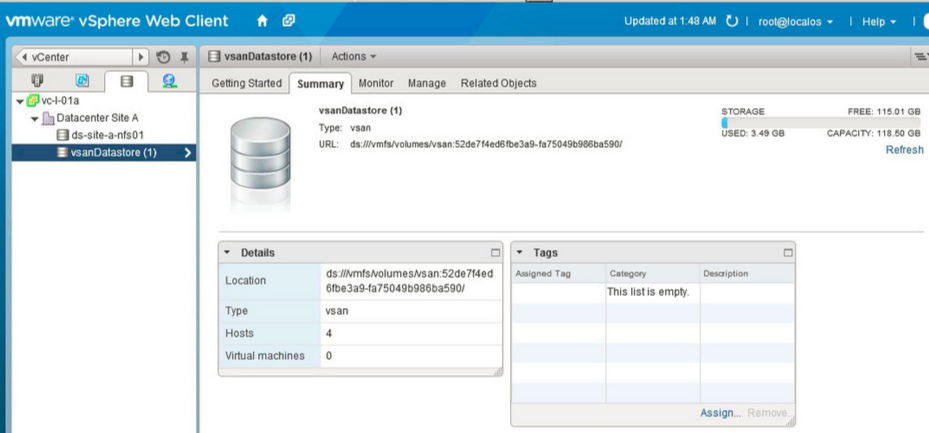

- Next, click Related Objects to view the datastore

- Click the VSAN datastore to view the details

- Note: I have had to use VMware's screenshot, as I didn't have enough resources in my lab to show this

Links

- Main VSAN Page:

- http://www.vmware.com/products/virtual-san/

- Click Through Demo:

- http://featurewalkthrough.vmware.com/#!/virtual-san

- What’s New with VSAN 6.0 Hands-on Lab:

- http://labs.hol.vmware.com/HOL/catalogs/lab/1445