Switch Options

Choosing a virtual switch comes down to cost, manageability, familiarity, and whether the business actually needs the features each option provides. I have linked below to a very handy comparison of the vSphere switches and the Cisco Nexus 1000V. There are too many features to list in a blog post!

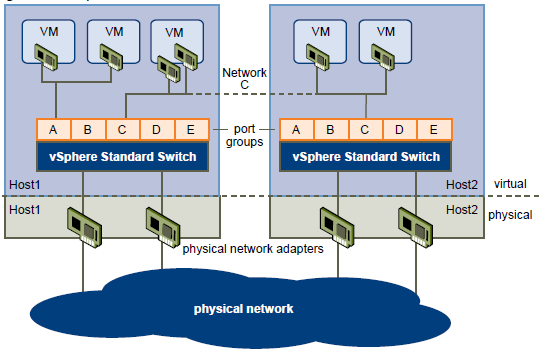

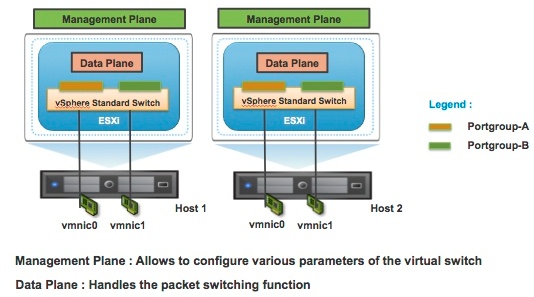

The VMware vSphere Standard Switch (VSS) is the base-level virtual networking alternative. It extends the familiar appearance, configuration, and capabilities of the standard virtual switch (vSwitch) in VMware vSphere 5.

Standard, Advanced and Enterprise License

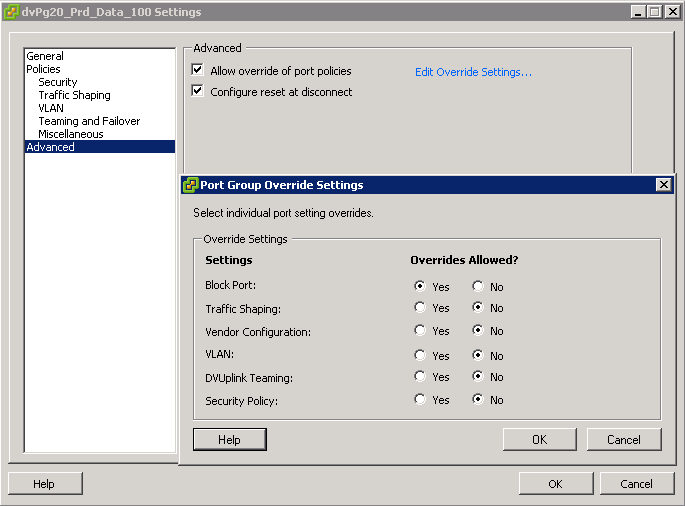

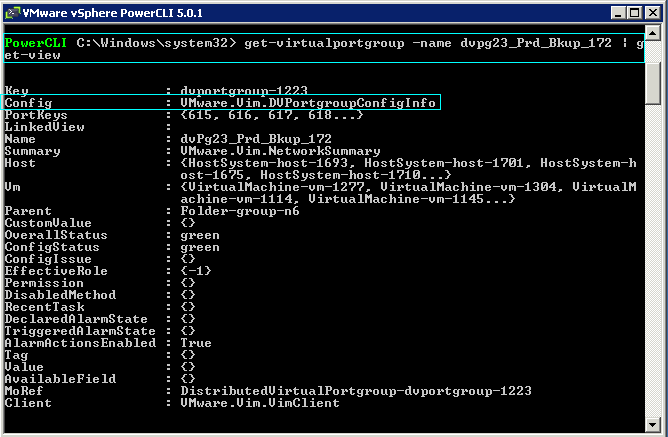

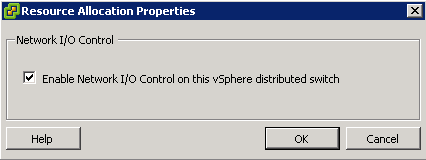

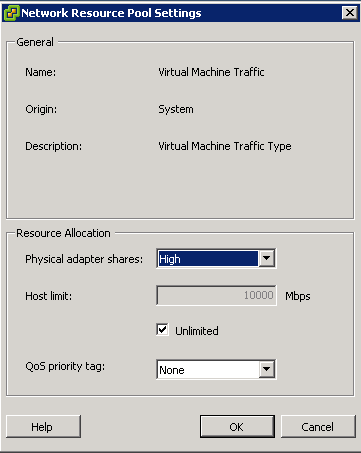

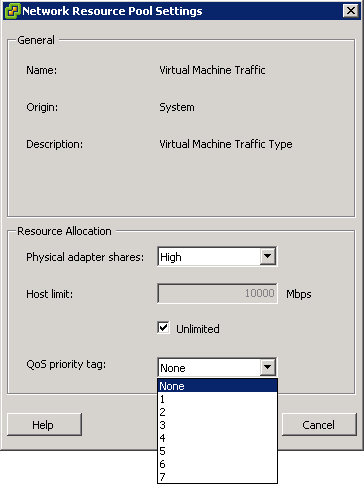

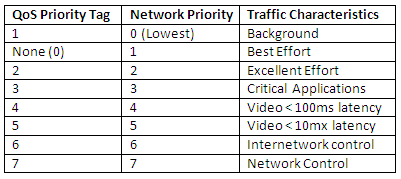

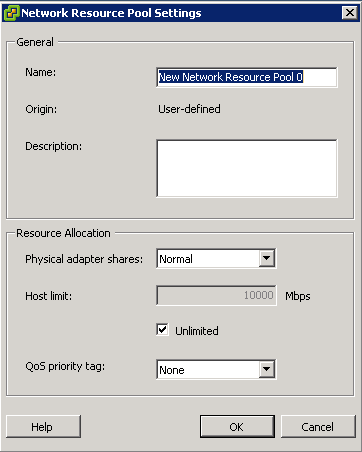

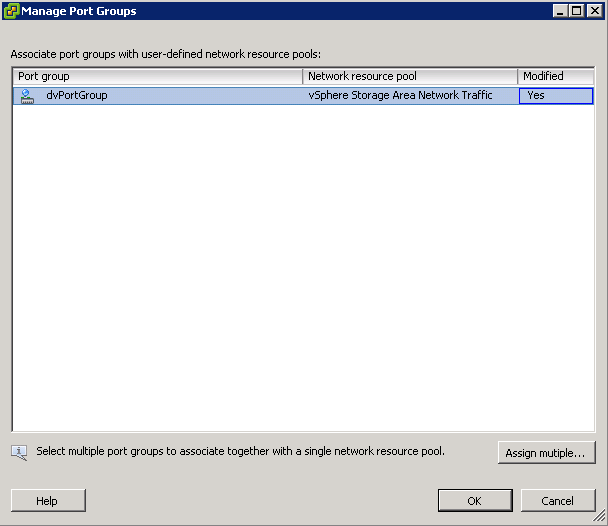

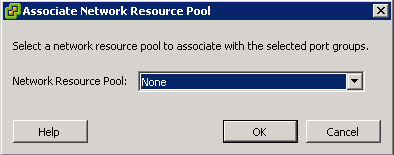

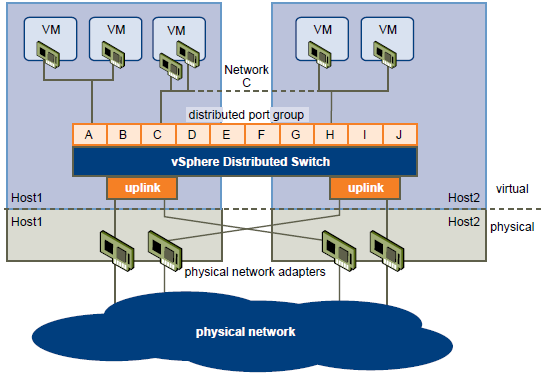

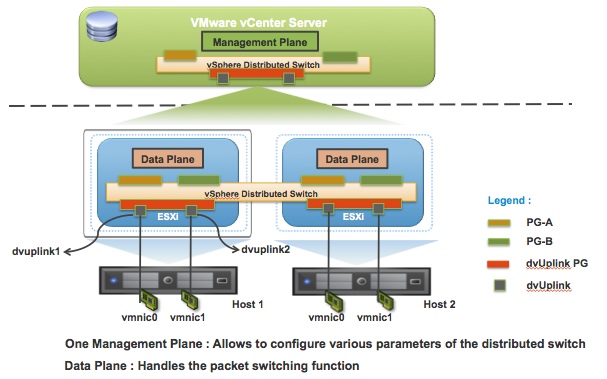

The VMware vSphere Distributed Switch (VDS) extends the feature set of the VMware Standard Switch, while simplifying network provisioning, monitoring, and management through an abstracted, single distributed switch representation of multiple VMware ESX and VMware ESXi™ Servers in a VMware data center. VMware vSphere 5 includes significant advances in virtual switching by providing monitoring, troubleshooting and enhanced Network I/O Control (NIOC) features. The vSphere Distributed Switch adds flexibility to the I/O resource allocation process by introducing user-defined network resource pools. These new features help network administrators manage and troubleshoot their virtual infrastructure using familiar tools, and provide advanced capabilities to manage traffic granularly.

Enterprise Plus License
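
To make the idea of user-defined network resource pools a little more concrete, here is a minimal Python sketch of shares-based allocation, the kind of arithmetic NIOC applies when an uplink is congested. It assumes the uplink is saturated and every pool is actively sending, and it ignores per-pool limits. The function name, pool names and share values are hypothetical, and the code models the arithmetic only; it does not call any VMware API.

```python
def allocate_bandwidth(link_mbps, shares):
    """Split link capacity among active pools in proportion to their shares.

    link_mbps: capacity of the physical uplink in Mbps.
    shares: mapping of pool name -> share value (hypothetical values).
    """
    total = sum(shares.values())
    if total <= 0:
        raise ValueError("at least one pool must have a positive share value")
    return {pool: link_mbps * value / total for pool, value in shares.items()}


# Hypothetical pools on a 10 Gbps uplink; "tenant_a" stands in for a
# user-defined network resource pool.
pools = {"vmotion": 50, "vm_traffic": 100, "tenant_a": 50}

for pool, mbps in allocate_bandwidth(10_000, pools).items():
    print(f"{pool}: {mbps:.0f} Mbps")
```

With these example values, the pool holding half of the total shares gets half of the link under contention, and the other two pools split the rest evenly. When a pool is idle, its portion would be available to the others, which this simple sketch does not model.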

Cisco Nexus 1000V Series Switches are the result of a Cisco and VMware collaboration building on the VMware vNetwork third-party vSwitch API of VMware VDS and the industry-leading switching technology of the Cisco Nexus Family of switches. Featuring the Cisco® NX-OS Software data center operating system, the Cisco Nexus 1000V Series extends the virtual networking feature set to a level consistent with physical Cisco switches and brings advanced data center networking, security, and operating capabilities to the VMware vSphere environment. It provides end-to-end physical and virtual network provisioning, monitoring, and administration with virtual machine-level granularity using common and existing network tools and interfaces. The Cisco Nexus 1000V Series transparently integrates with VMware vCenter™ Server and VMware vCloud™ Director to provide a consistent virtual machine provisioning workflow while offering features well suited for data center-class applications, VMware View, and other mission-critical virtual machine deployments.

The Cisco Nexus 1000V is generally used in large enterprises where the firewalls, core switches and access switches are managed by the network administrators. Whereas the vSphere Distributed Switch is managed by the vSphere administrators, the Cisco Nexus 1000V makes it possible to separate the management of the virtual switches completely and hand it over to the network administrators, without giving them access to the rest of the vSphere platform.

Cisco Licensed

The IBM System Networking Distributed Virtual Switch 5000V is an advanced, feature-rich distributed virtual switch for VMware environments with policy-based virtual machine (VM) connectivity. The IBM Distributed Virtual Switch (DVS) 5000V lets network administrators who are familiar with IBM System Networking switches manage it just like an IBM physical switch, using advanced networking, troubleshooting and management features, so the virtual switch is no longer hidden and difficult to manage.

Support for Edge Virtual Bridging (EVB) based on the IEEE 802.1Qbg standard enables scalable, flexible management of networking configuration and policy requirements per VM and eliminates many of the networking challenges introduced with server virtualization. The IBM DVS 5000V works with VMware vSphere 5.0 and beyond and interoperates with any 802.1Qbg-compliant physical switch to enable switching of local VM traffic in the hypervisor or in the upstream physical switch.

IBM Licensed

Cisco document comparing the VSS, VDS and Cisco Nexus 1000V (the IBM 5000V is not covered)

http://www.cisco.com

IBM 5000V Overview Document

http://www-03.ibm.com