What is Software iSCSI port binding?

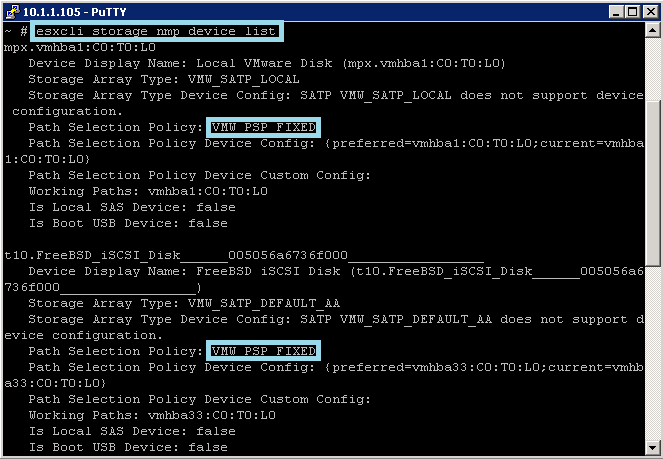

Software iSCSI port binding is the process of creating multiple paths between iSCSI adapters and an iSCSI storage target. By default, ESXi does not set up multipathing for iSCSI adapters, so each target is reachable through only a single path. This is true even if NIC teaming is configured on the VMkernel port used for iSCSI. Software iSCSI port binding is required if your storage must stay accessible when a path fails, or if you want to use load balancing.
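To make the effect of binding more concrete, here is a deliberately simplified model in Python. It assumes that every bound VMkernel port can reach every target portal, and that each bound port opens one path per portal. It also assumes that an unbound software adapter sends iSCSI traffic out of a single VMkernel interface. The function name `paths_per_lun` is hypothetical, not an ESXi API, and real path counts also depend on how the array presents its targets.

```python
def paths_per_lun(bound_vmk_ports: int, target_portals: int) -> int:
    """Rough estimate of the paths to one LUN (simplified model, not an ESXi API).

    Assumes every bound VMkernel port can reach every target portal.
    """
    if bound_vmk_ports < 0 or target_portals < 0:
        raise ValueError("counts must be non-negative")
    # Without port binding, the host routes iSCSI traffic out of a single
    # VMkernel interface, so each portal is reached over one path only.
    vmks_in_use = bound_vmk_ports if bound_vmk_ports > 0 else 1
    return vmks_in_use * target_portals


if __name__ == "__main__":
    print(paths_per_lun(0, 1))  # no port binding, one target portal
    print(paths_per_lun(2, 1))  # two bound VMkernel ports, one target portal
```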

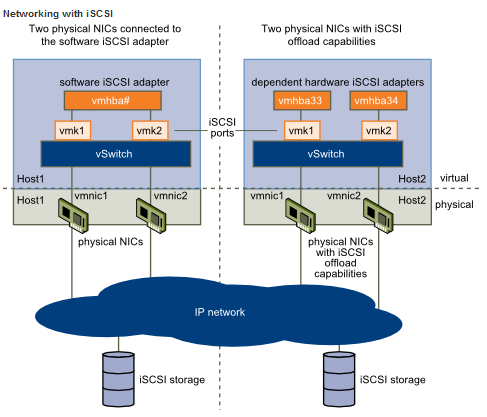

With the software-based iSCSI implementation, you can use standard NICs to connect your host to a remote iSCSI target on the IP network. The software iSCSI adapter that is built into ESXi facilitates this connection by communicating with the physical NICs through the network stack.

Before you can use the software iSCSI adapter, you must:

- Set up networking

- Activate the adapter

- Configure parameters such as discovery addresses and CHAP

Set Up Networking

Software and dependent hardware iSCSI adapters depend on VMkernel networking. If you use the software or dependent hardware iSCSI adapters, you must configure connections for the traffic between the iSCSI component and the physical network adapters. This involves creating a virtual VMkernel interface for each physical network adapter and associating each interface with an appropriate iSCSI adapter.

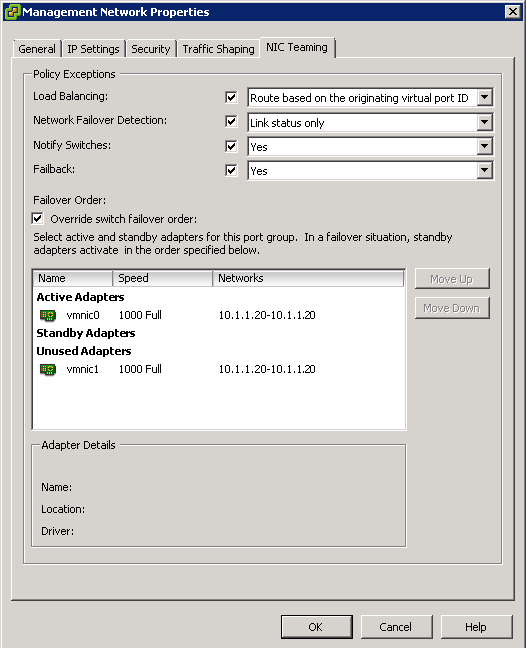

If you use a single vSphere standard switch to connect VMkernel to multiple network adapters, change the port group policy so that it meets the iSCSI network requirements.

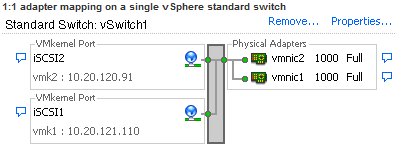

By default, all network adapters appear as active for each virtual adapter on the vSphere standard switch. You must override this port group policy so that each VMkernel interface maps to only one corresponding active NIC. For example:

- vmk1 maps to vmnic1

- vmk2 maps to vmnic2
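The 1:1 rule can be written as a small check. The Python sketch below models each VMkernel port's failover order with a hypothetical `VmkTeaming` class, which is not an ESXi or vSphere API. It then reports every way a configuration breaks the mapping: more or fewer than one active NIC, leftover standby NICs, or a NIC that is already active for another VMkernel port.

```python
from dataclasses import dataclass, field


@dataclass
class VmkTeaming:
    """Failover order of one iSCSI VMkernel port (hypothetical model, not an ESXi API)."""
    vmk: str
    active: list[str]
    standby: list[str] = field(default_factory=list)


def binding_problems(ports: list[VmkTeaming]) -> list[str]:
    """Return the reasons why the ports do not form a 1:1 vmk-to-vmnic mapping."""
    problems: list[str] = []
    owner: dict[str, str] = {}  # vmnic -> first VMkernel port that uses it as active
    for port in ports:
        if len(port.active) != 1:
            problems.append(f"{port.vmk}: expected 1 active NIC, found {len(port.active)}")
        if port.standby:
            problems.append(f"{port.vmk}: move standby NICs to Unused: {', '.join(port.standby)}")
        for nic in port.active:
            if nic in owner:
                problems.append(f"{port.vmk}: {nic} is already active for {owner[nic]}")
            else:
                owner[nic] = port.vmk
    return problems


if __name__ == "__main__":
    # Default policy: every NIC is active for every VMkernel port.
    default = [VmkTeaming("vmk1", ["vmnic1", "vmnic2"]),
               VmkTeaming("vmk2", ["vmnic1", "vmnic2"])]
    # Overridden policy: vmk1 uses vmnic1 only, vmk2 uses vmnic2 only.
    fixed = [VmkTeaming("vmk1", ["vmnic1"]), VmkTeaming("vmk2", ["vmnic2"])]
    for line in binding_problems(default):
        print(line)
    print(binding_problems(fixed))
```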

Procedure

- Create a vSphere standard switch that connects VMkernel with the physical network adapters designated for iSCSI traffic. The number of VMkernel adapters must match the number of physical adapters on the vSphere standard switch.

- Log in to the vSphere Client and select the host from the inventory panel.

- Click the Configuration tab and click Networking.

- Select the vSphere standard switch that you use for iSCSI and click Properties.

- On the Ports tab, select an iSCSI VMkernel adapter and click Edit.

- Click the NIC Teaming tab and select Override switch failover order.

- Designate only one physical adapter as active and move all remaining adapters to the Unused Adapters category. If you do not, a warning triangle appears next to your iSCSI VMkernel port.

- Repeat the previous three steps (selecting the VMkernel adapter, overriding the switch failover order, and designating a single active adapter) for each iSCSI VMkernel interface on the vSphere standard switch.
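The same failover override can also be applied from the ESXi shell with `esxcli network vswitch standard portgroup policy failover set`. The Python sketch below only builds the command strings and does not run them. It assumes that uplinks not named as active or standby end up unused for the port group, so check the result with the matching `policy failover get` command on your own ESXi build. The port group names `iSCSI-1` and `iSCSI-2` are hypothetical.

```python
import shlex


def failover_override_commands(active_uplink_by_portgroup: dict[str, str]) -> list[str]:
    """Build esxcli commands that give each iSCSI port group exactly one active uplink.

    Assumption: uplinks not listed as active or standby end up unused for the
    port group. Verify with 'policy failover get' on your ESXi build.
    """
    commands = []
    for portgroup, vmnic in active_uplink_by_portgroup.items():
        pg = shlex.quote(portgroup)  # quote names that contain spaces
        commands.append(
            "esxcli network vswitch standard portgroup policy failover set "
            f"--portgroup-name={pg} --active-uplinks={shlex.quote(vmnic)}"
        )
        # Show the resulting failover order so it can be checked by eye.
        commands.append(
            f"esxcli network vswitch standard portgroup policy failover get --portgroup-name={pg}"
        )
    return commands


if __name__ == "__main__":
    # Hypothetical port group names; use the labels from your own vSwitch.
    for cmd in failover_override_commands({"iSCSI-1": "vmnic1", "iSCSI-2": "vmnic2"}):
        print(cmd)
```

Building strings instead of calling `subprocess` keeps the sketch safe to run anywhere and lets you review the commands before pasting them into an ESXi shell.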

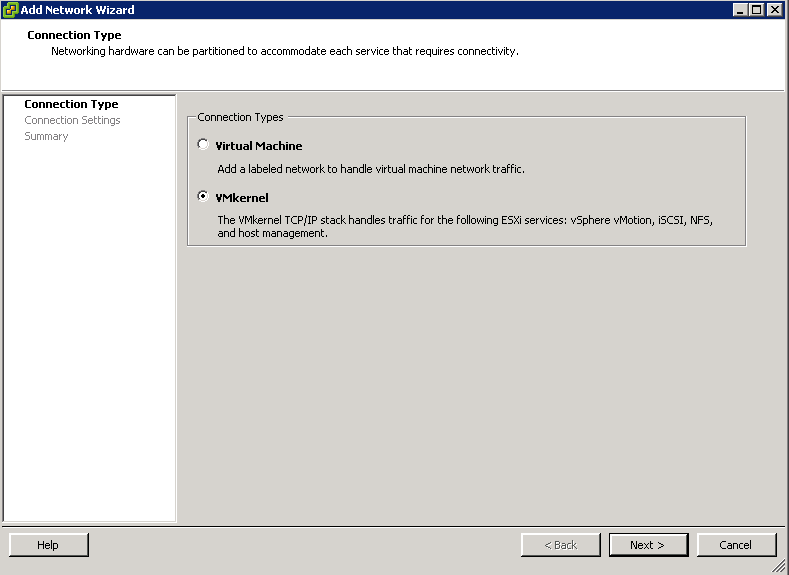

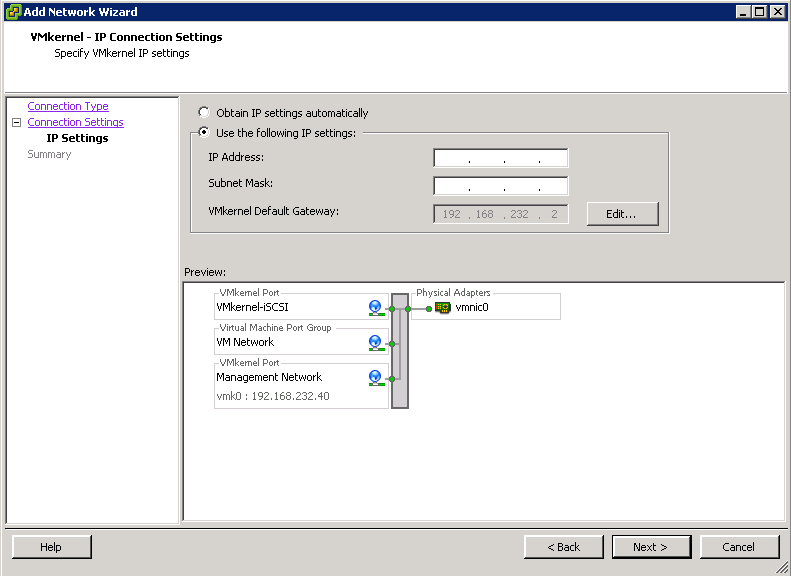

- Next go to the switch properties and click Add and choose VMkernel

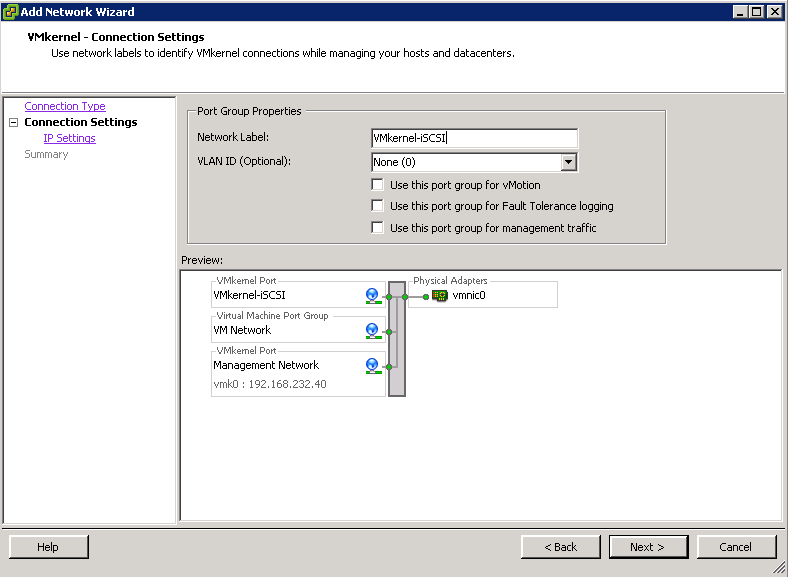

- Type a name. Eg VMkernel-iSCSI

- Enter an IP Address for this adapter

- Finish and check Summary Page
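The Add > VMkernel steps above roughly correspond to three esxcli commands: create the port group, add the VMkernel interface to it, and give that interface a static IPv4 address. The Python sketch below generates those commands from a CIDR string, using the standard `ipaddress` module to split out the address and subnet mask. The switch name `vSwitch1`, interface `vmk1`, and address `10.10.10.11/24` are hypothetical examples, and the sketch handles IPv4 only.

```python
import ipaddress
import shlex


def vmkernel_port_commands(vswitch: str, portgroup: str, vmk: str, cidr: str) -> list[str]:
    """esxcli sketch of Add > VMkernel with a static IPv4 address."""
    iface = ipaddress.ip_interface(cidr)
    if not isinstance(iface, ipaddress.IPv4Interface):
        raise ValueError("this sketch only handles IPv4")
    pg, vs, v = shlex.quote(portgroup), shlex.quote(vswitch), shlex.quote(vmk)
    return [
        # 1. Port group that will carry the VMkernel port.
        f"esxcli network vswitch standard portgroup add --portgroup-name={pg} --vswitch-name={vs}",
        # 2. VMkernel interface attached to that port group.
        f"esxcli network ip interface add --interface-name={v} --portgroup-name={pg}",
        # 3. Static IP address and subnet mask, taken from the CIDR string.
        f"esxcli network ip interface ipv4 set --interface-name={v} "
        f"--ipv4={iface.ip} --netmask={iface.netmask} --type=static",
    ]


if __name__ == "__main__":
    for cmd in vmkernel_port_commands("vSwitch1", "VMkernel-iSCSI", "vmk1", "10.10.10.11/24"):
        print(cmd)
```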

Set Up the Software iSCSI Adapter

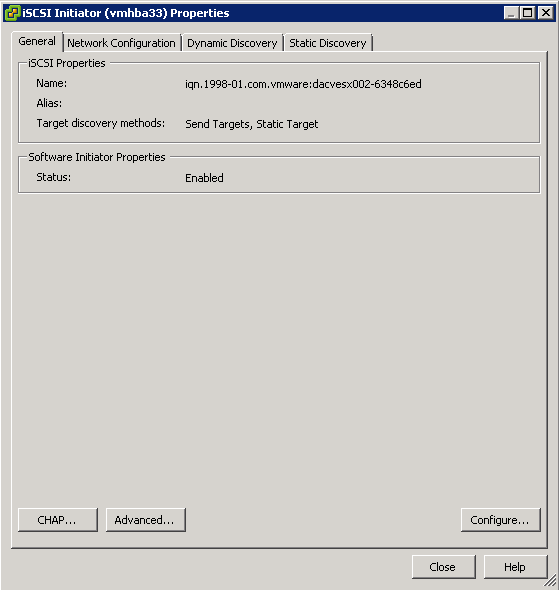

- In the host view, click the Configuration tab and then Storage Adapters.

- Click Add to add a software iSCSI adapter.

- Right-click the new software iSCSI adapter and select Properties.

- Enable the adapter if it is not already enabled.

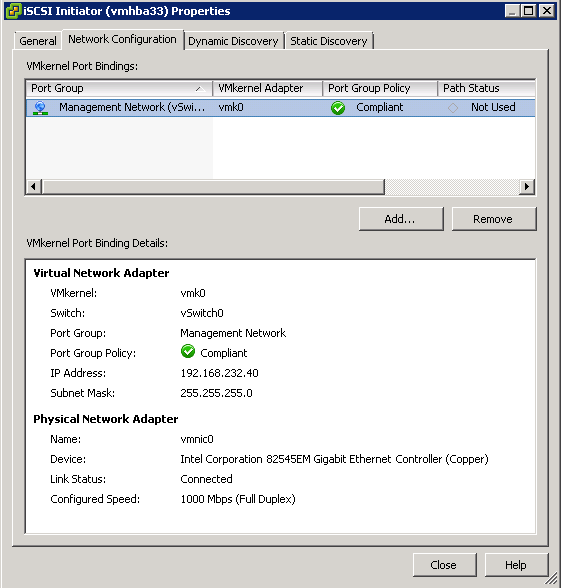

- Open the Network Configuration tab.

- Click Add and select the VMkernel port group(s) associated with the iSCSI network. This binds each VMkernel port to the software iSCSI adapter.
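For reference, enabling the adapter and binding the VMkernel ports map to `esxcli iscsi software set --enabled=true` and one `esxcli iscsi networkportal add` per VMkernel port. The Python sketch below builds that command list and rejects duplicate bindings. The adapter name `vmhba33` is only a hypothetical example; the real name on your host is shown by `esxcli iscsi adapter list`.

```python
import shlex


def port_binding_commands(adapter: str, vmks: list[str]) -> list[str]:
    """esxcli sketch: enable software iSCSI, then bind each VMkernel port to it."""
    if len(set(vmks)) != len(vmks):
        raise ValueError("each VMkernel port should be bound only once")
    a = shlex.quote(adapter)
    commands = ["esxcli iscsi software set --enabled=true"]
    for vmk in vmks:
        # One network portal (port binding) per VMkernel port.
        commands.append(f"esxcli iscsi networkportal add --adapter={a} --nic={shlex.quote(vmk)}")
    # List the bound ports afterwards so the result can be checked.
    commands.append(f"esxcli iscsi networkportal list --adapter={a}")
    return commands


if __name__ == "__main__":
    # Hypothetical adapter name; look yours up with 'esxcli iscsi adapter list'.
    for cmd in port_binding_commands("vmhba33", ["vmk1", "vmk2"]):
        print(cmd)
```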

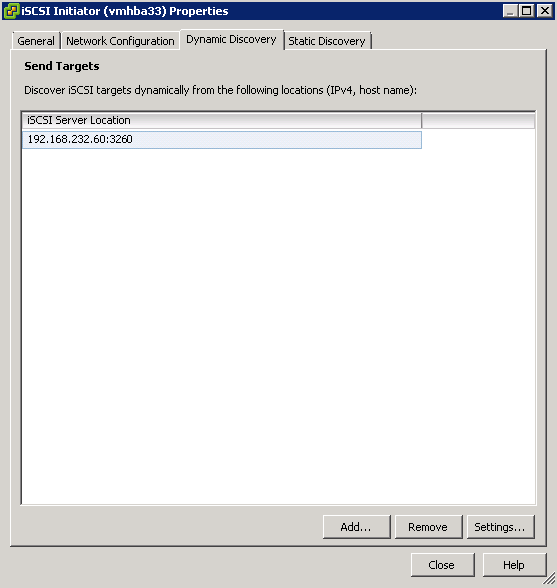

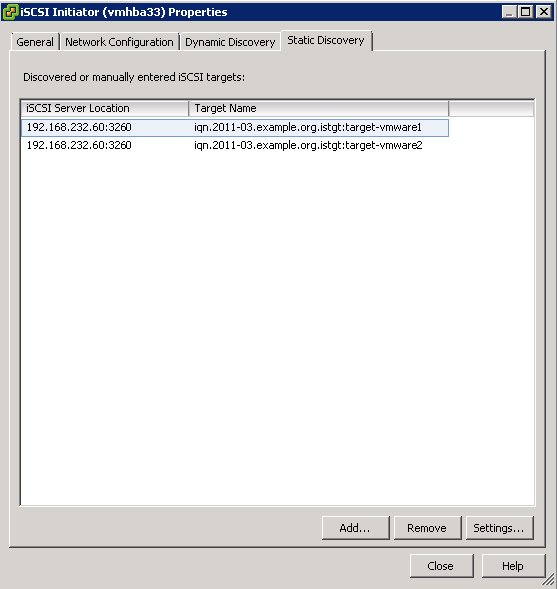

- Click the Dynamic Discovery tab.

- Add the IP addresses of the iSCSI targets.

- Click the Static Discovery tab and check the targets listed there.

- Click Close.

- Rescan the adapter so that the host detects the attached LUNs.
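Dynamic discovery and the rescan can also be done with `esxcli iscsi adapter discovery sendtarget add` and `esxcli storage core adapter rescan`. The Python sketch below generates those commands. It validates each target address with `ipaddress.IPv4Address` and appends the default iSCSI TCP port, 3260. The adapter name `vmhba33` and the target address are hypothetical, and IPv6 targets are not handled.

```python
import ipaddress
import shlex

ISCSI_PORT = 3260  # default iSCSI TCP port


def discovery_commands(adapter: str, target_ips: list[str], port: int = ISCSI_PORT) -> list[str]:
    """esxcli sketch: add Send Targets (dynamic discovery) addresses, then rescan."""
    a = shlex.quote(adapter)
    commands = []
    for raw in target_ips:
        ip = ipaddress.IPv4Address(raw)  # raises a ValueError subclass for malformed input
        commands.append(
            f"esxcli iscsi adapter discovery sendtarget add --adapter={a} --address={ip}:{port}"
        )
    # Rescan so the host picks up LUNs behind the newly discovered targets.
    commands.append(f"esxcli storage core adapter rescan --adapter={a}")
    return commands


if __name__ == "__main__":
    for cmd in discovery_commands("vmhba33", ["10.10.10.100"]):
        print(cmd)
```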

What if you have multiple adapters?

- If your host has more than one physical network adapter for software and dependent hardware iSCSI, use the adapters for multipathing.

- You can connect the software iSCSI adapter with any physical NICs available on your host. The dependent iSCSI adapters must be connected only with their own physical NICs.

- Physical NICs must be on the same subnet as the iSCSI storage system they connect to.
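The same-subnet requirement is easy to check before you start discovery. This Python sketch uses the standard `ipaddress` module to list the VMkernel ports whose subnet does not contain the storage system's address. The `vmk1`/`vmk2` names and all addresses are hypothetical examples.

```python
import ipaddress


def vmks_off_target_subnet(vmk_cidrs: dict[str, str], target_ip: str) -> list[str]:
    """Return the VMkernel ports whose subnet does not contain the target's IP."""
    target = ipaddress.ip_address(target_ip)
    return [
        vmk
        for vmk, cidr in vmk_cidrs.items()
        # ip_interface keeps the prefix length, so .network is the port's subnet.
        if target not in ipaddress.ip_interface(cidr).network
    ]


if __name__ == "__main__":
    ports = {"vmk1": "10.10.10.11/24", "vmk2": "10.10.20.12/24"}
    print(vmks_off_target_subnet(ports, "10.10.10.100"))
```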

The iSCSI adapter and physical NIC connect through a virtual VMkernel adapter, also called virtual network adapter or VMkernel port. You create a VMkernel adapter (vmk) on a vSphere switch (vSwitch) using 1:1 mapping between each virtual and physical network adapter.

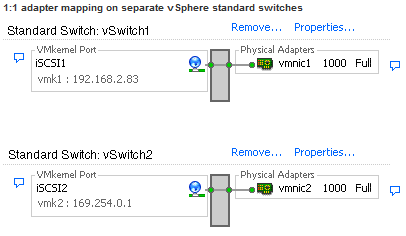

One way to achieve the 1:1 mapping when you have multiple NICs is to designate a separate vSphere switch for each virtual-to-physical adapter pair. The examples here use vSphere standard switches, but you can use distributed switches as well.

If you use separate vSphere switches, you must connect them to different IP subnets. Otherwise, VMkernel adapters might experience connectivity problems and the host will fail to discover iSCSI LUNs.
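When each VMkernel adapter sits on its own vSwitch, a quick way to catch this mistake is to look for overlapping subnets. The Python sketch below compares every pair of switches with `ipaddress` network `overlaps`. The switch names and addresses are hypothetical examples.

```python
import ipaddress
from itertools import combinations


def overlapping_switch_subnets(vmk_cidr_by_switch: dict[str, str]) -> list[tuple[str, str]]:
    """Return pairs of separate vSwitches whose iSCSI VMkernel subnets overlap."""
    nets = {sw: ipaddress.ip_interface(cidr).network for sw, cidr in vmk_cidr_by_switch.items()}
    # Compare every pair of switches once, in insertion order.
    return [(a, b) for a, b in combinations(nets, 2) if nets[a].overlaps(nets[b])]


if __name__ == "__main__":
    layout = {"vSwitch1": "10.10.10.11/24", "vSwitch2": "10.10.10.12/24"}
    print(overlapping_switch_subnets(layout))
```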

An alternative is to add all NICs and VMkernel adapters to a single vSphere standard switch. In this case, you must override the default network setup and make sure that each VMkernel adapter maps to only one corresponding active physical adapter.

General Information on iSCSI Adapters

http://www.electricmonk.org.uk/2012/04/18/using-esxi-with-iscsi-sans/