On the outside, vSphere HA in vSphere 5 looks very similar to vSphere HA in vSphere 4. Under the hood, though, HA now uses a new VMware-developed tool called FDM (Fault Domain Manager), which replaces AAM (Automated Availability Manager).

Limitations of AAM

- Strong dependence on name resolution

- Scalability Limits

Advantages of FDM over AAM

- FDM uses a Master/Slave architecture that does not rely on Primary/secondary host designations

- As of 5.0, HA is no longer dependent on DNS, as it works with IP addresses only

- FDM uses both the management network and the storage devices for communication

- FDM introduces support for IPv6

- FDM addresses the issues of both network partition and network isolation

- Faster install of HA once configured

FDM Agents

FDM uses the concept of an agent that runs on each ESXi host. This agent is separate from the vCenter management agent (VPXA) that vCenter uses to communicate with the ESXi hosts.

The FDM agent is installed on each ESXi host in /opt/vmware/fdm and stores its configuration files in /etc/opt/vmware/fdm.

How FDM works

- When vSphere HA is enabled, the vSphere HA agents participate in an election to pick a vSphere HA Master. The vSphere HA Master is responsible for a number of key tasks within a vSphere HA enabled cluster

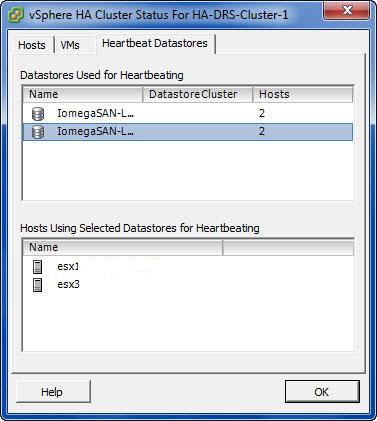

- You must now have at least two shared datastores between all hosts in the HA cluster

- The Master monitors Slave hosts and will restart VMs in the event of a slave host failure

- The vSphere HA Master monitors the power state of all protected VMs. If a protected VM fails, the Master restarts it

- The Master manages the tasks of adding and removing hosts from the cluster

- The Master manages the list of protected VMs.

- The Master caches the cluster configuration and notifies the slaves of any changes to the cluster configuration

- The Master sends heartbeat messages to the Slave Hosts so they know that the Master is alive

- The Master reports state information to vCenter Server.

- If the existing Master fails, a new HA Master is automatically elected. If a Slave was promoted while the original Master was down, the original Master does not become the Master again when it comes back up
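The "no failback" behaviour in the last point can be illustrated with a toy model. This is only a sketch: the `Cluster` class and host names are hypothetical, and the election rule (highest host name wins) is a placeholder assumption, since the real FDM election criteria are not covered here.

```python
class Cluster:
    """Toy model of Master/Slave roles; not the real FDM election logic."""

    def __init__(self, hosts):
        self.alive = set(hosts)
        self.master = None
        self._elect()

    def _elect(self):
        # Assumption: the highest host name wins. This is a stand-in rule only.
        self.master = max(self.alive) if self.alive else None

    def host_down(self, host):
        self.alive.discard(host)
        if host == self.master:
            self._elect()  # an election only happens when the Master is lost

    def host_up(self, host):
        self.alive.add(host)
        if self.master is None:
            self._elect()
        # Otherwise the returning host simply joins as a Slave.


cluster = Cluster(["esx01", "esx02", "esx03"])
original = cluster.master        # the first elected Master
cluster.host_down(original)      # Master fails, a Slave is promoted
promoted = cluster.master
cluster.host_up(original)        # original Master returns
print(cluster.master == promoted)  # True: the promoted host stays Master
```

The point of the sketch is that a returning host never triggers an election by itself; only the loss of the current Master does.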

Enhancements to the User Interface

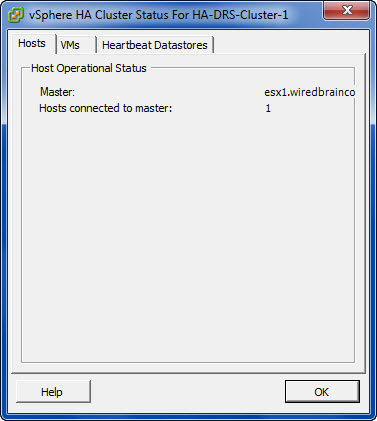

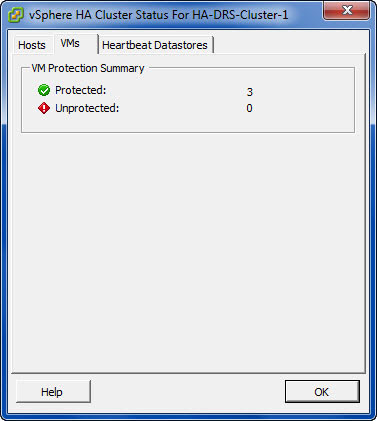

3 tabs in the Cluster Status

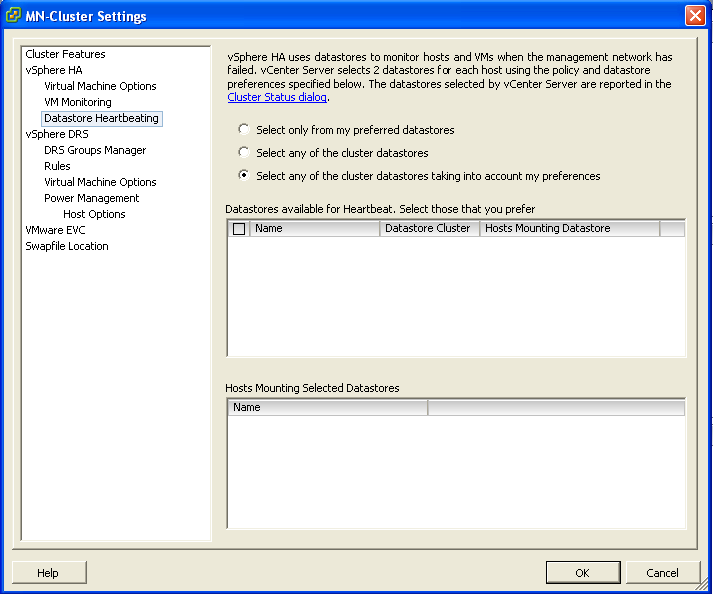

Cluster Settings showing the new Datastores for heartbeating

How does it work in the event of a problem?

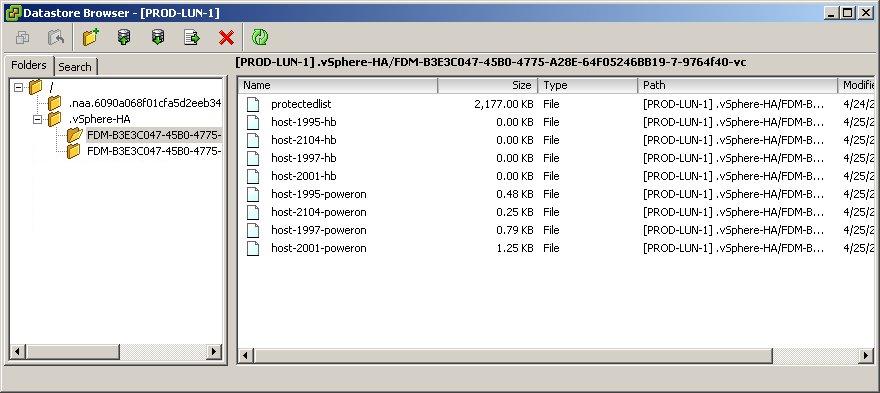

In earlier versions, virtual machine restarts were always initiated, even if only the management network of the host was isolated and the virtual machines were still running. This added an unnecessary level of stress to the host. The introduction of the datastore heartbeating mechanism mitigates this. Datastore heartbeating adds a new level of resiliency and allows HA to distinguish between a failed host and an isolated or partitioned host. You must now have at least two shared datastores between all hosts in the HA cluster.
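The distinction that datastore heartbeating makes possible can be sketched as a small decision function. This is a simplified illustration, not FDM's actual logic; the function name and the two boolean inputs are assumptions made for the example.

```python
def classify_host(network_heartbeat: bool, datastore_heartbeat: bool) -> str:
    """Classify a host from the Master's point of view (simplified)."""
    if network_heartbeat:
        return "alive"
    if datastore_heartbeat:
        # The host is still writing to the heartbeat datastore, so it is
        # running but unreachable over the management network.
        return "isolated or partitioned"
    # Neither heartbeat is seen: treat the host as failed.
    return "failed"


print(classify_host(False, True))
print(classify_host(False, False))
```

Without the second input, a host that only lost its management network would be indistinguishable from a failed host.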

Network Partitioning

Network partitioning describes a situation where one or more Slave Hosts cannot communicate with the Master even though they still have network connectivity. In this case HA is able to check the heartbeat datastores to detect whether the hosts are live and whether action needs to be taken.

Network Isolation

This situation involves one or more Slave Hosts losing all management connectivity. Isolated hosts can communicate with neither the vSphere HA Master nor other ESXi Hosts. In this case the Slave Host uses the Heartbeat Datastores to notify the Master that it is isolated. It does this through a special binary file, the host-X-poweron file. The vSphere HA Master can then take appropriate action to ensure the VMs are protected.

- If a Master cannot communicate with a Slave across the management network, or a Slave cannot communicate with the Master, the first thing the host does is try to contact the isolation address, which by default is the gateway on the Management Network

- If it can’t reach the Gateway, it considers itself isolated

- At this point, an ESXi host that has determined it is network isolated modifies a special bit in the binary host-x-poweron file, which is found on all datastores selected for datastore heartbeating

- The Master sees this bit, which denotes isolation, and is therefore notified that the Slave host has been isolated

- The Master then locks another file used by HA on the Heartbeat Datastore

- When the isolated node sees that this file has been locked by a master, it knows that the master is responsible for restarting the VMs

- The isolated host is then free to carry out the configured isolation response. This only happens once the isolated Slave has confirmed, via the datastore heartbeating infrastructure, that the Master has assumed responsibility for restarting the VMs
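The handshake in the steps above can be walked through with a small simulation. It is a sketch only: the `HeartbeatDatastore` class, its two flags and the function names are hypothetical stand-ins for the on-disk files, and nothing here reflects the real file formats.

```python
from dataclasses import dataclass


@dataclass
class HeartbeatDatastore:
    isolation_bit: bool = False  # stands in for the bit in the host-x-poweron file
    master_lock: bool = False    # stands in for the second file the Master locks


def slave_detects_isolation(can_reach_master, can_reach_isolation_address, ds):
    """The Slave declares itself isolated only if both checks fail."""
    if can_reach_master or can_reach_isolation_address:
        return False
    ds.isolation_bit = True  # signal isolation through the datastore
    return True


def master_checks_datastore(ds):
    """The Master sees the isolation bit and takes the lock."""
    if ds.isolation_bit:
        ds.master_lock = True
    return ds.master_lock


def slave_may_run_isolation_response(ds):
    """The Slave acts only after the Master has taken responsibility."""
    return ds.isolation_bit and ds.master_lock


ds = HeartbeatDatastore()
isolated = slave_detects_isolation(False, False, ds)
before_lock = slave_may_run_isolation_response(ds)
master_checks_datastore(ds)
after_lock = slave_may_run_isolation_response(ds)
print(isolated, before_lock, after_lock)  # True False True
```

The ordering is the important part: the isolation response is held back until the lock confirms that the Master will restart the VMs.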

Isolation Responses

I’m not going to go into those here but they are bulleted below

- Shutdown

- Power Off

- Leave Powered On

Should you change the default Host Isolation Response?

It is highly dependent on the virtual and physical networks in place.

If you have multiple uplinks, vSwitches and physical switches, the likelihood is that only one part of the network will go down at once. In this case use the Leave Powered On setting, as it's unlikely that a network isolation event would also leave the VMs on the host inaccessible.

Customising the Isolation response address

The isolation response address can be customised through the vSphere HA advanced options, using one of 3 settings

- Connect to vCenter

- Right click the cluster and select Edit Settings

- Click the vSphere HA Node

- Click Advanced

- Enter one of the 3 options below

- das.isolationaddress1 which tries the first gateway

- das.isolationaddress2 which tries a second gateway

- das.AllowNetwork which allows a different Port Group to try
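When several isolation addresses need to be entered, it can help to prepare and sanity-check the key/value pairs before typing them into the Advanced dialog. The helper below is a hypothetical convenience sketch, not part of any VMware tooling, and the addresses are placeholders from the documentation range.

```python
import ipaddress


def build_isolation_options(addresses):
    """Map addresses to das.isolationaddress1, das.isolationaddress2, ..."""
    options = {}
    for index, address in enumerate(addresses, start=1):
        ipaddress.ip_address(address)  # raises ValueError on a malformed address
        options[f"das.isolationaddress{index}"] = address
    return options


# Placeholder addresses; substitute the gateways of your own networks.
options = build_isolation_options(["192.0.2.1", "192.0.2.254"])
for key, value in options.items():
    print(key, "=", value)
```

Validating with `ipaddress` catches typos early and accepts both IPv4 and IPv6 literals.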
