What is a vApp?

You can use VMware vSphere as a platform for running applications, in addition to using it as a platform for running virtual machines. The applications can be packaged to run directly on top of VMware vSphere. The format in which the applications are packaged and managed is called a vSphere vApp.

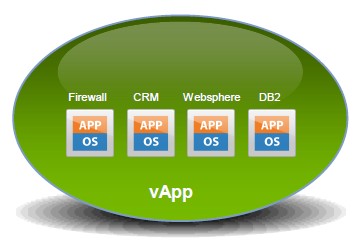

A vApp is a container, like a resource pool, and can contain one or more virtual machines. A vApp also shares some functionality with virtual machines: it can be powered on, powered off, and cloned.

In the vSphere Client, a vApp is represented in both the Hosts and Clusters view and the VMs and Templates view. Each view has a specific summary page with the current status of the service and relevant summary information, as well as operations on the service.

A vApp is a resource container for multiple virtual machines that work together as part of a multi-tier application.

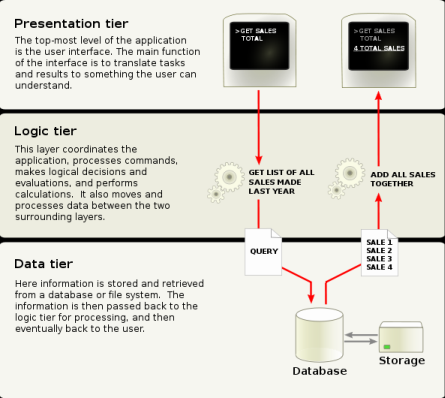

The three applications work together and largely depend on each other for the overall application to function properly. If one tier becomes unavailable, the application typically stops working, because it relies on every tier.

Virtualization can introduce some challenges with multi-tier applications. For example, if one tier is performing poorly due to resource constraints on a host, the whole application suffers as a result. Another challenge comes when powering on a host server, because one tier often relies on another tier being started first; otherwise the application fails.

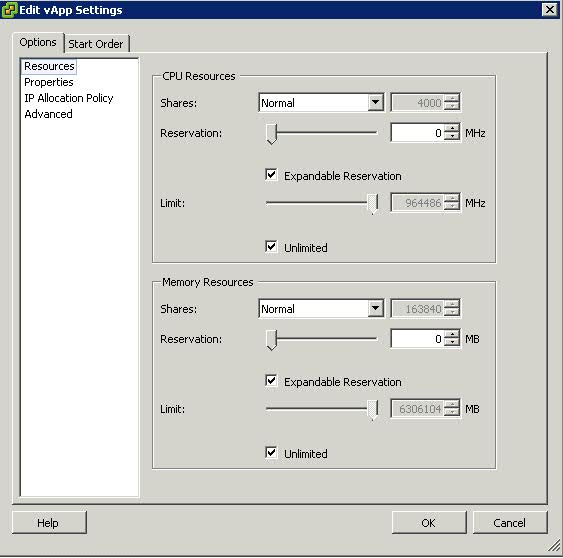

VMware introduced vApps to deal with these problems. A vApp provides methods for setting power-on options, IP address allocation, and resource allocation, and it provides application-level customization for all the virtual machines in the vApp. When you configure a vApp in vSphere, you specify properties for it, including CPU and memory resources, IP allocation, application information, and start order.
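To make the start-order idea concrete, here is a minimal Python sketch of how a three-tier application could be ordered. It is only a model, not the vSphere API: the `StartEntry` class, the `group` field, and both functions are hypothetical names, and the reverse order on shutdown is an assumption of this sketch.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class StartEntry:
    """One VM (or nested vApp) in a vApp's start order. Hypothetical model."""
    name: str
    group: int  # lower group numbers power on first


def startup_sequence(entries):
    """Group entries by start-order group, lowest group first."""
    groups = {}
    for entry in entries:
        groups.setdefault(entry.group, []).append(entry.name)
    return [sorted(groups[g]) for g in sorted(groups)]


def shutdown_sequence(entries):
    """Assumption of this sketch: shutdown walks the groups in reverse."""
    return list(reversed(startup_sequence(entries)))


# The database must be up before the app tier, and the app tier before the web tier.
three_tier = [
    StartEntry("web", group=3),
    StartEntry("database", group=1),
    StartEntry("app", group=2),
]
print(startup_sequence(three_tier))
print(shutdown_sequence(three_tier))
```

Entries that share a group are treated as a single step, so tiers that do not depend on each other can be placed in the same group.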

Distribution Format

The distribution format for a vApp is OVF (Open Virtualization Format).
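An OVF package describes its contents in an XML descriptor, where a group of virtual machines is expressed as a `VirtualSystemCollection` containing `VirtualSystem` elements. The sketch below reads such a descriptor with Python's standard library. The sample descriptor is a hand-written, heavily trimmed illustration (a real one also carries references, disk, network, and hardware sections), and the IDs in it are made up.

```python
import xml.etree.ElementTree as ET

# Namespace of the OVF 1.x envelope schema.
OVF_NS = "http://schemas.dmtf.org/ovf/envelope/1"

# Hypothetical, heavily trimmed descriptor for illustration only.
SAMPLE_OVF = (
    '<Envelope xmlns="' + OVF_NS + '" xmlns:ovf="' + OVF_NS + '">'
    '<VirtualSystemCollection ovf:id="three-tier-app">'
    '<VirtualSystem ovf:id="database"/>'
    '<VirtualSystem ovf:id="app"/>'
    '<VirtualSystem ovf:id="web"/>'
    '</VirtualSystemCollection>'
    '</Envelope>'
)


def qualified(name):
    """Return the namespace-qualified form ElementTree uses for OVF names."""
    return "{" + OVF_NS + "}" + name


def list_virtual_systems(ovf_xml):
    """Map each collection ID to the IDs of the virtual systems directly inside it."""
    root = ET.fromstring(ovf_xml)
    result = {}
    for collection in root.iter(qualified("VirtualSystemCollection")):
        members = collection.findall(qualified("VirtualSystem"))
        result[collection.get(qualified("id"))] = [
            vm.get(qualified("id")) for vm in members
        ]
    return result


print(list_virtual_systems(SAMPLE_OVF))
```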

Note

The vApp metadata resides in the vCenter Server’s database, so a vApp can be distributed across multiple ESXi hosts. This information can be lost if the vCenter Server database is cleared or if a standalone ESXi host that contains a vApp is removed from vCenter Server. You should back up vApps to an OVF package to avoid losing any metadata.

vApp metadata for virtual machines within vApps does not follow the snapshot semantics for virtual machine configuration. vApp properties that are deleted, modified, or defined after a snapshot is taken therefore remain in that state (deleted, modified, or defined) after the virtual machine reverts to that snapshot or to any prior snapshot.
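The snapshot behavior described above can be illustrated with a toy model. This is not how vCenter Server stores anything; `VmModel` and its fields are hypothetical, and the only point is that a revert restores the virtual machine configuration while leaving vApp properties as they currently are.

```python
import copy


class VmModel:
    """Toy model: snapshots capture VM configuration but not vApp properties."""

    def __init__(self, config, vapp_properties):
        self.config = dict(config)
        self.vapp_properties = dict(vapp_properties)
        self._snapshots = {}

    def take_snapshot(self, name):
        # Only the VM configuration is captured.
        self._snapshots[name] = copy.deepcopy(self.config)

    def revert_to(self, name):
        # vApp properties are deliberately left untouched.
        self.config = copy.deepcopy(self._snapshots[name])


vm = VmModel({"memory_mb": 2048}, {"db_host": "10.0.0.5"})
vm.take_snapshot("before-change")

vm.config["memory_mb"] = 4096                 # changed after the snapshot
vm.vapp_properties["db_host"] = "10.0.0.9"    # modified after the snapshot
vm.vapp_properties["app_mode"] = "test"       # defined after the snapshot

vm.revert_to("before-change")
print(vm.config)
print(vm.vapp_properties)
```

After the revert, the memory setting is back to its snapshot value, while both vApp property changes are still in place.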

Where can you create a vApp?

After you create a datacenter and add a clustered host enabled with DRS or a standalone host to your vCenter Server system, you can create a vApp.

You can create a vApp under the following conditions.

- A standalone host running ESX 3.0 or later is selected in the inventory.

- A cluster enabled with DRS is selected in the inventory.

You can create vApps on folders, standalone hosts, resource pools, clusters enabled with DRS, and within other vApps.
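The conditions above can be summarized as a small check. The sketch below is a plain Python model, not a call into vCenter Server; the `InventoryTarget` class, its field names, and the `kind` strings are hypothetical and exist only to restate the rules.

```python
from dataclasses import dataclass


@dataclass
class InventoryTarget:
    """Hypothetical description of the object selected in the inventory."""
    kind: str                    # "host", "cluster", "resource_pool", "folder", "vapp"
    drs_enabled: bool = False    # only meaningful for clusters
    esx_version: tuple = (0, 0)  # only meaningful for standalone hosts


def can_create_vapp(target):
    """Return True if the selected object satisfies the conditions listed above."""
    if target.kind == "cluster":
        return target.drs_enabled
    if target.kind == "host":
        return target.esx_version >= (3, 0)
    return target.kind in {"folder", "resource_pool", "vapp"}


print(can_create_vapp(InventoryTarget("cluster", drs_enabled=True)))
print(can_create_vapp(InventoryTarget("cluster", drs_enabled=False)))
print(can_create_vapp(InventoryTarget("host", esx_version=(3, 5))))
```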

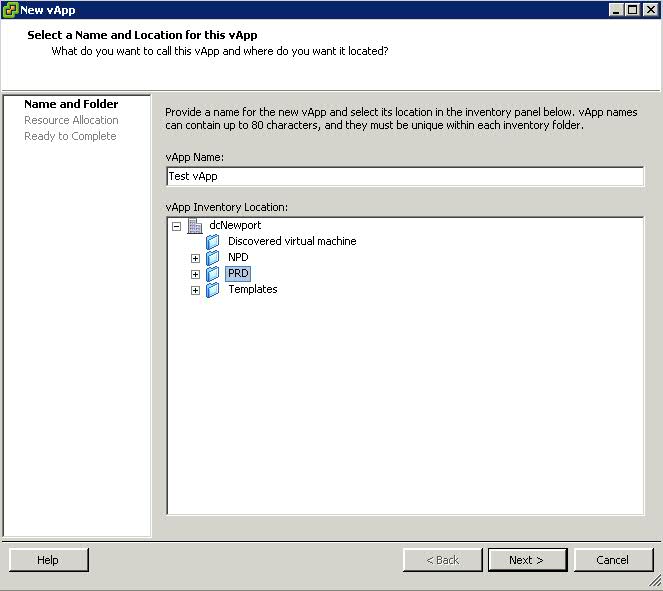

Creating a vApp

- Right-click a cluster or a host and select New vApp.

- Enter a name and select the inventory location.

- Click Next.

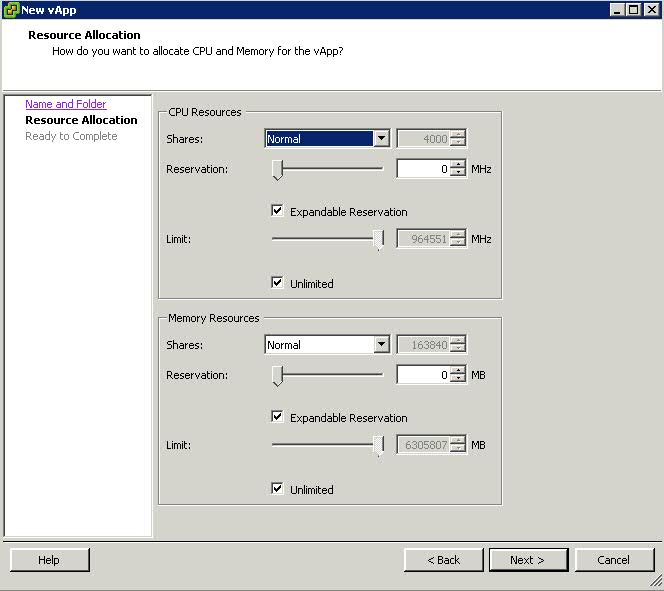

- Adjust the resources as needed, click Next, and then click Finish.

Once you have created a vApp, you can add virtual machines (VMs) to it by dragging them in the vSphere Client. You can also create resource pools inside a vApp and nest vApps inside other vApps. If you edit the settings of a VM, select the Options tab, and then select vApp Options, you can enable the vApp functionality for the VM and set individual vApp options for it. You can also export a vApp in Open Virtualization Format (OVF) and deploy new vApps from one that already exists.

- Go to the vApp in the Hosts and Clusters view and select Edit Settings. You can edit the CPU and memory resource allocation for the vApp.

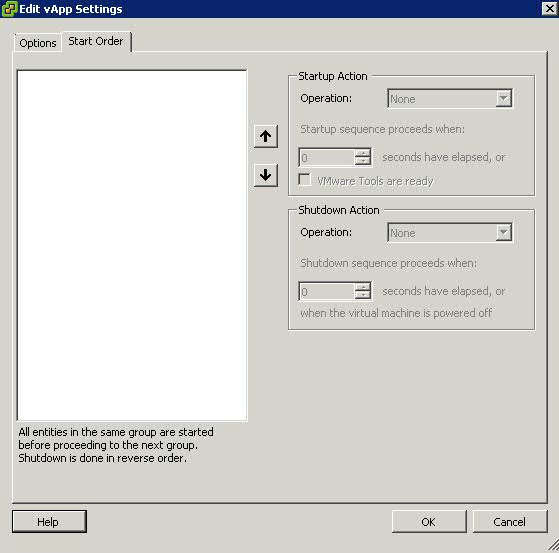

- Click Start Order. You can change the order in which virtual machines and nested vApps within a vApp start up and shut down. You can also specify delays and the actions performed at startup and shutdown.

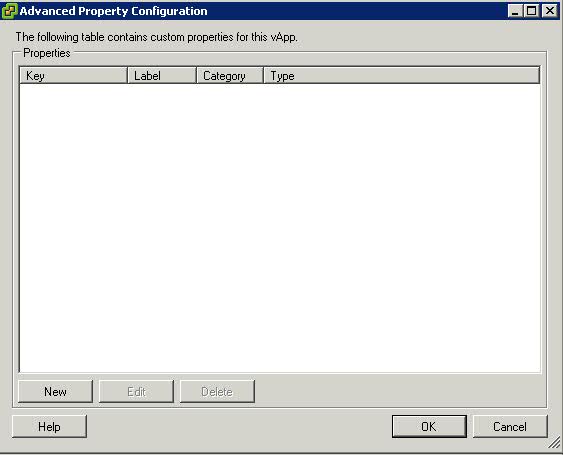

- Click Properties. You can edit any vApp property that is defined in Advanced Property Configuration.

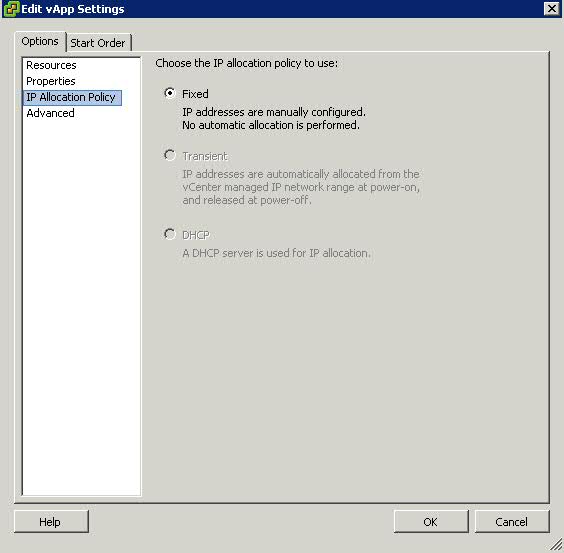

- Click IP Allocation Policy. You can edit how IP addresses are allocated for the vApp.

Fixed = IP addresses are manually configured. No automatic allocation is performed.

Transient = IP addresses are automatically allocated using IP pools from a specified range when the vApp is powered on. The IP addresses are released when the appliance is powered off.

DHCP = A DHCP server is used to allocate the IP addresses. The addresses assigned by the DHCP server are visible in the OVF environments of virtual machines started in the vApp.
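The Transient policy is the one with the most moving parts, so here is a minimal Python sketch of its lifecycle: an address is taken from a range at power-on and returned at power-off. The `TransientIpPool` class, its method names, and the address range are hypothetical; this is a model of the behavior, not the vSphere IP pool implementation.

```python
import ipaddress


class TransientIpPool:
    """Toy model of transient allocation from a fixed range of IPv4 addresses."""

    def __init__(self, first, last):
        start = int(ipaddress.IPv4Address(first))
        end = int(ipaddress.IPv4Address(last))
        self._free = [ipaddress.IPv4Address(n) for n in range(start, end + 1)]
        self._leases = {}

    def power_on(self, vm_name):
        """Allocate the lowest free address, or reuse the VM's existing lease."""
        if vm_name in self._leases:
            return self._leases[vm_name]
        if not self._free:
            raise RuntimeError("IP pool exhausted")
        address = self._free.pop(0)
        self._leases[vm_name] = address
        return address

    def power_off(self, vm_name):
        """Release the VM's address back to the pool, if it holds one."""
        address = self._leases.pop(vm_name, None)
        if address is not None:
            self._free.append(address)
            self._free.sort()


pool = TransientIpPool("192.168.10.10", "192.168.10.12")
print(pool.power_on("web"))   # first free address in the range
pool.power_off("web")         # the address becomes available again
```

Under the Fixed policy nothing like this happens because addresses are configured by hand, and under DHCP the allocation is delegated to the DHCP server.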

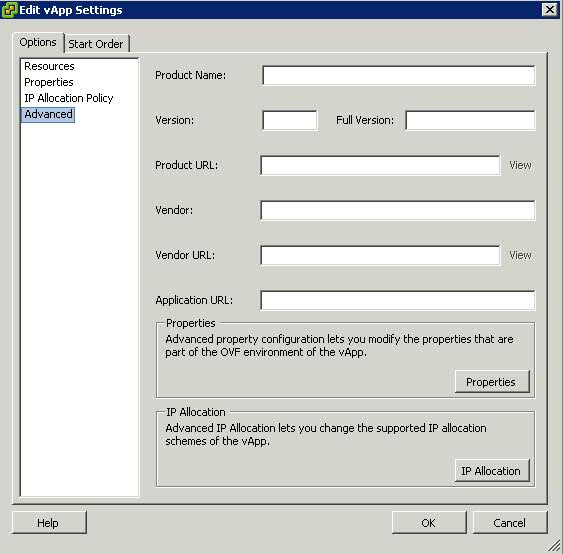

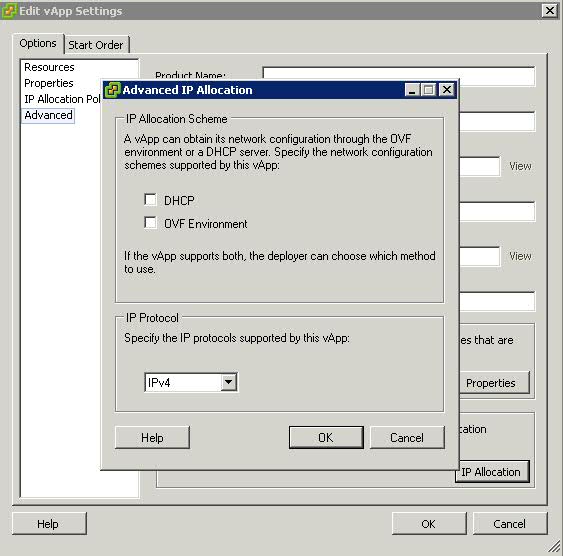

- Click Advanced. You can edit and configure advanced settings, such as product and vendor information, custom properties, and IP allocation.