Archive for January 2012

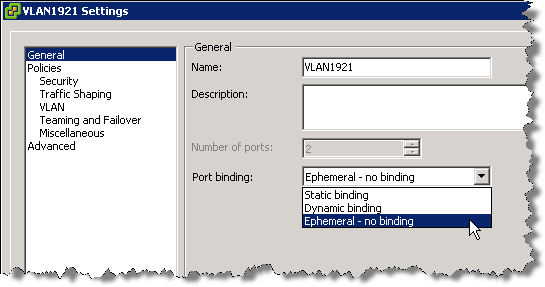

VDS Port Group – Port Bindings

There are three types of port binding:

- Static Binding

- Dynamic Binding

- Ephemeral Binding

Static Binding

When you connect a virtual machine to a port group configured with static binding, a port is immediately assigned and reserved for it, guaranteeing connectivity at all times. The port is disconnected only when the virtual machine is removed from the port group. You can connect a virtual machine to a static-binding port group only through vCenter Server.

Dynamic Binding

In a port group configured with dynamic binding, a port is assigned to a virtual machine only when the virtual machine is powered on and its NIC is in a connected state. The port is disconnected when the virtual machine is powered off or the virtual machine’s NIC is disconnected. Virtual machines connected to a port group configured with dynamic binding must be powered on and off through vCenter.

Dynamic binding can be used in environments where you have more virtual machines than available ports, but do not plan to have a greater number of virtual machines active than you have available ports. For example, if you have 300 virtual machines and 100 ports, but never have more than 90 virtual machines active at one time, dynamic binding would be appropriate for your port group.
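The sizing rule in this example comes down to comparing a port count against the number of ports each binding type actually holds. Here is a minimal Python sketch. It assumes a simplified model in which every VM uses exactly one port (one vNIC). The function names are hypothetical and are not part of any VMware API:

```python
def ports_needed(total_vms: int, peak_active_vms: int, binding: str) -> int:
    """Ports a port group must provide under each binding type (simplified).

    static:  every connected VM holds a port, powered on or not
    dynamic: only powered-on VMs with a connected NIC hold a port
    """
    if binding == "static":
        return total_vms
    if binding == "dynamic":
        return peak_active_vms
    raise ValueError(f"unsupported binding: {binding}")


def fits(total_vms: int, peak_active_vms: int, ports: int, binding: str) -> bool:
    """True if the port group never runs out of ports under this model."""
    return ports_needed(total_vms, peak_active_vms, binding) <= ports


# The example from the text: 300 VMs, 100 ports, never more than 90 active.
print(fits(300, 90, 100, "static"))   # False: 300 ports would be needed
print(fits(300, 90, 100, "dynamic"))  # True: at most 90 ports in use
```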

Note: Dynamic binding is deprecated in ESXi 5.0.

Ephemeral Binding

In a port group configured with ephemeral binding, a port is created and assigned to a virtual machine by the host when the virtual machine is powered on and its NIC is in a connected state. The port is deleted when the virtual machine is powered off or the virtual machine’s NIC is disconnected.
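A small model helps compare the three port lifecycles side by side. This is a hypothetical Python sketch, not a VMware API. It tracks only which VMs currently hold a port and treats a NIC disconnect the same as a power-off. For illustration, it also assumes that ephemeral ports are created on demand without a fixed pool:

```python
from enum import Enum


class Binding(Enum):
    STATIC = "static"
    DYNAMIC = "dynamic"
    EPHEMERAL = "ephemeral"


class PortGroup:
    """Toy model of when a distributed port group gives a VM a port."""

    def __init__(self, binding: Binding, num_ports: int = 0):
        self.binding = binding
        self.num_ports = num_ports  # not used for ephemeral in this model
        self.holding = set()        # VMs that currently hold a port

    def _take_port(self, vm: str) -> None:
        if vm in self.holding:
            return
        full = len(self.holding) >= self.num_ports
        if self.binding is not Binding.EPHEMERAL and full:
            raise RuntimeError("port group has no free ports")
        self.holding.add(vm)

    def connect(self, vm: str) -> None:
        # Static: a port is assigned and reserved as soon as the VM is connected.
        if self.binding is Binding.STATIC:
            self._take_port(vm)

    def power_on(self, vm: str) -> None:
        # Dynamic: a free port is assigned; ephemeral: the host creates one.
        # Assumes the VM is already connected and its NIC is connected.
        if self.binding is not Binding.STATIC:
            self._take_port(vm)

    def power_off(self, vm: str) -> None:
        # Dynamic: the port is released; ephemeral: the port is deleted.
        # Static ports stay reserved while the VM is powered off.
        if self.binding is not Binding.STATIC:
            self.holding.discard(vm)

    def remove(self, vm: str) -> None:
        # Removing the VM from the port group frees its port for every type.
        self.holding.discard(vm)


static = PortGroup(Binding.STATIC, num_ports=1)
static.connect("vm1")
static.power_off("vm1")
print("vm1" in static.holding)  # True: the port stays reserved

dynamic = PortGroup(Binding.DYNAMIC, num_ports=1)
dynamic.connect("vm1")
print("vm1" in dynamic.holding)  # False: no port until power-on
dynamic.power_on("vm1")
dynamic.power_off("vm1")
print("vm1" in dynamic.holding)  # False: released at power-off
```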

You can assign a virtual machine to a distributed port group with ephemeral port binding on ESX/ESXi and vCenter, giving you the flexibility to manage virtual machine connections through the host when vCenter is down. Although only ephemeral binding allows you to modify virtual machine network connections when vCenter is down, network traffic is unaffected by vCenter failure regardless of port binding type.

Note: Ephemeral port groups should be used only for recovery purposes, when you want to provision ports directly on a host and bypass vCenter Server, and not in any other case. The main reason is security:

With ephemeral port binding, your network is less secure. Anyone who gains access to a host can create a rogue virtual machine and place it on the network, or move VMs between networks. The security hardening guide even recommends lowering the number of ports in each distributed port group so that none are left unused.

AutoExpand (New Feature)

Note: vSphere 5.0 has introduced a new advanced option for static port binding called Auto Expand. This port group property allows a port group to expand automatically by a small predefined margin whenever the port group is about to run out of ports. In vSphere 5.1, the Auto Expand feature is enabled by default.

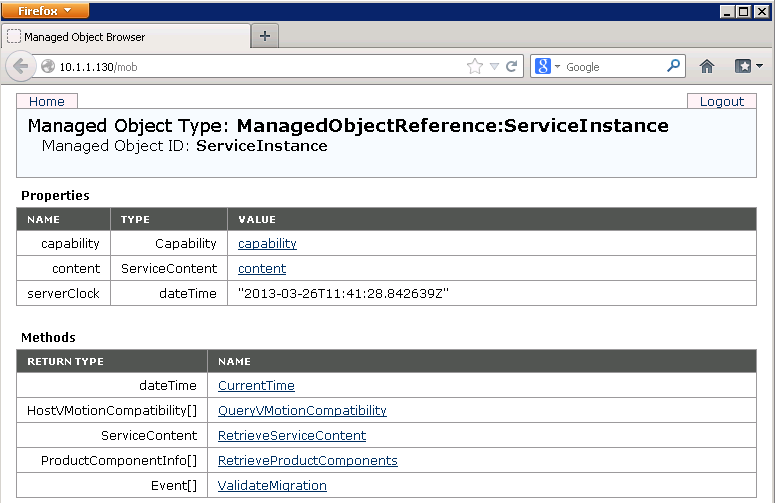
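A toy model makes the Auto Expand behaviour described in the note easier to picture. This Python sketch is a hypothetical illustration, not a VMware API. The growth margin of 10 ports is an assumption, because the note only says "a small predefined margin". The model also simplifies the trigger: it expands only when every port is in use, not when the group is merely close to full:

```python
class StaticPortGroup:
    """Toy model of a static-binding port group with Auto Expand."""

    MARGIN = 10  # hypothetical growth step, not a documented value

    def __init__(self, num_ports: int, auto_expand: bool):
        self.num_ports = num_ports
        self.auto_expand = auto_expand
        self.used = 0

    def connect_vm(self) -> None:
        if self.used == self.num_ports:
            if not self.auto_expand:
                raise RuntimeError("no free ports and Auto Expand is disabled")
            self.num_ports += self.MARGIN  # grow instead of refusing the VM
        self.used += 1


pg = StaticPortGroup(num_ports=8, auto_expand=True)
for _ in range(9):
    pg.connect_vm()
print(pg.num_ports, pg.used)  # 18 9
```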

In vSphere 5.0, Auto Expand is disabled by default. To enable it, use the vSphere 5.0 SDK through the managed object browser (MOB):

- In a browser, enter the address http://vc-ip-address/mob/.

- When prompted, enter your vCenter Server username and password.

- Click the Content link.

- In the left pane, search for the row with the word rootFolder.

- Open the link in the right pane of the row. The link should be similar to group-d1 (Datacenters).

- In the left pane, search for the row with the word childEntity. In the right pane, you see a list of datacenter links.

- Click the datacenter link in which the vDS is defined.

- In the left pane, search for the row with the word networkFolder and open the link in the right pane. The link should be similar to group-n123 (network).

- In the left pane, search for the row with the word childEntity. You see a list of vDS and distributed port group links in the right pane.

- Click the distributed port group for which you want to change this property.

- In the left pane, search for the row with the word config and click the link in the right pane.

- In the left pane, search for the row with the word autoExpand. It is usually the first row.

- Note the corresponding value displayed in the right pane. The value should be false by default.

- In the left pane, search for the row with the word configVersion. The value should be 1 if it has not been modified.

- Note the corresponding value displayed in the right pane as it is needed later.

- Note: On my system, autoExpand was already true and configVersion was 3.

- Go back to the distributed port group page.

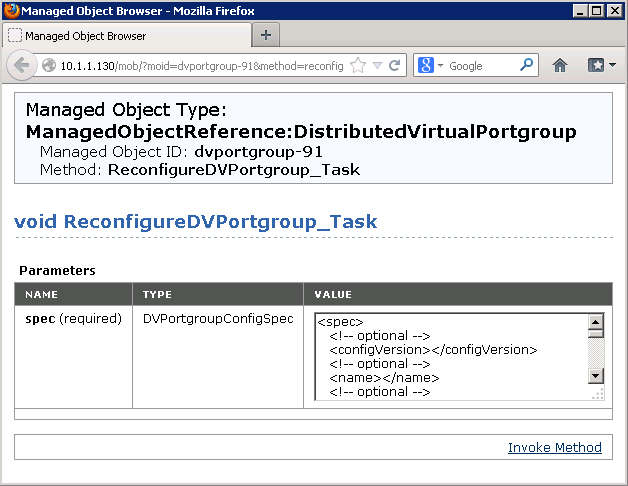

- Click the ReconfigureDVPortgroup_Task link at the bottom of the page.

- A new window appears.

- In the Value field, find the following lines and adjust them to the values you recorded earlier. Near the top of the spec, set configVersion:

<spec>

<configVersion>3</configVersion>

- Then scroll to the end of the spec and set autoExpand to true:

<autoExpand>true</autoExpand>

</spec>

- Here configVersion must be the value you recorded from the port group's config page (3 in my case).

- Click the Invoke Method link.

- Close the window.

- Repeat the steps from clicking the distributed port group through reading the autoExpand row to verify that autoExpand is now true.
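The spec typed into the Value field is plain XML, so it can be generated instead of edited by hand. This Python sketch uses only the standard library, and the function name is hypothetical. It assumes that a minimal spec holding only these two elements is accepted. Reconfigure calls normally leave unset properties unchanged, but verify this against your vCenter version. If the MOB pre-fills a larger template, edit only these two lines as described in the steps:

```python
import xml.etree.ElementTree as ET


def build_autoexpand_spec(config_version: str, auto_expand: bool = True) -> str:
    """Build a minimal <spec> for ReconfigureDVPortgroup_Task in the MOB.

    config_version must match the configVersion recorded from the port
    group's config page, otherwise the reconfigure is expected to fail.
    """
    spec = ET.Element("spec")
    ET.SubElement(spec, "configVersion").text = config_version
    ET.SubElement(spec, "autoExpand").text = "true" if auto_expand else "false"
    return ET.tostring(spec, encoding="unicode")


print(build_autoexpand_spec("3"))
# <spec><configVersion>3</configVersion><autoExpand>true</autoExpand></spec>
```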

Useful VMware Article

Useful blog post on why to use static port binding on vDS switches

http://blogs.vmware.com/vsphere/2012/05/why-use-static-port-binding-on-vds-.html

Runtime name format explained for an FC Storage Device

Runtime Name

The name of the first path to the device. The runtime name is created by the host. The name is not a reliable identifier for the device, and is not persistent.

The runtime name has the following format:

vmhba#:C#:T#:L#

- vmhba# is the name of the storage adapter. The name refers to the physical adapter on the host, not to the SCSI controller used by the virtual machines.

- C# is the storage channel number.

- T# is the target number. Target numbering is decided by the host and might change if there is a change in the mappings of targets visible to the host. Targets that are shared by different hosts might not have the same target number.

- L# is the LUN number that shows the position of the LUN within the target. The LUN number is provided by the storage system. If a target has only one LUN, the LUN number is always zero (0).

For example, vmhba1:C0:T3:L1 represents LUN1 on target 3 accessed through the storage adapter vmhba1 and channel 0.
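The runtime name always follows the vmhba#:C#:T#:L# layout, so it splits cleanly into its parts, which is useful when reading path listings. Here is a minimal Python sketch; the function and type names are hypothetical. The name is not persistent, so treat the parsed values as display information rather than as a stable device identifier:

```python
import re
from typing import NamedTuple

RUNTIME_NAME = re.compile(r"(vmhba\d+):C(\d+):T(\d+):L(\d+)")


class RuntimeName(NamedTuple):
    adapter: str  # physical storage adapter on the host, e.g. "vmhba1"
    channel: int  # storage channel number
    target: int   # host-assigned; may change and may differ between hosts
    lun: int      # position of the LUN within the target, set by the array


def parse_runtime_name(name: str) -> RuntimeName:
    m = RUNTIME_NAME.fullmatch(name)
    if m is None:
        raise ValueError(f"not a vmhba#:C#:T#:L# runtime name: {name!r}")
    adapter, channel, target, lun = m.groups()
    return RuntimeName(adapter, int(channel), int(target), int(lun))


print(parse_runtime_name("vmhba1:C0:T3:L1"))
# RuntimeName(adapter='vmhba1', channel=0, target=3, lun=1)
```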

RDMs – Physical and Virtual Compatibility Modes

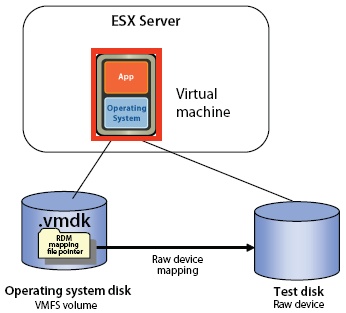

An RDM can be thought of as a symbolic link from a VMFS volume to a raw LUN. The mapping makes LUNs appear as files in a VMFS volume.

There are two types of RDMs: Virtual compatibility mode RDMs and physical compatibility mode RDMs.

Physical Mode RDM Uses

- Useful if you are using SAN-aware applications in the virtual machine

- Useful for running SCSI target-based software

- Physical mode for the RDM specifies minimal SCSI virtualization of the mapped device, allowing the greatest flexibility for SAN management software. In physical mode, the VMkernel passes all SCSI commands to the device, with one exception: the REPORT LUNs command is virtualized so that the VMkernel can isolate the LUN for the owning virtual machine. Otherwise, all physical characteristics of the underlying hardware are exposed. Physical mode is useful to run SAN management agents or other SCSI target-based software in the virtual machine.

Physical Mode RDM Limitations

- No VMware snapshots

- No VCB support, because VCB requires VMware snapshots

- No cloning VMs that use physical mode RDMs

- No converting VMs that use physical mode RDMs into templates

- No migrating VMs with physical mode RDMs if the migration involves copying the disk

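- vMotion is not ruled out by physical mode: VMs with physical mode RDMs can be migrated with vMotion, as long as the destination host can access the LUN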

Virtual Mode RDM Advantages

- Advanced file locking for data protection

- VMware snapshots

- Allows for cloning

- Redo logs for streamlining development processes

- More portable across storage hardware, presenting the same behavior as a virtual disk file

Predictive and Adaptive Schemes for placing VMFS Datastores

Predictive

The Predictive Scheme utilizes several LUNs with different storage characteristics

- Create several Datastores (VMFS or NFS) with different storage characteristics and label each datastore according to its characteristics

- Place each application on the Datastore whose RAID level suits its requirements, measuring those requirements in advance

- Run the applications and see whether VM performance is acceptable or monitor HBA queues as they approach the queue full threshold

- Use RDMs sparingly and as needed
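The predictive steps amount to matching requirements that were measured in advance to labelled datastores. This is a hypothetical Python sketch built on three assumptions. First, each datastore is labelled with its RAID level and a measured IOPS budget. Second, each application's RAID requirement and IOPS were measured beforehand. Third, IOPS stands in for whatever requirement you actually measure. Placing the largest applications first, each into the tightest fit, is an illustrative choice and not an algorithm prescribed by VMware. After placement, you would still run the applications and watch the HBA queues:

```python
from dataclasses import dataclass, field


@dataclass
class Datastore:
    label: str        # named after its characteristics, e.g. "db-raid10"
    raid: str         # RAID level backing the LUN, e.g. "RAID10"
    iops_budget: int  # measured capacity (hypothetical figure)
    apps: list = field(default_factory=list)  # (name, iops) already placed

    def free_iops(self) -> int:
        return self.iops_budget - sum(iops for _, iops in self.apps)


def place_predictively(apps, datastores):
    """apps: (name, required_raid, measured_iops) tuples, measured in advance."""
    placement = {}
    # Place the most demanding applications first.
    for name, raid, iops in sorted(apps, key=lambda a: a[2], reverse=True):
        candidates = [d for d in datastores
                      if d.raid == raid and d.free_iops() >= iops]
        if not candidates:
            raise RuntimeError(f"no {raid} datastore has room for {name}")
        best = min(candidates, key=lambda d: d.free_iops())  # tightest fit
        best.apps.append((name, iops))
        placement[name] = best.label
    return placement


stores = [Datastore("db-raid10", "RAID10", 5000),
          Datastore("file-raid5", "RAID5", 2000)]
apps = [("sql01", "RAID10", 3000), ("file01", "RAID5", 800),
        ("sql02", "RAID10", 1500)]
placement = place_predictively(apps, stores)
print(placement)
# {'sql01': 'db-raid10', 'sql02': 'db-raid10', 'file01': 'file-raid5'}
```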

Adaptive

The Adaptive Scheme utilizes a small number of large LUNs

- Create a standardised Datastore building block model (VMFS or NFS)

- Place virtual disks on the Datastore

- Run the applications and see whether disk performance is acceptable (on a VMFS Datastore, monitor the HBA queues as they approach the queue full threshold)

- If performance is acceptable, you can place additional virtual disks on the Datastore. If it isn’t, use Storage vMotion to move the disks to a new Datastore

- Use RDMs sparingly
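The adaptive loop fills a building block until performance degrades, then moves on to a fresh one. Here is a hypothetical Python model of it, resting on three assumptions. Each disk's load on the HBA queue is summarised as a number of outstanding I/Os. Those loads simply add up. The queue full threshold is a single number. In practice, you observe the queues after placing the disks rather than predicting them:

```python
def place_adaptively(disks, queue_full_threshold):
    """Fill identical building-block datastores in order.

    disks: (name, outstanding_ios) pairs; outstanding_ios is a hypothetical
    summary of how much HBA queue a disk uses once its VM is running.
    Returns one list of disk names per datastore.
    """
    datastores = [[]]
    load = [0]  # HBA queue usage per datastore
    for name, ios in disks:
        if datastores[-1] and load[-1] + ios > queue_full_threshold:
            # Performance would not be acceptable: Storage vMotion the disk
            # onto a new datastore built from the same standard block.
            datastores.append([])
            load.append(0)
        datastores[-1].append(name)
        load[-1] += ios
    return datastores


disks = [("vm1", 10), ("vm2", 12), ("vm3", 8), ("vm4", 9)]
print(place_adaptively(disks, queue_full_threshold=32))
# [['vm1', 'vm2', 'vm3'], ['vm4']]
```

The sketch only ever adds to the newest Datastore and moves whatever does not fit. This mirrors the manual process described above.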